At Apriorit, we have significant expertise in developing embedded systems and embedded software based on field-programmable gate array (FPGA) technology. The flexible, reusable nature of FPGAs makes them a great fit for different applications, from driver development to data processing acceleration.

FPGAs can be programmed for different kinds of workloads, from signal processing to deep learning and big data analytics. In this article, we focus on using FPGAs to accelerate artificial intelligence (AI) workloads and on the main pros and cons of this use case.

The origins of FPGA technology

Field-programmable gate arrays (FPGAs) have been around for a few decades. These devices contain an array of configurable logic blocks along with programmable interconnects that define how the blocks are wired together. An FPGA is a generic tool that can be customized for multiple uses: unlike fixed-function chips, it can be reconfigured many times for different purposes. To specify an FPGA's configuration, developers use hardware description languages (HDLs) such as Verilog and VHDL.

Modern FPGAs can work much like application-specific integrated circuits (ASICs): when programmed properly, an FPGA can be tailored to the requirements of a particular application, just as an ASIC is. And for certain data processing acceleration tasks, FPGAs can outperform graphics processing units (GPUs).

Intel recently published research comparing two generations of Intel FPGAs against an NVIDIA GPU. The main goal of the experiment was to see whether the next generation of FPGAs could compete with the GPUs used to accelerate AI applications.

In its paper, Intel concludes that one of the tested FPGA AI accelerators, the Intel Stratix 10, can outperform a traditional GPU. This is possible when the accelerator uses compact data types instead of standard 32-bit floating-point (FP32) data: calculations become simpler, provided that accuracy stays at an acceptable level.
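To give a feel for what "compact data types" means in practice, here is a minimal sketch of symmetric 8-bit integer quantization in Python. It is not taken from Intel's paper: the per-tensor scaling scheme, the function names, and the sample numbers are our own illustrative assumptions. Values are mapped to int8 codes, the dot product is computed with integer multiply-accumulate operations (the kind of narrow arithmetic an FPGA can implement cheaply), and the result is rescaled once at the end.

```python
def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] using one shared (per-tensor) scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


def int8_dot(a_codes, a_scale, b_codes, b_scale):
    """Integer multiply-accumulate, followed by a single floating-point rescale."""
    acc = sum(x * y for x, y in zip(a_codes, b_codes))  # integer-only inner loop
    return acc * a_scale * b_scale


# Hypothetical layer weights and inputs; Python floats (64-bit) stand in for FP32 here
weights = [0.42, -1.30, 0.07, 0.95]
inputs = [1.10, 0.25, -0.60, 0.80]

exact = sum(w * x for w, x in zip(weights, inputs))
w_q, w_s = quantize_int8(weights)
x_q, x_s = quantize_int8(inputs)
approx = int8_dot(w_q, w_s, x_q, x_s)

print(f"float dot: {exact:.4f}, int8 dot: {approx:.4f}, abs error: {abs(exact - approx):.4f}")
```

The quantized result lands close to the full-precision one while the inner loop uses only small integers. Production toolchains often use finer-grained schemes (for example, per-channel scales) or other compact formats; this sketch only shows the general trade-off.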

But is this potential enough to make an FPGA the right answer to the problem of AI application acceleration? Let’s dig deeper in the next section.

Do AI applications really need FPGAs?

Before answering this question, let’s take a closer look at the problem of data processing in AI-related technologies. The use of AI-based solutions is growing fast in multiple areas, including (but not limited to) advertising, finance, healthcare, cybersecurity, law enforcement, and even aerospace.

Colorado-based market intelligence firm Tractica predicts that revenue from AI-based software will reach as much as $105.8 billion by 2025. But as the popularity of AI technologies rises, developers face two challenging tasks:

- Figuring out how to process and transfer data faster

- Figuring out how to improve the overall performance of AI-based applications

Luckily, FPGAs might be the solution to both of these challenges.

But do all AI-based applications really need FPGAs? Of course not. The biggest potential of FPGAs is in the area of deep learning.

AI applications in need of FPGA technology

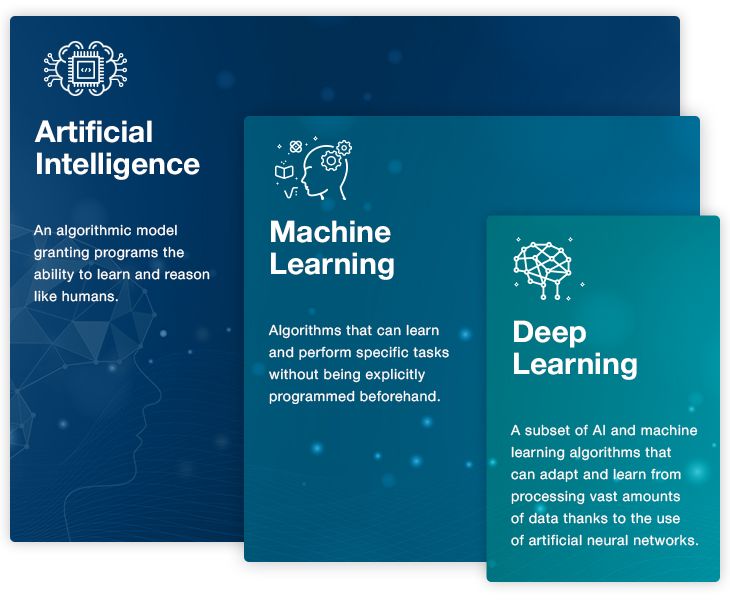

When we talk about modern AI, we usually talk about one of three things: the general idea of artificial intelligence, machine learning, or specifically deep learning.

Figure 1. The connection between Artificial Intelligence, machine learning, and deep learning

The general idea of artificial intelligence, with machines possessing human-level intelligence, has been around since at least the middle of the 20th century. Machine learning blossomed much later, starting in the 1980s.

Machine learning is based on parsing data, learning from it, and using that knowledge to make predictions or to "train" the machine to perform specific tasks. To learn, machine learning systems use different algorithms, such as clustering, decision trees, and Bayesian networks.

Deep learning is a subset of machine learning. At its core are neural networks: complex, resource-hungry learning models that were nearly impossible to use for real-world tasks before GPUs made massively parallel processing widely available.

Using a neural network model involves two stages: training, when the model is developed, and inference, when the trained model is used to perform a particular task.

Consider image recognition. To learn to distinguish, for example, different types of road signs, a neural network model must process lots of pictures, adjusting its internal parameters step by step until it achieves acceptable accuracy on the training set. The trained model is then shown a new picture and has to identify what kind of road sign it contains. Check out our article to learn more about artificial intelligence image recognition processes.
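The difference between the two stages can be sketched with a single artificial neuron in Python. This is a deliberately tiny stand-in for a real image recognition network: the two "features" and the labels are hypothetical toy data, and the neuron is trained with plain stochastic gradient descent on log loss. Training loops over the data thousands of times to adjust the weights; inference is a single weighted sum plus an activation function.

```python
import math
import random


def predict(weights, bias, features):
    """Inference: one forward pass through a single neuron with a sigmoid output."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, lr=0.5, seed=0):
    """Training: repeatedly nudge the weights to reduce the log loss (SGD)."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0]))]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y  # gradient of log loss w.r.t. z
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Hypothetical two-feature descriptions of sign images; label 1 = class A, 0 = class B
samples = [[0.9, 0.8], [0.8, 0.9], [0.2, 0.1], [0.1, 0.3], [0.85, 0.7], [0.3, 0.2]]
labels = [1, 1, 0, 0, 1, 0]

weights, bias = train(samples, labels)       # stage 1: training (many passes, offline)
score = predict(weights, bias, [0.88, 0.75])  # stage 2: inference (one pass per input)
print("class A" if score > 0.5 else "class B", round(score, 3))
```

Even in this toy case, the inference step boils down to multiply-accumulate operations, which is the workload hardware accelerators are designed to speed up.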

This is where FPGAs come into the picture. To be successful, inference requires flexibility and low latency, and FPGAs can provide both. The reprogrammable nature of an FPGA delivers the flexibility demanded by the constantly evolving structure of artificial neural networks. FPGAs also provide the custom parallelism and high memory bandwidth required for real-time inference.
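One way to picture "custom parallelism" is a dot product spread across several multiply-accumulate (MAC) units working side by side, with their partial sums combined by an adder tree. The Python sketch below is an idealized software model of that layout, not HDL and not a description of any specific FPGA design: the `lanes` parameter and the abstract "steps" it counts are our own assumptions, and the model ignores clock rates, pipelining, and memory access.

```python
def adder_tree(values):
    """Sum values pairwise, level by level, as a hardware adder tree would."""
    levels = 0
    while len(values) > 1:
        if len(values) % 2:
            values = values + [0]  # pad odd-length levels without mutating the input
        values = [values[i] + values[i + 1] for i in range(0, len(values), 2)]
        levels += 1
    return values[0], levels


def parallel_dot(weights, inputs, lanes):
    """Model `lanes` MAC units working side by side on one dot product."""
    partial = [0] * lanes
    steps = 0
    for start in range(0, len(weights), lanes):
        # In hardware, every lane would perform its multiply-accumulate in the same step
        for lane in range(lanes):
            i = start + lane
            if i < len(weights):
                partial[lane] += weights[i] * inputs[i]
        steps += 1
    total, tree_levels = adder_tree(partial)
    return total, steps + tree_levels


weights = list(range(1, 65))
inputs = [1] * 64
total_1, steps_1 = parallel_dot(weights, inputs, lanes=1)
total_16, steps_16 = parallel_dot(weights, inputs, lanes=16)
print(f"1 lane: sum={total_1}, steps={steps_1}; 16 lanes: sum={total_16}, steps={steps_16}")
```

Both configurations compute the same sum, but the 16-lane layout needs far fewer abstract steps. On an FPGA, the number of lanes can be chosen to fit a particular model and chip, which is the kind of flexibility a fixed GPU architecture does not offer.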

Another advantage is that FPGAs typically consume less power than GPUs.

FPGA-based Acceleration as a Service

FPGA-based systems can process data and solve complex tasks faster than their virtualized counterparts. And while not everyone can reprogram an FPGA for a particular task, cloud services bring FPGA-based data processing within customers' reach. Some cloud providers even offer a new service, Acceleration as a Service (AaaS), which gives customers access to FPGA accelerators.

When using AaaS, you can leverage FPGAs to accelerate multiple kinds of workloads, including:

- Training machine learning models

- Processing big data

- Video streaming analytics

- Running financial computations

- Accelerating databases

Some FPGA manufacturers are already working on cloud-based FPGAs for AI workload acceleration and other compute-intensive applications. For instance, Intel powers Alibaba Cloud's AaaS offering, called f1 instances. The Acceleration Stack for Intel Xeon CPU with FPGAs, also available to Alibaba Cloud users, offers two popular development flows: RTL and OpenCL.

Microsoft is another big company joining the race to build an efficient AI platform. Its Project Brainwave uses FPGA technology to accelerate deep neural network inference. Like Alibaba Cloud, Microsoft relies on Intel FPGAs; Brainwave runs on the Intel Stratix 10.

While Intel currently appears to lead in FPGA-based AI acceleration, another large FPGA manufacturer, Xilinx, intends to join the competition. Xilinx has announced the SDAccel integrated development environment, which is meant to make it easier for FPGA developers to work with different cloud platforms.

Pros and cons of using FPGAs for AI workload acceleration

FPGA technology shows great potential for accelerating AI-related workloads. However, it also has several drawbacks to keep in mind when considering an FPGA for accelerating data processing. Let's look at the most important pros and cons of using an FPGA to accelerate AI applications.

Advantages of FPGA technology:

- Flexibility – The ability to be reprogrammed for multiple purposes is one of the main benefits of FPGA technology. In AI solutions, individual blocks or an entire circuit can be reprogrammed to fit the requirements of a particular data processing algorithm.

- Parallelism – An FPGA can handle multiple workloads while keeping application performance at a high level and can adapt to changing workloads by switching among several programs.

- Decreased latency – An FPGA can provide higher memory bandwidth than a typical GPU thanks to its distributed on-chip memory, which reduces latency and allows it to process large amounts of data in real time.

- Energy efficiency – Machine learning and deep learning are resource-hungry, but an FPGA can deliver high performance for machine learning workloads at relatively low power.

Disadvantages of FPGA technology:

- Programming – The flexibility of FPGAs comes at a price: reprogramming the circuit is difficult, and there aren't enough experienced FPGA developers on the market.

- Implementation complexity – While using FPGAs to accelerate deep learning looks promising, only a few companies have tried to implement it in practice. For most AI solution developers, the more common combination of GPUs and CPUs may seem less problematic.

- Expense – The first two drawbacks inevitably make FPGA-based acceleration of AI applications an expensive solution. Repeatedly reprogramming a circuit is especially costly for small-scale projects.

- Lack of libraries – Few, if any, machine learning libraries support FPGAs out of the box. However, there's a promising project, LeFlow, started by a team of researchers from the University of British Columbia. The team aims to make FPGAs usable with the TensorFlow machine learning framework, making it easier for Python developers to use FPGAs for testing machine learning problems.

Conclusion

FPGAs deserve a place alongside GPUs and CPUs as AI hardware for big data and machine learning. They show great potential for accelerating AI-related workloads, inference in particular. The main advantages of using an FPGA to accelerate machine learning and deep learning are its flexibility and ability to be reprogrammed for multiple purposes, its custom parallelism, low latency, and energy efficiency.

However, the concept of AI powered by FPGAs needs further development. As of this writing, only a few major IT companies, such as Alibaba and Microsoft, offer FPGA-based cloud acceleration to their customers. The small number of manufacturers offering chips able to handle such demanding workloads also keeps this concept from blossoming.

As an AI software development company, we have a team of experienced programmers who have mastered the art of embedded system development and data processing. Knowing all the ins and outs of FPGA technology, we'll gladly help you find a way to use this technology to benefit your projects.
