Reducing a test set without losing coverage of major test cases is one of the main goals of any tester working on test design. In this article, we will discuss how to reduce the number of tests without losing major ones using the analysis of interconnections and the inner logic of a product. This article will be mainly useful for testers who often test different windows with a number of interconnected options and parameters.

So let’s see which products the analysis of interconnections can be applied to, and how to apply it.

Products to Which Interconnection Analysis Can Be Effectively Applied

It is reasonable to apply the analysis of interconnections while testing products (or separate components) that work with different types of input data and have several types of output data. To cover all functionality while designing tests for such products, we need to test a number of options and parameters that depend on each other. This leads to the necessity of creating and performing a great number of tests, which, in fact, are rarely performed to the full extent.

Let’s consider an example of such a product.

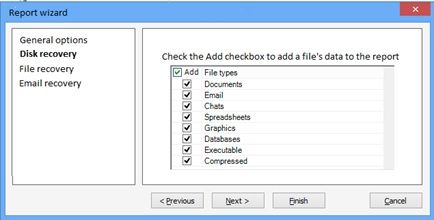

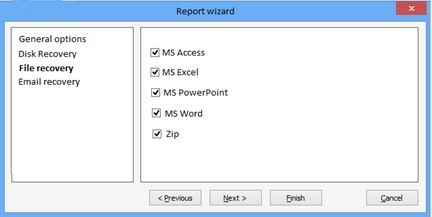

For example, let’s take a tool for data recovery. Suppose our tool is capable of recovering deleted data from physical and logical disks, damaged files (MS Access, MS Excel, MS Word, etc.), and deleted mail messages from MS Outlook, Outlook Express, and Windows Mail databases.

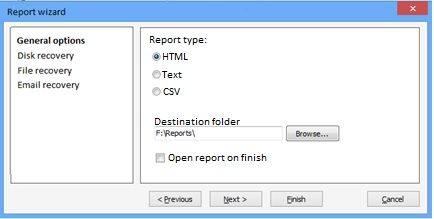

The tool we consider can generate a report on the results of data recovery in HTML, CSV, or Text format after the deleted data is recovered.

The Reports generation wizard for our product will look approximately as follows:

Let’s identify the problem: we need to test a wizard that has a number of different options that depend on each other. In addition, we have several types of input data on which these options must be tested, and several types of output data.

This wizard requires writing test cases, in which we must cover all the input data and test the results of work. Meanwhile, we need to optimize the number of tests as best as we can.

Optimizing Number of Tests during Wizard Options Testing

First of all, we need to determine the connections between the options and other entities and determine what data and results different options affect.

We have the following connections in our wizard:

- Between input data and a result

- Between input/output data and options

- Between different options

Let’s look more closely at each connection…

Analysis of Connection of Input Data and Result

The first connection is the one between input data and a result.

We have the following types of input data in our wizard:

- Physical disks

- Logical disks

- MS Access files

- MS Excel files

- MS PowerPoint files

- MS Word files

- Zip archives

- MS Outlook email databases

- Outlook Express email databases

- Windows Mail email databases

I.e. we have 10 different types of input data that can be combined into three groups:

- disks from which we perform the recovery of deleted files (logical and physical disks);

- damaged files, which we recover (MS Access, MS Excel, MS PowerPoint, MS Word, and Zip archives);

- email databases from which we recover deleted email messages (MS Outlook, Outlook Express, and Windows Mail).

After we have determined the groups of input data, we start the analysis of interconnections. For this purpose, we need to study the specifics of the inner implementation and the architecture of our component.

We can access the code and study the inner structure of the system on our own. But the fastest and most convenient way to learn the inner structure of the system is to discuss it with experienced developers. After each type of input data and its influence on a result have been discussed in detail, we can exclude the types of data that do not influence results. Thus, we can divide the input data into classes of equivalence using our knowledge of the inner implementation of the system.

In our case, we know that the deleted files recovery algorithm works the same way for both logical and physical disks, and thus we can combine the disks into one class of equivalence. In much the same way, we combine the damaged files into the second class and the email databases into the third class. I.e. there is no sense in testing all 10 types of input data: testing one type from each group and generating all types of reports for the selected type would be enough. For example, we can generate HTML, Text, and CSV reports for a logical disk, an MS Excel file, and an MS Outlook email database.
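The reduction described above can be sketched in a few lines of Python. This is only an illustration: the dictionary layout and the names `INPUT_CLASSES`, `REPRESENTATIVES`, and `REPORT_FORMATS` are assumptions made for the example, not part of the product.

```python
from itertools import product

# Equivalence classes of input data, grouped as in the text above.
INPUT_CLASSES = {
    "disks": ["Physical disk", "Logical disk"],
    "damaged files": ["MS Access", "MS Excel", "MS PowerPoint", "MS Word", "Zip archive"],
    "email databases": ["MS Outlook", "Outlook Express", "Windows Mail"],
}
# One representative per class; these mirror the example in the text,
# but any member of a class would do.
REPRESENTATIVES = {
    "disks": "Logical disk",
    "damaged files": "MS Excel",
    "email databases": "MS Outlook",
}
REPORT_FORMATS = ["HTML", "Text", "CSV"]

# Guard against a representative that does not belong to its class.
for group, chosen in REPRESENTATIVES.items():
    assert chosen in INPUT_CLASSES[group], f"{chosen} is not in {group}"

all_inputs = [item for members in INPUT_CLASSES.values() for item in members]
full_matrix = list(product(all_inputs, REPORT_FORMATS))
reduced_matrix = list(product(REPRESENTATIVES.values(), REPORT_FORMATS))

print(len(full_matrix))     # 30 tests: every input type with every format
print(len(reduced_matrix))  # 9 tests: one representative per class
for input_type, report_format in reduced_matrix:
    print(f"Generate a {report_format} report for: {input_type}")
```

The reduction is only valid as long as the developers' statement holds that all members of a class go through the same algorithm; if one type gets its own code path later, it should become its own class.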

Analysis of Connection of Options and Input/output Data

The second connection is the one between input/output data and options.

We need to determine the data each option affects or doesn’t affect.

It is best to analyze all options in sequence for this purpose. We need to determine the following for each option: what input data it is required for, where it is used, and what types of reports it affects. After analyzing the options of our wizard, we have the following groups:

- Common options that depend neither on input data, nor on output data;

- Input and output data options, which depend on input data and affect the final result. I.e. we must test these options with each group of input data and with all types of reports.

For example:

Common options

- The Destination folder option: a user defines the location to which a report will be saved. It must be tested (with a network folder, a nonexistent folder, etc.), but it does not depend on input data and doesn’t influence results. That is why we test it separately, without binding it to input/output data. I.e. to test this option, we save a report to a network folder, a nonexistent folder, a deeply nested folder, a folder with no access to it, etc. with one type of input data and with a report in one of the formats.

We have two such options: Destination folder and Open report on finish.

Input/output data options

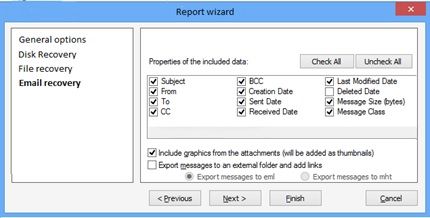

Most of our options are of this type. For example, let’s look at options on the Email recovery page:

- The Properties of the included data group of options: a user selects the properties of email messages to be included in a report. I.e. these options affect the final result and depend on input data. In theory, to cover these options completely, we would need to generate all types of reports for each type of database with each option separately. We would also need to test all possible combinations of options. But first, we need to analyze the influence of input data on each option. As we did earlier, we study the inner architecture of the system and discuss its specifics with developers. This allows us to determine whether we need to test each option with all types of email databases or whether testing with one type is enough. In our example, the algorithms for reading the properties from databases are the same for all three types of databases. Thanks to this, we can test the options with only one type of database. In much the same way, after studying the inner structure of the system, we determine the degree of dependency of each option on the type of report. In our case, the algorithms for writing each property to a report are the same for all report formats, i.e. it is enough to generate one type of report for one type of email database for each option.

Thus, we need to test the functioning of 12 options by creating one type of report for one type of email database.

We optimize the number of tests for combinations of options using the Pairwise technique (more details on the technique can be found here: http://pairwise.org/).

- The Include graphics from the attachments option also affects the content of a report. But this option is selected by default. I.e. all the reports we have previously created were generated with this option selected. That is why instead of adding tests for generation of all types of reports with this option selected, we add one test for generation of one report with this option not selected.

- The Export messages to an external folder option will be considered further.
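To illustrate the Pairwise reduction mentioned above for the Properties of the included data options, here is a minimal greedy sketch. It is not the algorithm of any particular pairwise tool, and the option names `property_1` … `property_12` are placeholders, since the real option names are not listed here. The number of rows it produces depends on the algorithm, so it may differ from the counts a dedicated tool gives.

```python
from itertools import combinations, product


def pairwise_suite(parameters):
    """Greedily build rows until every value pair of every two parameters is covered."""
    names = list(parameters)
    order = {name: index for index, name in enumerate(names)}

    def key(p, pv, q, qv):
        # Store each pair with the parameters in their declaration order.
        return ((p, pv), (q, qv)) if order[p] < order[q] else ((q, qv), (p, pv))

    # A dict keeps insertion order, so the result is deterministic.
    uncovered = dict.fromkeys(
        key(a, va, b, vb)
        for a, b in combinations(names, 2)
        for va, vb in product(parameters[a], parameters[b])
    )
    suite = []
    while uncovered:
        # Seed the row with a still-uncovered pair so that every row makes progress.
        (a, va), (b, vb) = next(iter(uncovered))
        row = {a: va, b: vb}
        for name in names:
            if name in row:
                continue

            def gain(value):
                # How many uncovered pairs this value adds with the row so far.
                return sum(key(name, value, o, row[o]) in uncovered for o in row)

            row[name] = max(parameters[name], key=gain)
        suite.append(row)
        for p, q in combinations(names, 2):
            uncovered.pop(key(p, row[p], q, row[q]), None)
    return suite


# Twelve on/off options (placeholder names).
options = {f"property_{i}": [True, False] for i in range(1, 13)}
suite = pairwise_suite(options)
print(f"{len(suite)} rows instead of {2 ** len(options)} exhaustive combinations")
```

Each row is one wizard configuration to run with one type of report and one type of email database; together the rows guarantee that every pair of option states occurs at least once.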

Analysis of Interconnection of Options

The third connection is the one between different options.

We consider options that make other options available.

For example, the Export messages to an external folder option makes two other options available: the export in the EML and MHT formats.

First of all, we analyze the dependency of the parent option on the input data. In our example, this is the only option that depends on the type of input data. I.e. we need to test this option with each type of email database. Then we analyze the influence of the options on results. In our case, these options do not depend on the output format of a report. I.e. to test this option, it will be enough to generate one type of report for all types of databases with export to EML and to MHT respectively.

In most cases, the testing of such connected options is already covered on the previous stages, when the analysis of connection of input/output data and options is performed.
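The resulting test matrix for the Export messages to an external folder option can be written down as follows. The field names and the choice of HTML as the single report format are assumptions made for the example.

```python
from itertools import product

EMAIL_DATABASES = ["MS Outlook", "Outlook Express", "Windows Mail"]
EXPORT_FORMATS = ["EML", "MHT"]  # child options enabled by the parent option
REPORT_FORMAT = "HTML"           # any single format would do (assumption)

# The parent option depends on the input type, so every database is tested;
# the report format does not matter, so it is fixed.
export_tests = [
    {"database": database, "export_format": export_format, "report_format": REPORT_FORMAT}
    for database, export_format in product(EMAIL_DATABASES, EXPORT_FORMATS)
]

for test in export_tests:
    print(test)
print(len(export_tests))  # 6 tests: 3 databases x 2 export formats
```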

Formatting Results for Reviewer

In most cases, after test cases are designed, they must undergo static testing, i.e. other members of the testing team must review them. Taking into account that we have performed a fairly deep analysis while designing test cases, it would be reasonable to create a high-level document for an intermediate review before writing complete test cases with steps.

This document must be reviewed by other members of a testing team, as well as by a team of developers, who have expert knowledge of our system. This would allow us to apply the knowledge of all experienced developers to the analysis of the inner structure of a product and not to miss any important tests.

The document must be small and easy to read. You can format it as follows:

1. General Options

1.1. Destination: add test cases for testing destination to different places

1.2. Open report on finish: generate one type of report with the option selected and with the option not selected

2. Email Recovery Options

2.1. The Include graphics from the attachments option: generate one type of report with the option not selected

2.2. The Properties of included data options: for each option, generate one type of a report for one type of email database

2.3. The Properties of included data options: generate test cases for option combinations using Pairwise technique and for each combination generate one report for one email database type

2.4. Export messages to an external folder and export to EML: generate one report for each email database type

2.5. Export messages to an external folder and export to MHT: generate one report for each email database type

It would be very easy to review such a document.

The review of this document will allow us to find and correct the mistakes that appeared during the analysis of dependencies. After this document is reviewed, we can start writing complete test cases with steps.

Conclusion

Now that the test set has been reduced, we can calculate the gain from the work done.

First of all, let’s calculate the number of tests without our optimization.

We have:

- 10 types of input data

- 3 types of output data

- 4 wizard pages and 31 options

If we were to create test cases to test each option with all 10 types of input data and all types of output data we would have the following results:

For the General options page:

- For the HTML option: 10 tests (create an HTML report for 10 types of input data).

- For the Text option: 10 tests (create a Text report for 10 types of input data).

- For the CSV option: 10 tests (create a CSV report for 10 types of input data).

- For the Destination folder option we test the saving of a report to a network folder, a nonexistent folder, a deeply nested folder, a folder with no access to it, etc. with all 10 types of input data and 3 types of output data. We have: 4 types of folders * 10 types of input data * 3 types of output data = 120 tests.

For the Disk recovery page: to test all 8 options with 2 types of input data and 3 types of output data we would require 48 tests.

And to test all possible combinations of these options with all input and output types of data we need: 28 combinations * 2 types of input data * 3 types of output data = 168 tests.

For the File recovery page:

- The testing of each option with 3 types of output data: 5 options * 3 types of output data = 15 tests.

- The testing of combinations of options: 10 combinations * 3 types of reports = 30 tests. 45 tests in total.

For the Email recovery page:

- Properties of included data: 12 options * 3 types of input data * 3 types of output data = 108 tests.

To test the combinations of these options we would require: 66 combinations * 3 types of input data * 3 types of reports = 594 tests.

- Include graphics from the attachments: to test this option we would require 9 tests.

- Export messages to an external folder and export to EML: 9 tests.

- Export messages to an external folder and export to MHT: 9 tests.
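The combination counts used above (28, 10, and 66) match the number of unordered pairs of options on each page, which can be checked with a short script. The tuple layout is an assumption made for the example; for the File recovery page the input-type factor is set to 1 only to mirror the calculation above, which multiplies by the output types alone.

```python
from math import comb

REPORT_FORMATS = 3

# (page, number of options, number of input types used in the count above)
pages = [
    ("Disk recovery", 8, 2),
    ("File recovery", 5, 1),
    ("Email recovery, Properties of included data", 12, 3),
]

counts = {}
for page, options, input_types in pages:
    pairs = comb(options, 2)  # unordered pairs of options
    single = options * input_types * REPORT_FORMATS
    combined = pairs * input_types * REPORT_FORMATS
    counts[page] = (single, pairs, combined)
    print(f"{page}: {single} single-option tests, {pairs} pairs, {combined} combination tests")
```

Note that pairs are the smallest kind of combination; covering triples or all subsets of options would make the unoptimized numbers even larger.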

So to test all wizard options and their combinations we would require 1140 tests.

Even if you write all 1140 tests and prepare complete test documentation with 100% of test cases covered, it is most unlikely that you will ever manage to perform all the tests.

Now let’s calculate the number of tests after our analysis and optimization:

For the General options page:

- For the HTML option: 3 tests (creation of one report for one type of data from each group).

- For the Text option: 3 tests (creation of one report for one type of data from each group).

- For the CSV option: 3 tests (creation of one report for one type of data from each group).

- For the Destination folder option we test the saving of a report to a network folder, a nonexistent folder, a deeply nested folder, a folder with no access to it, etc. with one type of input data and a report in one of the formats. We have 4 tests.

For the Disk recovery page:

To test 8 options with one type of input data and one type of output data we would require 8 tests.

Using the Pairwise technique to generate the combinations of options we get 7 combinations. To test these combinations we would require 7 tests.

For the File recovery page:

- Testing of each option with one type of report: 5 tests.

- Testing of option combinations using the Pairwise technique: 7 combinations with one type of a report = 7 tests.

12 tests in total.

For the Email recovery page:

- Properties of included data: we would require 12 tests to test 12 options with one type of input data and one type of output data.

To test the combinations of these options: 10 combinations * 1 type of input file * 1 type of report = 10 tests.

- Include graphics from the attachments: we would require 1 test to test this option.

- Export messages to an external folder and export to EML: 3 tests.

- Export messages to an external folder and export to MHT: 3 tests.
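The optimized counts listed above can be tallied with a short script; the dictionary keys are shorthand labels introduced for the example.

```python
# Optimized test counts per item, taken from the list above.
optimized = {
    "General: HTML": 3,
    "General: Text": 3,
    "General: CSV": 3,
    "General: Destination folder": 4,
    "Disk recovery: 8 options": 8,
    "Disk recovery: pairwise combinations": 7,
    "File recovery: 5 options": 5,
    "File recovery: pairwise combinations": 7,
    "Email recovery: 12 properties": 12,
    "Email recovery: pairwise combinations": 10,
    "Email recovery: Include graphics": 1,
    "Email recovery: export to EML": 3,
    "Email recovery: export to MHT": 3,
}

total = sum(optimized.values())
print(total)  # 69
```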

To make the difference clearer, let’s look at the following table:

| Option | Number of tests without optimization | Number of tests after optimization |
| --- | --- | --- |
| General options page -> HTML | 10 | 3 |
| General options page -> Text | 10 | 3 |
| General options page -> CSV | 10 | 3 |
| General options page -> Destination folder | 120 | 4 |
| 8 options on the Disk recovery page | 48 | 8 |
| Combinations of 8 options on the Disk recovery page | 168 | 7 |
| 5 options on the File recovery page | 15 | 5 |
| Combinations of 5 options on the File recovery page | 30 | 7 |
| 12 options from the Properties of included data group on the Email recovery page | 108 | 12 |
| Combinations of 12 options from the Properties of included data group on the Email recovery page | 594 | 10 |
| Email recovery page -> Include graphics from the attachments | 9 | 1 |
| Email recovery page -> Export messages to an external folder and export to EML | 9 | 3 |
| Email recovery page -> Export messages to an external folder and export to MHT | 9 | 3 |

Altogether we have 69 tests instead of 1140. Of course, these 69 tests do not guarantee 100% coverage of test cases: we inevitably miss something when reducing the test set. On the other hand, we lose significantly more when we have 1140 tests and do not perform all of them. Performing all 1140 tests would require approximately 95 man-hours, while 69 tests require approximately 6. I.e. we save a significant amount of time without losing the most important test cases.
