Docker is a platform for packaging web services and applications into containers that run in standardized environments. Developers often use containers because they’re convenient for continuous integration and continuous delivery (CI/CD). But there are certain peculiarities of using Docker during the development lifecycle that developers should consider.

Introduction

In this article, we describe scenarios where we apply Docker to handle CI and CD for a Django web service. We also recommend some tools and services that can help you integrate Docker into your development and deployment processes. Finally, we cover some pitfalls of Docker and some problems you may encounter when using it.

This article doesn’t explain the basic concepts behind Docker; the official Docker documentation covers them.

Using Docker when developing a web service

Docker is becoming increasingly popular among developers, so we decided to try it ourselves when we built a Django web application.

Before switching to Docker, we already had experience supporting the previous version of this web application. It had plenty of problems, however, that made the system fragile and the delivery process complicated. By switching to Docker, we wanted to:

- Eliminate differences in the configuration of development, staging, and production environments

- Overcome the lack of automated testing due to complicated environment settings

- Avoid long and painful deployments on production servers

- Simplify the process of changing the environment configuration and replicating the server in case of failure

Want to enhance your web application?

Let’s improve your product’s performance with the help of Apriorit’s professional web development teams.

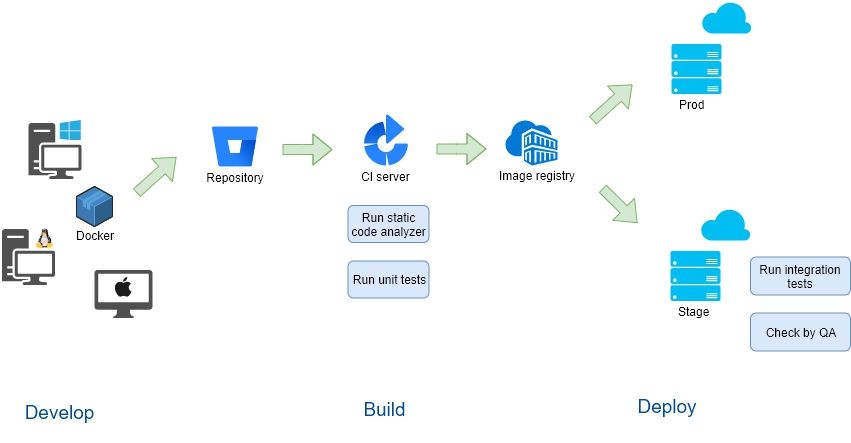

After integrating Docker, we achieved these goals and set up continuous integration and continuous delivery despite the complexity of the application environment. As a result, the workflow now looks like this:

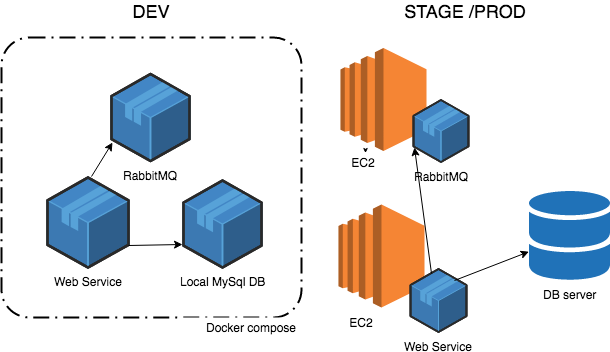

Environment unification

The main application of Docker is to package all environment settings into a Docker image. This simplifies the setup of different environments with the same configurations. Using Docker, developers can easily prepare their working environment, keep it up to date, and be sure that it remains the same as the production environment.

Though the overall infrastructure may differ between the local development environment and the staging and production environments, the main components are the same containers with the same configurations.

Easy replication of configuration changes

A Docker image preserves all environment settings including operating system settings, environment variables, and installed packages. Thus, it becomes easier to promote changes across all servers. To implement changes, we just need to update the Docker image and launch a container with the updated version.

Improved CI/CD

Thanks to simplified environment management, we were able to improve CI and CD by:

- setting up automated testing;

- simplifying and speeding up deployment processes.

With Docker in place, the pipeline has to build an image of our service and then deploy it.

The build phase consists of the following steps:

- preparing sources;

- building a Docker image;

- running tests (all the components were in place as Docker containers, so we could run unit tests with the local database and a freshly built version of the web service as part of the build process);

- pushing the Docker image to the repository (Docker registry).
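
The build phase above can be sketched as a short Python script that drives the Docker CLI. This is only an illustration under stated assumptions: the registry name `registry.example.com/webservice` and the use of `python manage.py test` as the test command are hypothetical, the source preparation step is omitted, and the database container used by the tests is left out for brevity.

```python
import shlex
import subprocess

# Hypothetical image name, not taken from the article.
IMAGE = "registry.example.com/webservice"


def build_phase_commands(tag):
    """Return the build-phase steps as docker CLI argument lists."""
    image = f"{IMAGE}:{tag}"
    return [
        # Build the image from the Dockerfile in the current directory.
        ["docker", "build", "--tag", image, "."],
        # Run the test suite inside a throwaway container of that image.
        ["docker", "run", "--rm", image, "python", "manage.py", "test"],
        # Publish the tested image to the registry.
        ["docker", "push", image],
    ]


def run_build_phase(tag, dry_run=True):
    """Print each step; execute it as well when dry_run is False."""
    for command in build_phase_commands(tag):
        print(shlex.join(command))
        if not dry_run:
            # check=True stops at the first failing step,
            # so a failed test run prevents the push.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_build_phase("1.0.0")
```

Running the script as is only prints the three commands. Calling `run_build_phase("1.0.0", dry_run=False)` on a machine with Docker installed would execute them in order.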

The deployment phase includes:

- triggering servers to pull new versions of the Docker image;

- replacing containers with the latest version from a new image;

- running auto tests to ensure that our web service is available and our API is responsive.
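
The last step, checking that the service is available after deployment, could look roughly like the sketch below. It uses only the Python standard library. The function name, the retry settings, and the health check URL in the usage note are assumptions made for illustration, not details from our project.

```python
import time
import urllib.error
import urllib.request


def wait_until_healthy(url, attempts=10, delay=1.0, timeout=3.0):
    """Poll url until it answers with HTTP 200; return True on success."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            # Connection refused, timeout, or an HTTP error status:
            # the new container may still be starting, so retry.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

A deployment script could call `wait_until_healthy("https://staging.example.com/api/health")` (a hypothetical URL) and fail the pipeline if the result is `False`.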

Integrating Docker with development tools

Here’s an overview of some tools that can simplify the processes of building and deploying Docker images.

Development

PyCharm

PyCharm is a natural choice for Python development with Docker, as it integrates with Docker directly. It lets you run and debug your code inside a Docker container right from your integrated development environment (IDE). This is extremely convenient, as there’s no need to configure a local working environment before you start on a project. All you need to do is set up your IDE.

Keep in mind, however, that only PyCharm Professional Edition supports Docker.

Docker Compose

Docker Compose can help you set up locally the other infrastructure components your service depends on, such as a database server. It manages the launch and interaction of all the containers in your system. PyCharm supports Docker Compose as a build and execution environment.
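
As a rough illustration, a Compose configuration for a web service with a database can be generated from Python. Compose files are YAML, and since JSON is valid YAML, the standard `json` module is enough. Everything in this sketch is an assumption: the service names, the `postgres:16` image, the port, the environment variables, and the placeholder password. It also assumes Docker Compose V2, where the top-level `version` key is optional.

```python
import json

# Hypothetical service definitions; image tags, ports, and credentials
# are placeholders, not values from the article.
compose = {
    "services": {
        "web": {
            "build": ".",
            "command": "python manage.py runserver 0.0.0.0:8000",
            "ports": ["8000:8000"],
            # Mount the sources so code changes are visible in the container.
            "volumes": ["./:/app"],
            "environment": {"DATABASE_HOST": "db"},
            # Start the database container before the web container.
            "depends_on": ["db"],
        },
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "example"},
        },
    }
}


def render_compose(config):
    """Serialize the configuration as JSON, which is also valid YAML."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(render_compose(compose))
```

Saving the output as `compose.json` and running `docker compose -f compose.json up` should start both containers. In practice, most teams write the same structure by hand in a YAML file.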

Building and storing Docker images

In order to use a Docker image, you need to build it somewhere and rebuild it from there in case of changes to the Dockerfile. There are several ways to do this:

Docker Hub

Docker Hub provides repositories for storing images. It also supports automated building of images whenever the Dockerfile changes. Built images are pushed to the attached repository. Repositories can be public or private according to the requirements.

AWS CodeBuild

AWS CodeBuild is an alternative service from Amazon for building source code, including Docker images. It’s integrated with Elastic Container Registry (ECR), which provides repositories for storing built images. This service is quite convenient, especially if your infrastructure is also hosted on Amazon.

Build server

Another option is to build the Docker image on your own build server if it has enough capacity. This allows you to keep Docker’s cached layers on the agent, which can speed up the build process. For comparison, at the time of writing, neither CodeBuild nor Docker Hub supported Docker caching; both always built an image from scratch.

However, you may still need a repository in order to store built Docker images and use them for deployment.

It’s also worth mentioning that a Docker image itself can serve as the build environment, for example in the following scenarios:

- The build agent has already pulled the Docker image with the configuration necessary for the project build (compiler and linker installed, third-party dependencies, etc.)

- A directory with sources is mounted to the Docker container and the binary is built inside the Docker container
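
The second scenario can be sketched as a helper that assembles the `docker run` invocation. The `gcc:13` image, the `/src` mount point, and the `make all` command are illustrative assumptions. Any image that contains your toolchain would work the same way.

```python
import shlex
from pathlib import Path


def containerized_build_command(image, source_dir, build_command):
    """Return a docker run invocation that builds source_dir inside image."""
    source = Path(source_dir).resolve()
    return [
        "docker", "run",
        "--rm",                          # remove the container after the build
        "--volume", f"{source}:/src",    # mount the sources into the container
        "--workdir", "/src",             # run the build from the mounted directory
        image,
        *build_command,
    ]


if __name__ == "__main__":
    command = containerized_build_command("gcc:13", ".", ["make", "all"])
    print(shlex.join(command))
```

Because the sources are mounted rather than copied, the build output ends up in the same directory on the build agent. Passing the returned list to `subprocess.run(command, check=True)` would execute the build.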

Read also

Microservices and Container Security: 11 Best Practices

Discover how to protect your microservices infrastructure and ensure the integrity of your app with our comprehensive guide on container security!

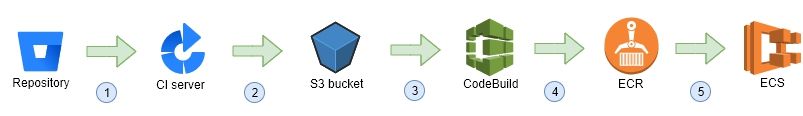

Deployment

Deploying a built Docker image means pulling the new version of the image onto the server instances and launching new containers from it. This process may look different depending on the infrastructure you use. However, there are services and platforms that can handle deployment of new images and launch containers with the specified configuration almost automatically, for instance Giant Swarm, DigitalOcean, and Elastic Container Service (ECS). Here’s how this process can be performed with ECS:

- A build server is triggered when new changes are ready for testing

- The build server uploads source code to S3 to make it available for AWS CodeBuild

- CodeBuild downloads the source code and builds a Docker image

- CodeBuild pushes the built image to the ECR repository

- ECS fetches the new image and replaces the old task with the new one, which uses the new version of the image
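
For illustration, a build server could trigger these steps through the AWS CLI. The sketch below only assembles the commands. The bucket, project, cluster, and service names are hypothetical, and using `--force-new-deployment` is one possible way to make ECS pull the image again, not necessarily how our pipeline did it.

```python
import shlex


def ecs_release_commands(bucket, project, cluster, service,
                         archive="source.zip"):
    """Return AWS CLI argument lists for the release flow described above."""
    return [
        # Upload the source archive where CodeBuild can fetch it.
        ["aws", "s3", "cp", archive, f"s3://{bucket}/{archive}"],
        # Start the CodeBuild project that builds and pushes the image.
        ["aws", "codebuild", "start-build", "--project-name", project],
        # Ask ECS to start new tasks, which pull the image again.
        ["aws", "ecs", "update-service", "--cluster", cluster,
         "--service", service, "--force-new-deployment"],
    ]


if __name__ == "__main__":
    for command in ecs_release_commands(
        "example-bucket", "example-project", "example-cluster", "example-service"
    ):
        print(shlex.join(command))
```

Note that `start-build` returns before the build finishes, so a real pipeline would wait for the build to complete before updating the service.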

Pitfalls of working with Docker

Platform specifics

Although Docker is intended to unify work with environments, its behavior has some operating system-specific peculiarities. Specifically, if you have a working project environment with Docker on Ubuntu, your team members may experience problems with it when using Docker for Windows or macOS.

The difference is that on Ubuntu, Docker uses the kernel of the host system under the hood, and file system mounts are native. In contrast, Docker for Windows and macOS runs containers inside a virtual machine that’s running Linux, so it can’t mount the host file system natively. This additional level of virtualization causes some difficulties.

For both Windows and macOS, there are two versions of Docker available: Docker for Mac/Windows and Docker Toolbox. These are the differences between them:

- Docker for Mac/Windows is available only for newer versions of those operating systems: macOS 10.10.3 or higher and Windows 10 Pro/Enterprise. In contrast, Docker Toolbox also works with earlier operating system versions.

- Docker for Mac/Windows is closer to a native app, as it uses the built-in virtualization platforms (HyperKit on macOS, Hyper-V on Windows). Docker Toolbox relies mostly on VirtualBox.

- Docker for Windows makes it impossible to use VirtualBox, VMware, or other hypervisors at the same time, as Hyper-V is turned on. Docker for Mac doesn’t have this restriction.

- Docker Toolbox may have additional problems with mounted volumes compared to the native versions.

Performance problems

First, keep in mind that Docker can consume a lot of disk space. This is particularly true in a development environment, where you rebuild the image repeatedly and stopped containers and cached images accumulate. Eventually, other applications may run short of disk space. There are some useful commands that can help you clear some space:

- Delete stopped containers:

      docker rm -v $(docker ps -a -q -f status=exited)

- Delete images that aren’t being used:

      docker rmi $(docker images -f "dangling=true" -q)

- Delete unwanted volumes:

      docker run -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/docker:/var/lib/docker --rm martin/docker-cleanup-volumes

Another issue you can face is with image build time. Build times may vary depending on the image size and number of instructions when building, but they can be a bottleneck for both CI/CD and local development. If you have a large image and building it takes more time than you expect, there are some ways to speed up the process:

- Use a multi-stage build

This feature is available from Docker 17.05 and allows you to split the Dockerfile into several stages that are built separately. The results of one stage can be copied into later stages.

In this way, you can create separate stages for development and production, excluding everything unnecessary from the production image.

- Remove everything unnecessary from the Dockerfile

Sometimes, it’s useful to review the Dockerfile and check whether all installed packages and environment changes are still necessary. By doing so, you can reduce the size of the image for production use.

- Use the cache of base images that have already been pulled or built

The Docker cache includes every layer that Docker has already built. That’s why the second build of an image is quicker than the first. To get the most out of the cache, put instructions that rarely change (such as installing dependencies) before instructions that change often (such as copying the source code), because a changed instruction invalidates the cache for every instruction after it.
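
To see why instruction order matters for the cache, here is a toy model in Python. It is a deliberate simplification and not Docker’s actual implementation: it assumes each layer’s cache key depends on the previous layer’s key, the instruction text, and, for `COPY`, the contents of the copied file. The Dockerfile lines and file contents are made up for the example.

```python
import hashlib


def layer_keys(instructions, file_contents):
    """Return one cache key per Dockerfile instruction (toy model).

    Each key depends on the previous key and the instruction text.
    For COPY, it also depends on the contents of the copied file.
    """
    keys = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256()
        digest.update(parent.encode())
        digest.update(instruction.encode())
        words = instruction.split()
        if words[0] == "COPY":
            # Hash the source file so that editing it changes the key.
            digest.update(file_contents[words[1]].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def reused_layers(before, after):
    """Count leading layers whose keys are unchanged between two builds."""
    count = 0
    for old, new in zip(before, after):
        if old != new:
            break
        count += 1
    return count


DOCKERFILE = [
    "FROM python:3.12",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY app.py .",
]

first = layer_keys(DOCKERFILE, {"requirements.txt": "aiohttp", "app.py": "v1"})
second = layer_keys(DOCKERFILE, {"requirements.txt": "aiohttp", "app.py": "v2"})
print(reused_layers(first, second))  # prints 3
```

In this model, editing `app.py` leaves the first three layers reusable, so the dependency installation isn’t repeated. If `COPY app.py .` came right after `FROM`, the same edit would leave only one reusable layer.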

Read also

Investigating Kubernetes from Inside

Explore how Kubernetes can enhance your web development projects, enabling you to deliver exceptional results while minimizing costs.

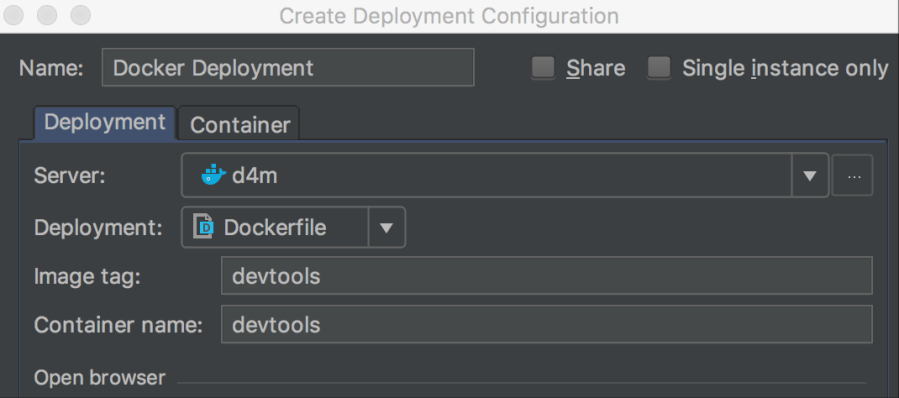

Setting up auto-reload in PyCharm using aiohttp devtools

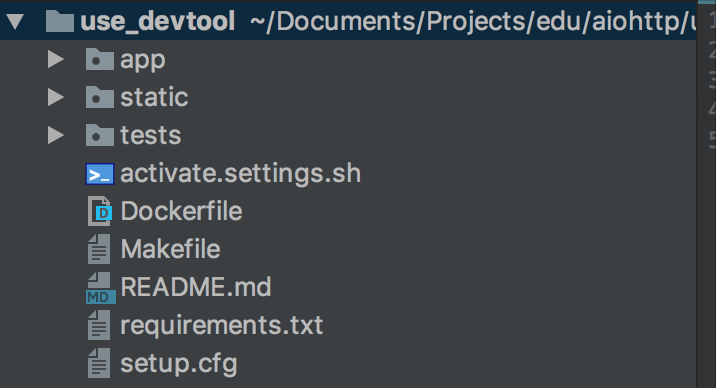

If you’re developing a web service in Python using aiohttp, you might run into the absence of hot reload (auto reload) in PyCharm with Docker. Without auto reload, any changes you make to the source code while the service is running won’t be reflected until you restart the service. Django supports this feature (which is really convenient), and the same functionality can be achieved with aiohttp-devtools. Below is a guide on how to configure this feature with aiohttp-devtools inside Docker.

Preconditions:

- macOS Sierra 10.12.5

- Docker version 17.06.0-ce, build 02c1d87

- PyCharm 2017.1.5

- Prepare the Dockerfile:

FROM python:latest

RUN pip install aiohttp-devtools

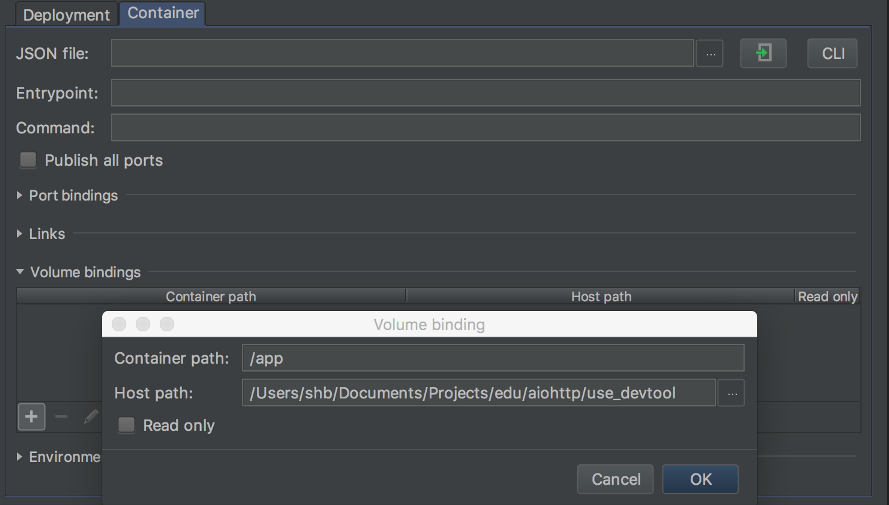

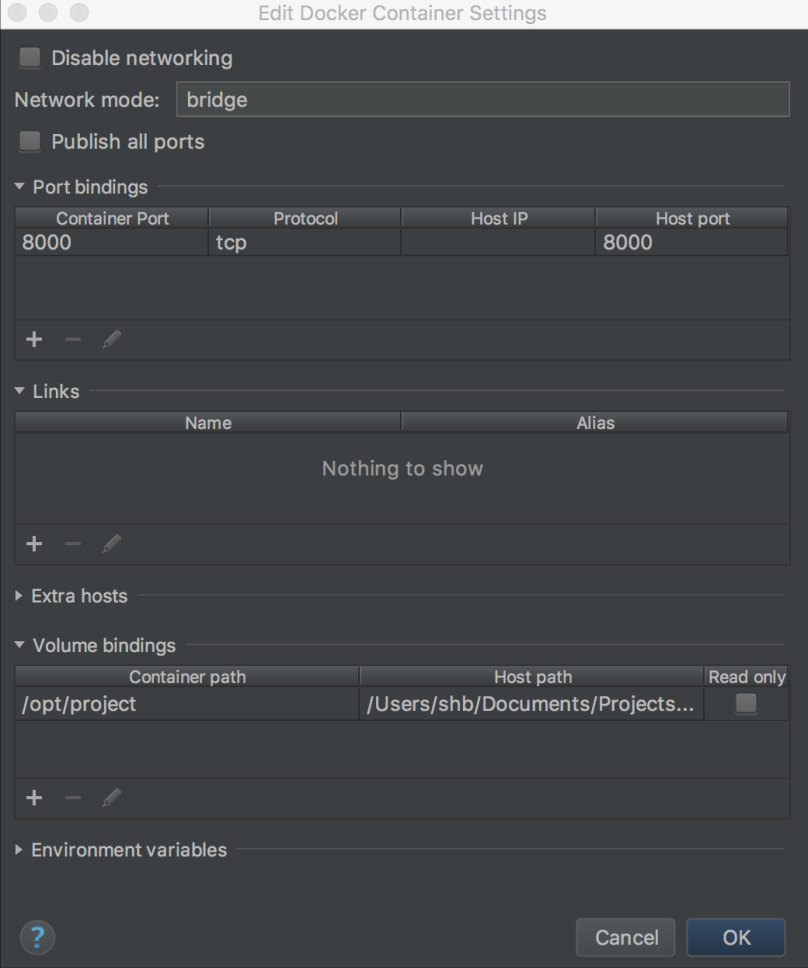

WORKDIR /app- Set up the Docker deployment in PyCharm:

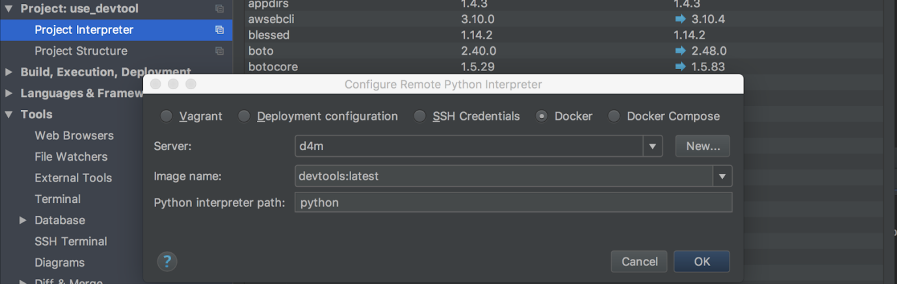

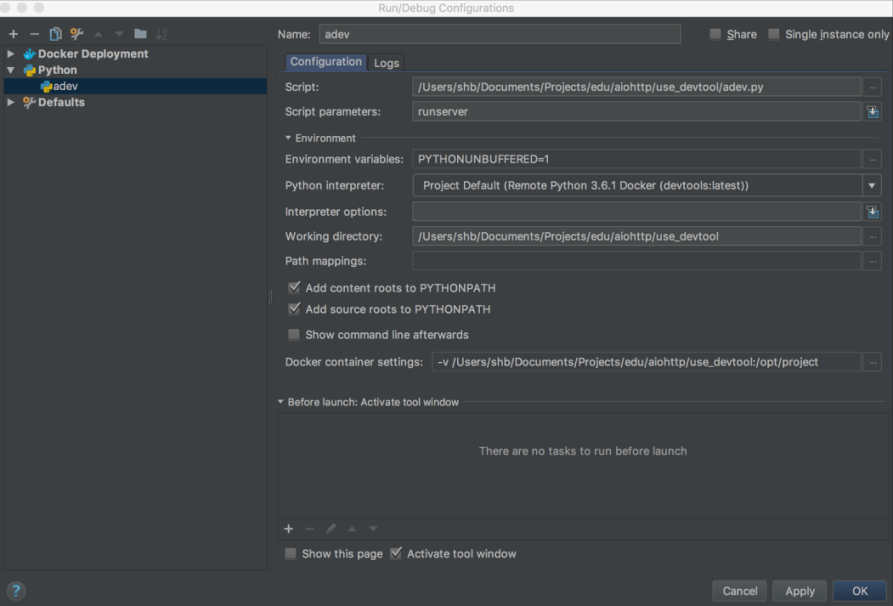

- Configure the Python interpreter:

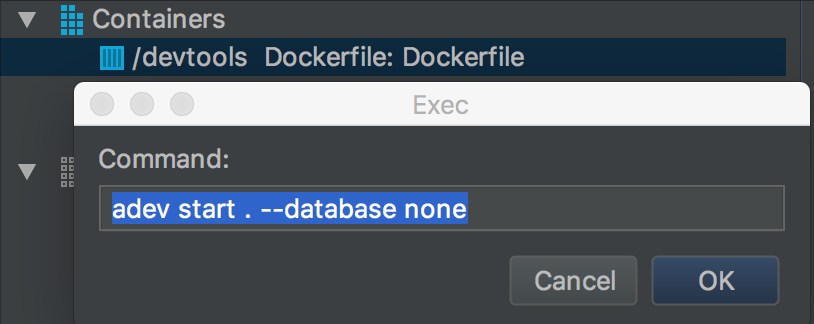

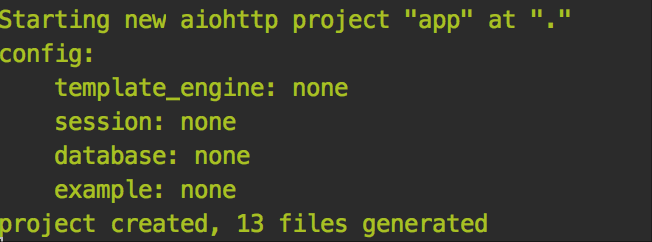

- Start a simple app:

- Extend the Dockerfile:

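      FROM python:latest

      RUN pip install aiohttp-devtools

      # Tell adev where the application code and static files live
      ENV AIO_APP_PATH="app/"
      ENV AIO_STATIC_PATH="static/"

      # Install the project's dependencies
      COPY requirements.txt .
      RUN pip install -r requirements.txt

      WORKDIR /app

- Create adev.py: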

Since there’s no way to run the adev runserver command directly from PyCharm, the workaround is to create a custom startup script that starts the dev server.

      from aiohttp_devtools.cli import cli

      if __name__ == '__main__':
          # Invoke the adev command-line interface
          cli()

- Set up Run/Debug configuration:

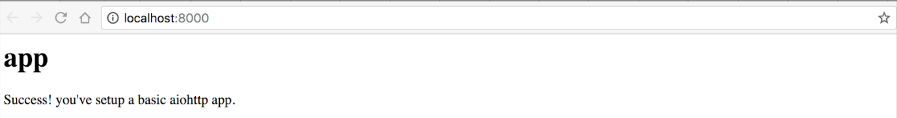

- Get results:

Note: this won’t work with Docker Toolbox because of problems related to using virtual machines.

Conclusion

Docker is a powerful and helpful tool that can make life easier when setting up continuous integration and continuous delivery for your web service. However, there are some pitfalls to using Docker that you should consider before development begins. With lots of experience supporting CI/CD processes, Apriorit would be glad to assist you with your Docker-based web application development.
