Thanks to its fast, highly accurate, and context-aware responses, Generative Pre-trained Transformer 3 (GPT-3) has become one of the most popular AI models for natural language processing (NLP) tasks. Getting started with GPT-3 is quick and simple, so it may seem like a must-have tool for any NLP project. However, GPT-3 has some limitations and disadvantages you need to be aware of before you start using it.

In this article, we examine how GPT-3 works and the pros and cons of using it to build AI-powered solutions, and we provide examples of using OpenAI’s GPT-3 with Python. We also discuss ways of fine-tuning GPT-3 for your particular tasks.

This post will be useful for development teams that are looking for a no-nonsense overview of GPT-3 and ways to use it in their generative AI development projects.


What is so revolutionary about GPT-3?

GPT-3 is a pre-trained language processing model that uses deep learning algorithms to generate texts similar to those a human would write. Developed by OpenAI in 2020, GPT-3 has taken the AI world by storm with its impressive capabilities in language generation, translation, summarization, question answering, and more.

GPT-3 focuses on NLP, a subfield of AI that deals with analyzing and generating human language and enables communication between humans and machines. In contrast to many other NLP solutions, GPT-3 usually needs only a short prompt from the user and no additional training to return high-quality output.

For example, it can generate source code to your specifications or analyze a set of texts based on given criteria. OpenAI achieved such results by training GPT-3, a generative model, on terabytes of public data, including articles, posts, books, and code repositories.

Generative NLP models can not only analyze text and find similarities and differences in it but also understand the relations between pieces of text and create new, similar text. For example, when analyzing a user’s request, GPT-3 can understand the context and the relations between words and produce a human-like response that satisfies the request.

GPT-3 itself isn’t publicly available. OpenAI has described the model’s architecture but hasn’t released its weights or training code, so we can’t inspect how it works internally. Instead, OpenAI offers a paid API for GPT-3 that developers can integrate into their products. The price for using this API depends on the GPT-3 model you choose for your project (we’ll take a look at them later in this article) and on the number of tokens processed, counting both your prompt and the generated text.

Before we examine how to use GPT-3 in AI development, let’s take a look at the pros and cons of investing your time and money in working with this tool.

Pros and cons of using GPT-3 in software development

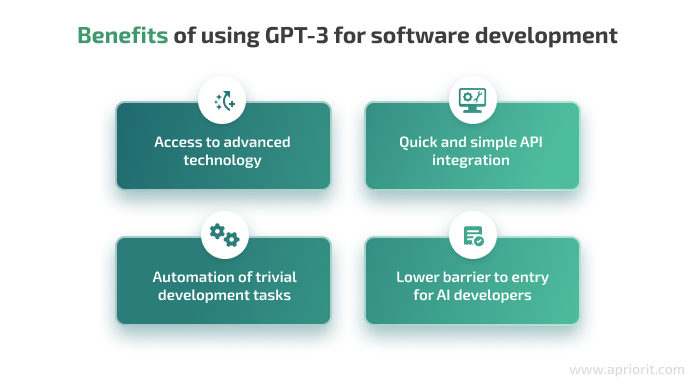

Like any cutting-edge and revolutionary technology, GPT-3 quickly became widely popular among software development companies. Here’s a list of benefits of using this language processing tool when developing your products:

Access to advanced technology. GPT-3 gives you access to state-of-the-art natural language processing even if implementing and maintaining such a large-scale, highly accurate model on your own isn’t an option.

Quick and simple API integration. To start using GPT-3, you only need to install the corresponding library from OpenAI and run several commands in Python or another language of your choice. We’ll show you how to use GPT-3 in Python in the next section of this article.

Automation of trivial development tasks. GPT-3 can take over trivial but time-consuming tasks from developers: generating documentation and test data, researching development issues, and even writing source code.

Lower barrier to entry for AI developers. Using GPT-3 through its API doesn’t require specialized AI development knowledge. You mainly need to know how to compose effective requests (prompts) for GPT-3 and, when necessary, how to fine-tune the model.

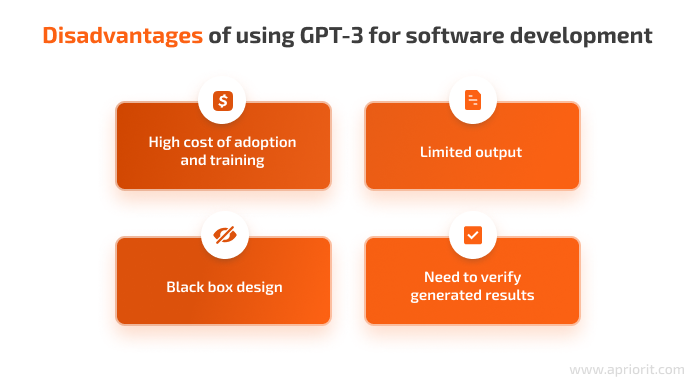

This tool, however, also has its downsides that you need to take into account before you decide to start using it. Some of the key cons of using GPT-3 include:

High cost of adoption and training. GPT-3 pricing depends on several parameters: the chosen model, the number of tokens processed (in both the prompt and the generated output), and whether you use a base model or train and then use a fine-tuned one. The final cost can add up to be too high for individual developers and small businesses. Moreover, for some NLP tasks, plenty of quality open-source NLP models are available.

Limited output. The most advanced GPT-3 model can process no more than about 4,000 tokens (around 3,000 English words) per request, and this limit is shared between the prompt and the response. In English text, one token corresponds to roughly four characters on average. Most other models are limited to 2,048 tokens (around 1,500 English words). This limitation can be an obstacle if you intend to use GPT-3 for generating long texts, such as code documentation.

Black box design. Since only the GPT-3 API is available to the public, it’s impossible to see how it works from the inside and make significant adjustments. You can only fine-tune GPT-3’s models in the way we’ll discuss at the end of this article.

Need to verify generated results. While GPT-3 produces high-quality, human-like text and code, its output can still contain errors, statistical and human biases, irrelevant or outdated information, plagiarism, and so on. That’s why, when building a commercial product, you need to review GPT-3 output manually before using it.
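Two of these cons, token-based pricing and the per-request token limit, can be estimated before you send a request. The sketch below uses the rule of thumb of about four characters per token. It assumes, as with GPT-3’s completion models, that the prompt and the response share one limit and are billed at the same per-1,000-token rate. The helper names and the price in the example call are hypothetical placeholders, not official values.

```python
import math

# Rule of thumb: one token is roughly four characters of English text.
# Exact counts come from OpenAI's tokenizer; this is only an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the number of tokens in English text (rounded up)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def max_completion_tokens(prompt: str, context_limit: int = 4000) -> int:
    """Tokens left for the response when prompt and completion share one per-request limit."""
    return max(0, context_limit - estimate_tokens(prompt))


def estimate_cost(prompt_tokens: int, completion_tokens: int, price_per_1k: float) -> float:
    """Cost of one request, assuming prompt and completion tokens are billed
    at the same per-1,000-token rate."""
    if min(prompt_tokens, completion_tokens) < 0 or price_per_1k < 0:
        raise ValueError("token counts and price must be non-negative")
    return (prompt_tokens + completion_tokens) / 1000 * price_per_1k


prompt = "Write a detailed README for a command-line tool that converts CSV files to JSON."
budget = max_completion_tokens(prompt, context_limit=2048)
# HYPOTHETICAL price: look up the current per-1,000-token rate for your model
cost = estimate_cost(estimate_tokens(prompt), budget, price_per_1k=0.02)
print(f"Prompt ~{estimate_tokens(prompt)} tokens, up to {budget} tokens left, worst case ~${cost:.4f}")
```

Passing the computed budget as max_tokens keeps a request within the limit. Multiplying the per-request cost by the expected request volume gives a first estimate of monthly spend.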

With these pros and cons of using GPT-3 in mind, let’s see how to integrate GPT-3 into AI projects.


Setting up GPT-3

To start using GPT-3 for AI development, you’ll need to install OpenAI’s Python library with pip. The examples in this article use the library’s pre-1.0 interface (openai.Completion), which was removed in version 1.0, so pin the version:

```shell
pip install "openai<1.0"
```

After this, you’re ready to start using GPT-3 in Python projects. Let’s import the library and set up an API key, which you can get after registering on the OpenAI website.

Here’s how to set up the library in Python:

```python
import openai

# Use your OpenAI API key (pre-1.0 versions of the library also read it
# from the OPENAI_API_KEY environment variable automatically)
openai.api_key = "YOUR_API_KEY_HERE"
```

The API exposes several parameters that affect GPT-3’s behavior and its responses to your requests. Understanding these parameters and how they affect GPT-3 can help you get the most out of this powerful tool. Let’s take a quick look at them.

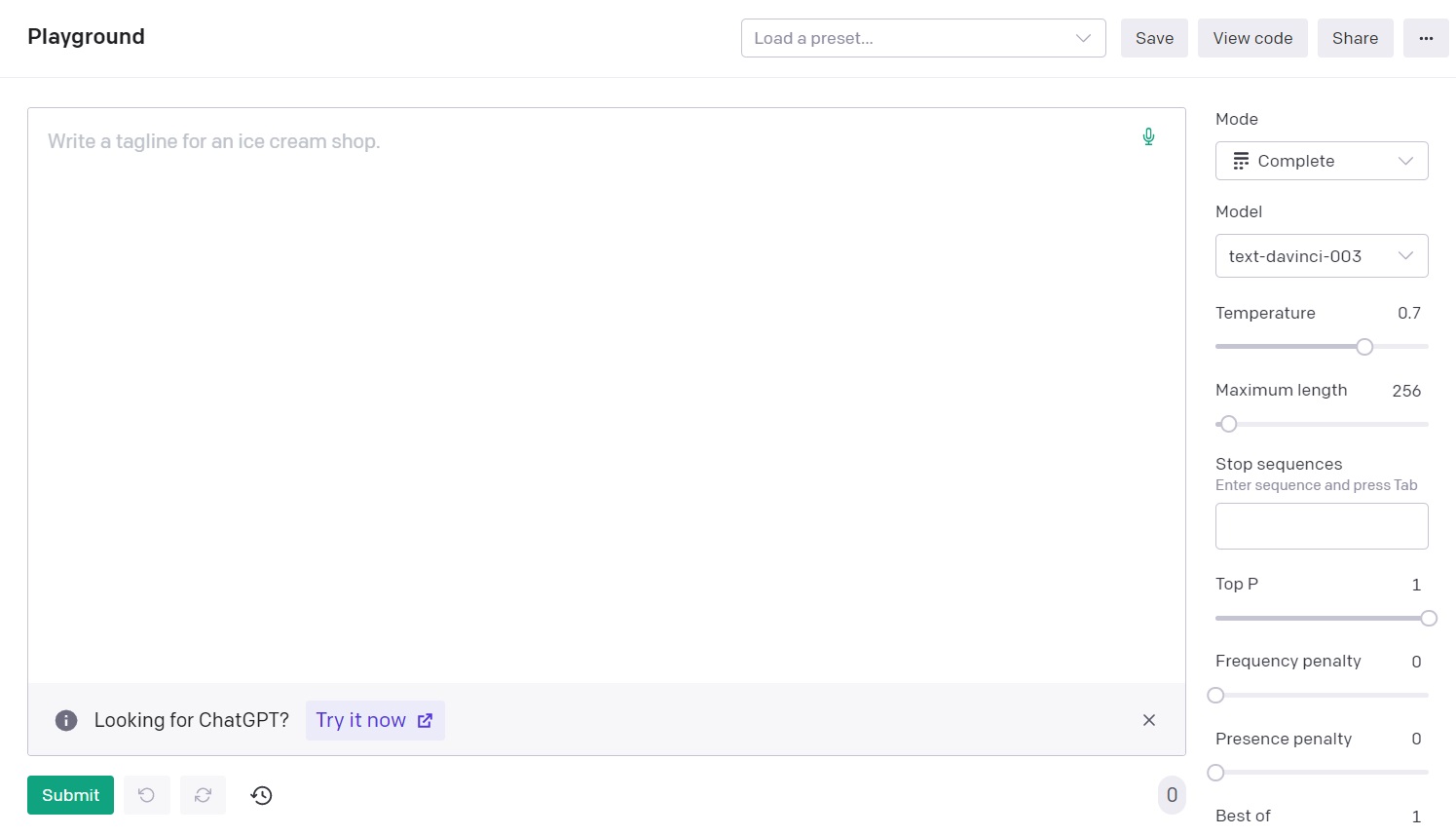

Configuring API parameters

You can adjust GPT-3’s parameters either in OpenAI’s GPT-3 Playground or in your API requests. Here’s a brief overview of some of the most important parameters:

engine. Choose which GPT-3 model fulfills your request. GPT-3 offers four model families: Ada, Babbage, Curie, and Davinci. In a request, you specify a concrete model name such as text-davinci-002. We’ll compare the models later in this article.

temperature. Control the randomness of the text generated by GPT-3. A lower temperature results in more predictable and conservative output, while a higher temperature returns more diverse and creative output.

n. Set the number of completions GPT-3 generates for a given prompt. If you set n=1, GPT-3 will generate a single response. If you set n=3, it will generate three completions, and so on.

max_tokens. Limit the maximum number of tokens GPT-3 generates for a given prompt. Since GPT-3 usage is billed per token (counting both your prompt and the generated output), this parameter also limits how much each request can cost.

stop. Set a string (or a list of strings) that makes GPT-3 stop generating text once it appears. For example, if you set stop="[STOP]", GPT-3 will stop generating text when it reaches the "[STOP]" string in its output. The stop sequence itself is not included in the returned text.
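To see how these parameters fit together, the sketch below collects them into a dictionary of keyword arguments and checks a few ranges before a request is sent. The helper build_completion_params is hypothetical, not part of the openai library. The limits it enforces (temperature between 0 and 2, at most four stop sequences) reflect our reading of OpenAI’s Completions API reference, so verify them against the current documentation.

```python
from typing import List, Optional, Union


def build_completion_params(
    prompt: str,
    engine: str = "text-davinci-002",
    temperature: float = 0.5,
    n: int = 1,
    max_tokens: int = 256,
    stop: Optional[Union[str, List[str]]] = None,
) -> dict:
    """Validate request parameters and return them as keyword arguments (hypothetical helper)."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if n < 1:
        raise ValueError("n must be at least 1")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    if isinstance(stop, list) and len(stop) > 4:
        raise ValueError("at most four stop sequences are allowed")
    params = {"engine": engine, "prompt": prompt, "temperature": temperature,
              "n": n, "max_tokens": max_tokens}
    if stop is not None:  # omit the key entirely when no stop sequence is set
        params["stop"] = stop
    return params


params = build_completion_params("List three uses of GPT-3.", temperature=0.2, n=3, stop=["[STOP]"])
print(params)
# With openai<1.0 installed, the dictionary can be passed straight to the API:
# response = openai.Completion.create(**params)
# for choice in response.choices:  # one entry per completion (n=3)
#     print(choice.text)
```

Validating locally catches typos in parameter values before they cost an API call. Keeping the parameters in one dictionary also makes it easy to log exactly what was sent.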

By adjusting these and other parameters, you can customize GPT-3’s behavior to meet the specific needs of your projects. Next, let’s look at how the GPT-3 language models differ and how to choose one.


Choosing a GPT-3 model

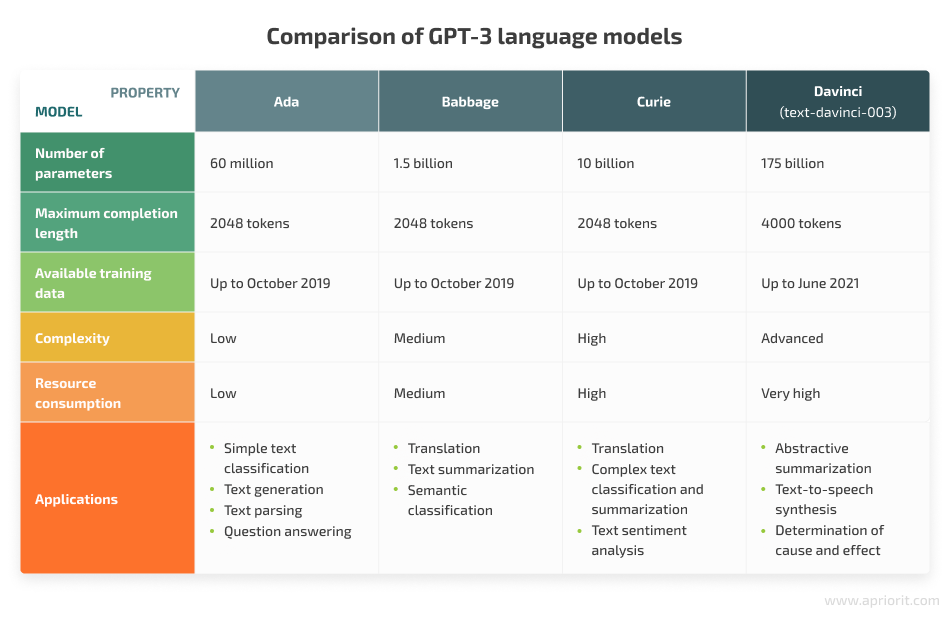

The GPT-3 language model family includes several models, each with its own strengths and weaknesses. Let’s compare their key properties and applications:

Ada is the fastest and cheapest model, the best choice for simple NLP tasks and projects with tight budget or latency requirements.

Babbage balances task complexity and cost, making it a good choice for products that don’t require extremely high accuracy.

Curie is a more powerful and more expensive model that can handle a wide range of complex language-related tasks with high accuracy.

Davinci (the latest version of which is text-davinci-003) is the largest and most capable model in the GPT-3 family. text-davinci-003 is often referred to as a GPT-3.5 model. Davinci is the best choice for products that require the highest accuracy in challenging tasks and can justify its higher cost and latency. You can still use the previous versions, text-davinci-001 and text-davinci-002, but they generally produce less accurate output.

The choice of a GPT-3 model depends on the specific task at hand, the resources available, and the level of accuracy required. In the next section, we overview some of the most common use cases for OpenAI’s GPT-3 with practical code examples written in Python.

Using GPT-3 for five real-life tasks

Unlike many NLP models, GPT-3 isn’t built for one specific application. With a well-crafted prompt, it can perform a wide range of language-related tasks.

Here are the five key tasks you can accomplish with GPT-3:

- Text generation

- Text translation

- Text summarization

- Question answering

- Text classification

Let’s take a look at how to use OpenAI’s GPT-3 for each of these tasks, which types of software and services you can build with it, and what the Python code looks like. Note that all examples use the text-davinci-002 model.

Text generation

One of the most widely used features of GPT-3, and one of the key LLM use cases, is its ability to generate stories, descriptions, emails, questions, code samples, and more based on your request. You can use it in a variety of AI-based projects:

- Different types of chatbots for websites and applications

- Virtual tutors on educational platforms

- Content creation and management systems

- Procedurally generated computer games

To generate text with GPT-3, all you need to do is pass a prompt. Here’s an example:

```python
import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# The prompt for GPT-3
prompt = "Write a story about a magical kingdom"

# Generate text using GPT-3
text = openai.Completion.create(
    engine="text-davinci-002",
    prompt=prompt,
    max_tokens=1024,
    n=1,
    stop=None,
    temperature=0.5,
).choices[0].text

# Print the generated text
print(text)
```

Here’s the output for this prompt:

Once upon a time, there was a magical kingdom named Azura, where magic and wonder filled the air. The kingdom was ruled by a wise and just queen, who was loved by all of her subjects. However, there was a curse that threatened the land. Every 100 years, a dragon would descend from the mountains and demand a tribute of the kingdom’s most precious treasure. If the treasure was not given, the dragon would breathe fire and destroy everything in its path. The queen was determined to break the curse and protect her people. She gathered the bravest knights and the wisest sorcerers in the kingdom and set out to defeat the dragon. They searched high and low, but no one knew how to defeat the dragon. One day, a young girl named Lily came to the queen with a strange, glowing stone. She said she had found it in the forest and believed it had magical powers. The queen took the stone and, with the help of her advisors, discovered that it was a piece of the dragon’s heart, shattered long ago by a great hero. The queen realized that if they could gather all the pieces of the dragon’s heart and reassemble it, they would have the power to defeat the dragon. She sent out a call to all the people of Azura, asking for their help in finding the missing pieces. Lily, who was brave and curious, decided to join the quest. She traveled far and wide, facing many dangers along the way. But she did not give up, and eventually, she found all the pieces of the dragon’s heart. The queen and her team reassembled the heart, and with its power, they defeated the dragon once and for all. The kingdom was saved, and the curse was broken. Lily was hailed as a hero, and the queen offered her a place at her side as her advisor. From that day on, peace and prosperity reigned in Azura, and the people lived happily ever after. And whenever they saw the bright, glowing stone that had once been a piece of the dragon’s heart, they remembered the bravery of Lily and the power of love and unity.


Text translation

At the time of writing, GPT-3 can translate among 95 languages. It takes into account grammar, correct sentence structure, various meanings of words, and the context of the text in the prompt to output accurate and human-like translations.

This can come in handy in a variety of AI projects where you need to translate text, such as:

- Translation and language learning services

- Multi-language chatbots

- Context-aware chatbots

- User support solutions

- Adapting your product for a new market

GPT-3 has no dedicated translation endpoint. Instead, you state the task and the target language in the prompt, and the model usually detects the source language on its own.

For example, let’s ask GPT-3 to translate a French question into English:

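```python
import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# The text to be translated
text = "Bonjour, comment ça va?"

# The target language
target_language = "English"

# There is no translation endpoint, so the task is stated in the prompt
prompt = f"Translate the following text into {target_language}:\n\n{text}\n\nTranslation:"

# Translate the text using GPT-3
translation = openai.Completion.create(
    engine="text-davinci-002",
    prompt=prompt,
    max_tokens=100,
    n=1,
    stop=None,
    temperature=0,
).choices[0].text.strip()

# Print the translated text
print(translation)
```

The output for this prompt is: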

Hello, how are you?
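Because translation is requested through an ordinary prompt, it helps to keep the wording in one reusable template so that every request is phrased the same way. The function below is a hypothetical helper, not part of the openai library. It builds the prompt string you would pass to openai.Completion.create(), and it names the source language only when you know it.

```python
from typing import Optional


def build_translation_prompt(text: str, target_language: str,
                             source_language: Optional[str] = None) -> str:
    """Build a translation prompt for a completion model (hypothetical helper).

    If source_language is omitted, the model is left to detect it.
    """
    source = f" from {source_language}" if source_language else ""
    return (
        f"Translate the following text{source} into {target_language}.\n\n"
        f"Text: {text}\n"
        f"{target_language}:"
    )


print(build_translation_prompt("Bonjour, comment ça va?", "English", source_language="French"))
```

Ending the prompt with the target language name followed by a colon nudges the model to answer with the translation alone rather than with commentary.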

Text summarization

There are many cases when you may need to summarize large chunks of text into a smaller and more concise form. This is where GPT-3’s text summarization capabilities come in handy.

You can use GPT-3 for text summarization when you need to:

- Analyze user feedback

- Summarize educational materials for learning systems

- Summarize documentation for user support

- Parse text into spreadsheets

Here’s a practical example of summarizing a text with GPT-3:

```python
import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# The text to be summarized
text = """
Photosynthesis is the process by which plants, algae, and some bacteria convert light energy into chemical energy in the form of glucose (a type of sugar). This process takes place in the chloroplasts of plant cells and involves the absorption of light by pigments called chlorophyll. The energy from the absorbed light is then used to convert carbon dioxide (CO2) and water (H2O) into glucose through a series of chemical reactions. The oxygen produced as a by-product of this process is released into the atmosphere, making photosynthesis one of the primary sources of oxygen on Earth.

The overall chemical equation for photosynthesis can be written as: 6 CO2 + 6 H2O + light energy → C6H12O6 + 6 O2. In this equation, carbon dioxide and water are the reactants and glucose and oxygen are the products. The process of photosynthesis can be divided into two stages: the light-dependent reactions and the light-independent reactions. The light-dependent reactions take place in the thylakoid membranes of the chloroplasts and involve the transfer of energy from light to the energy-rich molecule ATP. The light-independent reactions, also known as the Calvin cycle, take place in the stroma of the chloroplasts and involve the use of the energy from ATP to synthesize glucose from CO2.
"""

# There is no summarization endpoint, so the task is stated in the prompt
prompt = f"Summarize the following text in a few sentences:\n{text}\nSummary:"

# Summarize the text using GPT-3
summary = openai.Completion.create(
    engine="text-davinci-002",
    prompt=prompt,
    max_tokens=256,
    n=1,
    stop=None,
    temperature=0.3,
).choices[0].text.strip()

# Print the summarized text
print(summary)
```

And here’s the output for this prompt:

Photosynthesis is the process by which green plants, algae, and some bacteria convert light energy into chemical energy in the form of glucose. It involves the absorption of light by chlorophyll, the conversion of carbon dioxide and water into glucose through chemical reactions, and releases oxygen as a by-product. The process is divided into two stages: light-dependent reactions and light-independent reactions, involving energy transfer and glucose synthesis, respectively.
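Texts longer than the per-request token limit have to be summarized in parts. One common approach, shown here as a sketch rather than an OpenAI feature, works in two passes. First, split the text into chunks that fit a token budget and summarize each chunk with a prompt like the one above. Then summarize the combined partial summaries. The splitter below reuses the rough four-characters-per-token estimate. The name split_into_chunks and its default budget are our own assumptions.

```python
from typing import List

CHARS_PER_TOKEN = 4  # rough average for English text


def split_into_chunks(text: str, max_tokens_per_chunk: int = 1500) -> List[str]:
    """Split text on paragraph boundaries so each chunk stays under an estimated token budget.

    A single paragraph longer than the budget becomes its own (oversized) chunk.
    """
    max_chars = max_tokens_per_chunk * CHARS_PER_TOKEN
    chunks: List[str] = []
    current = ""
    for paragraph in (p.strip() for p in text.split("\n\n")):
        if not paragraph:
            continue  # skip empty paragraphs
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            current = candidate  # paragraph still fits in the current chunk
        else:
            if current:
                chunks.append(current)
            current = paragraph  # start a new chunk with this paragraph
    if current:
        chunks.append(current)
    return chunks


document = "\n\n".join(["First paragraph " * 50, "Second paragraph " * 50, "Third paragraph " * 50])
for number, chunk in enumerate(split_into_chunks(document, max_tokens_per_chunk=300), start=1):
    print(number, len(chunk))
```

Splitting on paragraph boundaries keeps related sentences together, which usually gives better partial summaries than cutting the text at a fixed character offset.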


Question answering

Another useful ability of GPT-3 is answering questions, taking into account their meaning and context. This can come in handy in a variety of AI-powered products, such as:

- Virtual assistants

- Search engines

- Knowledge base parsers

- Virtual tutors or learning platform chatbots

- Customer support services

Here’s how GPT-3 answers a question about the capital of France:

```python
import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# The question to be answered
question = "What is the capital of France?"

# Answer the question using GPT-3 (the question itself is the prompt)
response = openai.Completion.create(
    engine="text-davinci-002",
    prompt=question,
    max_tokens=1024,
    n=1,
    stop=None,
    temperature=0.5,
).choices[0].text

# Print the response
print(response)
```

The output is the following answer:

The capital of France is Paris.
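For knowledge base parsers and customer support services, answers are usually more reliable when the prompt supplies the relevant reference text and tells the model to stay within it. The template below is a hypothetical sketch of that pattern. The instruction wording and the fallback reply are assumptions you would refine through prompt design, and the knowledge base article is made-up sample text.

```python
def build_qa_prompt(question: str, context: str, fallback: str = "I don't know.") -> str:
    """Build a question-answering prompt that grounds the answer in the supplied context."""
    return (
        "Answer the question using only the context below. "
        f'If the answer is not in the context, reply "{fallback}"\n\n'
        f"Context:\n{context.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


# Made-up knowledge base article used only for illustration
kb_article = "Refunds are available within 30 days of purchase. Contact support to start a refund."
print(build_qa_prompt("How long do I have to request a refund?", kb_article))
```

The resulting string goes into the prompt parameter of openai.Completion.create(), as in the example above. A low temperature keeps the answer close to the context.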

Text classification

GPT-3 can classify texts using its own categories or ones you provide. For example, it can determine the language of a text along with its sentiment and intent, analyze text based on your criteria, and so on.

Text classification can be useful for:

- Sentiment analysis

- Topic and content classification and tagging

- Content and correspondence filtering

- Customer feedback analysis

- Spam filtering

Here’s an example of using GPT-3 for text classification in Python:

```python
import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# The prompt to be answered
prompt = "Classify this tweet: 'I love spending time with my family and friends!'"

# Classify the tweet using GPT-3
predicted_class = openai.Completion.create(
    engine="text-davinci-002",
    prompt=prompt,
    max_tokens=1024,
    n=1,
    stop=None,
    temperature=0.5,
).choices[0].text

# Print the predicted class
print(predicted_class)
```

The output will be:

Positive.

Note that we didn’t specify any classification categories in our prompt, so GPT-3 chose them itself (in this case, sentiment).
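When your application needs a fixed set of classes, you can list the allowed categories in the prompt and map the model’s reply back onto that list. The sketch below shows both steps. The helper names and the label set are our own illustrative choices, and a reply outside the set is returned as None so your code can handle it explicitly.

```python
from typing import Optional, Sequence


def build_classification_prompt(text: str, labels: Sequence[str]) -> str:
    """Ask the model to pick exactly one category from a fixed list."""
    return (
        f"Classify the text into one of these categories: {', '.join(labels)}.\n\n"
        f"Text: {text}\n"
        "Category:"
    )


def parse_label(completion: str, labels: Sequence[str]) -> Optional[str]:
    """Map a raw completion onto an allowed label, ignoring case, whitespace, and trailing periods."""
    cleaned = completion.strip().strip(".").lower()
    for label in labels:
        if cleaned == label.lower():
            return label
    return None  # the model answered with something outside the label set


labels = ["Positive", "Negative", "Neutral"]
prompt = build_classification_prompt("I love spending time with my family and friends!", labels)
print(prompt)
print(parse_label(" Positive.", labels))  # prints: Positive
```

Normalizing the reply matters because completions often arrive with leading whitespace or a trailing period, as in the "Positive." output shown above.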

As you can see, GPT-3 supports a variety of language-related applications out of the box. You can use GPT-3 as is or fine-tune the model to adapt it to your particular task. Let’s see how you can do the latter.


Fine-tuning GPT-3: practical examples

GPT-3 generally provides accurate responses out of the box, but some tasks require additional tuning. To decide whether the model needs to be tailored to your needs, you can first try your task in OpenAI’s Playground.

There are two key ways to adapt GPT-3 to your task:

Prompt design is the process of trying out different versions of a prompt until you find one that produces the desired output. For example, to get the model to generate descriptions for a certain product, you can write a prompt like "Write a short description of the product: [product name]". GPT-3 will generate a description based on this prompt and the product name you insert.

It’s best to use prompt design for the following tasks:

- Text generation for simple and well-defined outputs (e.g. product descriptions, headlines)

- Text completion

- Text summarization

- Text correction
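Prompt design often goes beyond a single instruction. Adding a few worked input/output examples to the prompt (few-shot prompting) shows the model the format and length you expect. The sketch below builds such a prompt from the product description task. The "Description:" label and the helper name are our own choices, not an OpenAI convention.

```python
from typing import Sequence, Tuple

INSTRUCTION = "Write a short description of the product: "


def build_few_shot_prompt(product_name: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Prepend worked examples to the task prompt so the model copies their style and length."""
    parts = [f"{INSTRUCTION}{name}\nDescription: {description}" for name, description in examples]
    parts.append(f"{INSTRUCTION}{product_name}\nDescription:")  # the item the model should complete
    return "\n\n".join(parts)


examples = [
    ("Apple iPhone 12",
     "The Apple iPhone 12 features a sleek design, an advanced camera system, "
     "and a powerful A14 Bionic chip."),
]
print(build_few_shot_prompt("Samsung Galaxy S21", examples))
```

With an empty example list, the function falls back to the plain zero-shot prompt. This makes it easy to compare both variants while iterating on the prompt design.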

Fine-tuning is additional training of the model on a task-specific dataset. For example, to train GPT-3 to perform sentiment analysis, you can use a dataset of reviews labeled with their sentiment. The model will learn to predict the sentiment of a given review.

Fine-tuning is preferable to prompt design for the following tasks:

- Part-of-speech tagging

- Text classification

- Text generation for complex and varied outputs (e.g. poetry, creative writing)

- Question answering

Let’s take a look at two examples of the fine-tuning process: generating product descriptions and performing sentiment analysis.

To generate product descriptions, you need to train GPT-3 on product descriptions such as the following:

| Product name | Description |
|---|---|
| Apple iPhone 12 | The Apple iPhone 12 features a sleek design, an advanced camera system, and a powerful A14 Bionic chip. |
| Samsung Galaxy S21 | The Samsung Galaxy S21 boasts a large and vibrant display, improved camera system, and fast charging capabilities. |

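Assuming the data is stored in product_descriptions.txt with one "product name, description" pair per line, you can fine-tune GPT-3 using the OpenAI API and this Python code:

```python
import json

import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# Task prompt added to every training example
prompt = "Write a short description of the product: "

# Convert "product name, description" lines into JSONL prompt/completion pairs.
# maxsplit=1 keeps the commas inside each description intact; the separator and
# leading space follow OpenAI's legacy data-formatting recommendations.
with open("product_descriptions.txt", "r") as src, open("product_descriptions.jsonl", "w") as dst:
    for line in src:
        if not line.strip():
            continue  # skip blank lines
        product_name, description = line.strip().split(", ", 1)
        example = {"prompt": f"{prompt}{product_name}\n\n###\n\n", "completion": f" {description}\n"}
        dst.write(json.dumps(example) + "\n")

# Upload the training file and start a fine-tuning job (legacy fine-tunes API, openai<1.0).
# This API fine-tunes base models such as "davinci", not text-davinci-002.
with open("product_descriptions.jsonl", "rb") as f:
    training_file = openai.File.create(file=f, purpose="fine-tune")
job = openai.FineTune.create(training_file=training_file.id, model="davinci")
print(job.id)
```

To conduct accurate sentiment analysis, you need to train GPT-3 on a large number of reviews with sentiment labels. Here is an example of a data format for fine-tuning:

| Review | Sentiment |
|---|---|
| The camera takes great photos, and the battery easily lasts all day. | Positive |
| The screen cracked within a week, and support never replied. | Negative |

Then you can fine-tune GPT-3 on this dataset in the same way:

```python
import json

import openai

# Use your OpenAI API key
openai.api_key = "YOUR_API_KEY_HERE"

# Task prompt added to every training example
prompt = "Classify the sentiment of the following review: "

# Convert "review, sentiment" lines into JSONL prompt/completion pairs.
# rsplit(..., 1) splits on the last comma only, so commas inside a review are kept.
with open("sentiment_analysis.txt", "r") as src, open("sentiment_analysis.jsonl", "w") as dst:
    for line in src:
        if not line.strip():
            continue  # skip blank lines
        review, sentiment = line.strip().rsplit(", ", 1)
        example = {"prompt": f"{prompt}{review}\n\n###\n\n", "completion": f" {sentiment}"}
        dst.write(json.dumps(example) + "\n")

# Upload the training file and start a fine-tuning job on a base GPT-3 model
with open("sentiment_analysis.jsonl", "rb") as f:
    training_file = openai.File.create(file=f, purpose="fine-tune")
job = openai.FineTune.create(training_file=training_file.id, model="davinci")
print(job.id)
```

In both examples, the training data is converted into JSONL prompt/completion pairs, uploaded with openai.File.create(), and used to start a job with openai.FineTune.create(). Fine-tuning runs on OpenAI’s servers and can take a while. You can check a job’s progress with openai.FineTune.retrieve() or cancel it with openai.FineTune.cancel(). Once the job is complete, its fine_tuned_model field contains the name of your new model. You can pass this name as the model parameter of openai.Completion.create() to generate product descriptions or classify reviews in the style of your training data.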

Note that the choice between prompt design and fine-tuning depends not only on the task at hand but also on the available training data. If you need to improve GPT-3’s performance but don’t have enough data, prompt design may be your only option. But if you have a lot of training data, fine-tuning will usually let you achieve more accurate results than prompt design.

Conclusion

GPT-3 is an outstanding NLP model that can quickly generate human-like text that is accurate, context-aware, and highly relevant to the prompt. These capabilities make it suitable for a variety of language processing tasks, from content generation to AI chatbot development.

However, since GPT-3 is a new commercial tool, it has several disadvantages you need to take into account before working with it. First, it can get expensive if you need to generate a lot of text. But most importantly, GPT-3 can’t be deeply customized to suit your needs and still requires human supervision, as it can make mistakes.

At Apriorit, we are excited to use this new tool to enhance AI projects. Our AI and ML development team can help you incorporate GPT-3 into your project in a way that maximizes its benefits and mitigates the downsides.
