With the advent of AI, machine learning, and automation, computer vision becomes all the more relevant. At Apriorit, we build an expertise of working with computer vision as a part of working on a new set of projects involving AI and machine learning.

Now, we want to share our experience, specifically with regards to object detection with OpenCV.

Our objective is to count the number of people who have crossed an abstract line on-screen using computer vision with OpenCV library.

In this article, we will look at two ways to perform object recognition using OpenCV and compare them to each other. Both approaches have their own pros and cons, and we hope that this comparison will help you choose the best one for your task.

Contents:

Object Recognition with Machine Learning Algorithms

The first method for counting people in a video stream is to distinguish each individual object with the help of machine learning algorithms. For this purpose, the HOGDescriptor class has been implemented in OpenCV.

HOG (Histogram of Oriented Gradients) is a feature descriptor used in computer vision and image processing to detect objects. This technique is based on counting occurrences of gradient orientation in localized portions of an image.

HOGDescriptor implements a detector of histogram objects with oriented gradients. When utilizing HOG in object recognition, descriptors are classified based on supervised learning (support vector machines).

A Support Vector Machine, or SVM, is a supervised learning model that includes a set of associated learning algorithms. These algorithms are used for classification purposes. Coefficients calculated based on support vectors serve as the basis for classification. A set of coefficients should be calculated on the basis of training data (an XML file). This file contains complete information on a model/classifier.

Transform your ideas with AI-powered solutions

Apriorit’s custom development services offer the expertise needed to make your business stand out.

OpenCV includes two sets of pre-set nodes for people detection: Daimler People Detector and Default People Detector.

Here’s an example of how HOGDescriptor can be used (function interfaces can be found on the official OpenCV website):

cv::HOGDescriptor hog;

hog.setSVMDetector(cv::HOGDescriptor::getDefaultPeopleDetector());

// for every frame

std::vector<cv::Rect> detected;

hog.detectMultiScale(frame, detected, 0, cv::Size(8, 8), cv::Size(32, 32), 1.05f, 2);Our testing revealed that the effectiveness of recognition varies depending on the angle (top-view, side-view, profile-view). Side-view recognition is more unstable.

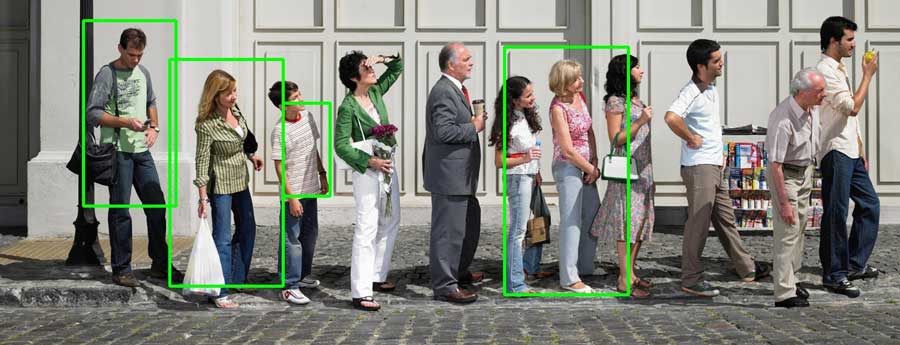

Figure 1. Top View Recognition

Figure 2. Side View Recognition

More accurate results can be obtained by modifying parameters of the detectMultiScale() function. Another advantage of this approach is that due to the learning ability, in some cases recognition can be improved to 90% or higher.

The next step is to recognize object movements. For more precise recognition, an object can be divided into parts (head, upper and lower torso, arms, and legs, for instance). In this way, the object will not be lost if it’s overlapped by another object. Body parts can be identified with the help of the HOGDescriptor, which contains a set of vertices for each body part. However, it has to be applied not to the whole image, but to a certain part with already identified object borders.

The disadvantage of this approach is that the algorithm slows down as the number of image vectors and their resolution increase. However, speed can be improved if all calculations are carried out using the video card’s compute capacity.

Read also

How to Fix Image Distortions Using the OpenCV Library

Ensure your image processing solutions deliver top-notch quality with our guide. Leverage our insights on working with OpenCV to get clearer, more accurate images for your applications.

Recognition of Moving Objects through Background Subtraction Algorithms

The second method for counting people in a video stream, which is more efficient, is based on recognition of moving objects. Considering the background to be static, two sequential frames are compared to identify differences. The simplest way is to compare two frames and generate a third one called a mask:

cv::absdiff(firstFrame, secondFrame, outputMaskFrame);However, as this method does not take into account such aspects as shades, reflections, position of light sources, or other changes to the environment, the mask might be quite inaccurate. For such cases, the following three algorithms for automatic background detection and subtraction are implemented in OpenCV: BackgroundSubtractorMOG, BackgroundSubtractorMOG2, and BackgroundSubtractorGMG.

Here, the BackgroundSubtractorMOG2 method is used as an example. This method uses a Gaussian mixture model to detect and subtract the background from an image:

auto subtractor = cv::createBackgroundSubtractorMOG2(500, 2, false);

//for every frame

subtractor->apply(currentFrame, foregroundFrame);For better recognition, any extra image noise that’s present in a video has to be removed, especially in videos that have been recorded in low light. In some cases, a Gaussian smoothing can be used to slightly blur an image before subtracting its background:

auto subtractor = cv::createBackgroundSubtractorMOG2(500, 2, false);

// for every frame

cv::GaussianBlur(currentFrame, currentFrame, cv::Size(10, 10), 0);

subtractor->apply(currentFrame, foregroundFrame);

cv::threshold(foregroundFrame, threshFrame, 10, 255.0f, CV_THRESH_BINARY);After image noise and background have been subtracted, the image is displayed in black and white, where the background is black and all moving objects are white.

Figure 3. BackgroundSubtractorMOG2 in use

In most cases, moving objects cannot be recognized completely and therefore have some empty space inside. To improve this, a triangle is created to cover the static space of an image. Then a morphological operation is performed to apply the triangle to the image so the empty space is filled:

// for every frame

cv::Mat structuringElement10x10 = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(10, 10));

cv::morphologyEx(threshFrame, maskFrame, cv::MORPH_OPEN, structuringElement10x10);

cv::morphologyEx(maskFrame, maskFrame, cv::MORPH_CLOSE, structuringElement10x10);After that, the contours of all objects have to be identified and the boundaries of rectangles have to be saved to an array:

// for every frame

std::vector<std::vector<cv::Point>> contours;

cv::findContours(maskFrame.clone(), contours, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_NONE);As objects can overlap each other, there are several OpenCV algorithms that identify pixels. One of them is based on the Lucas-Kanade method for optical flow estimation.

cv::TermCriteria termСrit(cv::TermCriteria::COUNT | cv::TermCriteria::EPS, 20, 0.03f);

// for every frame

cv::calcOpticalFlowPyrLK(prevFrame, nextFrame, pointsToTrack, trackPointsNextPosition, status, err, winSize, 3, termСrit, 0, 0.001f);The Lucas-Kanade method estimates the optical flow for a sparse set of functions. OpenCV also provides the Farneback method, which allows searching for a solid optical flow. This method estimates the optical flow for all pixels in the frame.

cv::calcOpticalFlowFarneback(firstFrame, secondFrame, resultFrame, 0.4f, 1, 12, 2, 8, 1.2f, 0);In addition, OpenCV has the following five algorithms for object tracing with automatic detection of the contours of moving objects: MIL, BOOSTING, MEDIANFLOW, TLD, and KCF. All of them are implemented for the cv::Tracker class:

cv::Ptr<cv::Tracker> tracker = cv::Tracker::create("KCF");

tracker->init(frame, trackObjectRect);

// for every frame

tracker->update(frame, trackObjectRect);As a result, each object is boxed in a rectangle. OpenCV traces whether this rectangle crosses an abstract line. In this way, the number of passers-by is counted.

Figure 4. System for Passers-by Counting in Operation

To filter out small objects and to detect nearby moving objects, maximum and minimum width can be defined.

The method described above works best in good lighting and when there’s considerable distance between the camera and an object. At the same time, recognition can be improved by defining object width at a given point on the plane, as sometimes a weakly recognized object can be perceived as multiple objects.

This algorithm can also be used for recognizing other moving objects such as cars.

Related project

Developing an Advanced AI Language Tutor for an Educational Service

Explore our success story of building an advanced language tutor for our client. With the integration of natural conversation capabilities and mistake correction, we managed to create an AI-powered chatbot that enhances interactive learning and scales effectively.

Conclusion

In this article, we provided an OpenCV object detection example using two different approaches: machine learning and background subtraction algorithm. Neither of these methods resolve the issue of how to trace objects that move into invisible sectors. A possible solution might be an algorithm that can predict the trajectory of movement depending on the speed of an object.

To sum up, there are two main methods for counting the number of people in a video stream, each of which has its advantages and disadvantages. In this article, we’ve only provided basic information on these methods, as there’s no one-size-fits-all solution in available open source computer vision libraries.

Take your business to the next level with AI

Our custom AI development services are designed to empower your business and lead the market with smart, scalable solutions.