Key takeaways:

- AI chatbots are powerful tools, but they introduce distinct security risks like prompt injection, data leaks, and supply chain vulnerabilities.

- Secure chatbot development requires proactive practices, including adversarial testing, privacy-focused engineering, and role-based access controls.

- Partnering with experienced cybersecurity and AI development teams is crucial if you want to build a secure AI chatbot that protects users, data, and business systems.

As companies increasingly rely on AI-driven assistants, one crucial question arises: Are AI chatbots truly secure? The answer is not a simple yes or no. While AI chatbots offer immense potential, they also present a unique set of security challenges.

In this article, we explore the most pressing cybersecurity threats AI chatbots face today, what risks they bring to your business, and practical strategies to mitigate these risks from the very beginning of development.

This article will be useful for tech leaders who are looking into secure AI chatbot development or who want to strengthen the security of an existing chatbot.

Contents:

Can AI chatbots be secure by default?

AI chatbots handle vast amounts of sensitive information, from customer requests and employee inquiries to internal business data. These chatbots are deeply integrated into critical business systems, making them a valuable tool.

But are smart chatbots secure by default? While they are supposed to be, as their role expands, so does their appeal to cybercriminals. For example, a recent hack of an AI chatbot forced a delivery company to take down their chatbot feature.

Attackers see AI chatbots as valuable targets for several reasons:

- Access to sensitive data. Many chatbots interact with personal, financial, or business-related information that can be exposed as a result of a security breach.

- Vulnerable AI models. AI-driven chatbots operate on complex models that can be manipulated through adversarial inputs.

- Hard-to-predict vulnerabilities. Many off-the-shelf AI chatbots operate as black-box models, making it difficult to spot and fix security weaknesses before attackers exploit them. Businesses may not realize a chatbot is leaking sensitive data until real damage occurs.

- Evolving AI behavior. Since smart chatbots learn and adapt, detecting suspicious activity isn’t always straightforward like with rule-based systems. Attackers can manipulate AI learning mechanisms to introduce biases or security gaps that traditional monitoring tools might miss.

- Gateway to business infrastructure. AI chatbots can be integrated with databases, APIs, and internal business systems, serving as entry points for deeper network infiltration.

These factors make AI chatbots a prime target for cyber threats, but with the right security measures, they can be effectively protected.

Looking to integrate an AI chatbot into your business?

We develop smart and scalable AI chatbots that enhance customer service, automate workflows, and boost engagement. Let’s create a custom chatbot that’s right for you!

What are the consequences of using unsafe AI chatbots?

Customers and business users increasingly interact with AI-driven solutions, but their trust depends on how secure and reliable these systems are. If a chatbot mishandles sensitive information or is exploited by cybercriminals, it can undermine confidence in your brand.

What are the possible consequences of unaddressed security issues for your AI chatbot?

- Loss of business data and intellectual property with third-party chatbots or those that are used internally within your company

- Financial losses resulting from incident response, non-compliance fines, and recovery efforts

- Reputational damage, as customers may lose trust in your company if their personal data is leaked or if a chatbot provides harmful or misleading responses

- Legal liabilities and compliance risks, since a chatbot security breach could lead to non-compliance with the GDPR, HIPAA, CCPA, PCI-DSS, and more

Building secure AI interactions isn’t just about mitigating risks — it’s about ensuring trust and reliability. A well-secured chatbot not only protects user data and interactions but also reinforces your business’s commitment to security. To effectively prevent potential threats, it’s important to understand the specific risks associated with AI chatbots and how to address them.

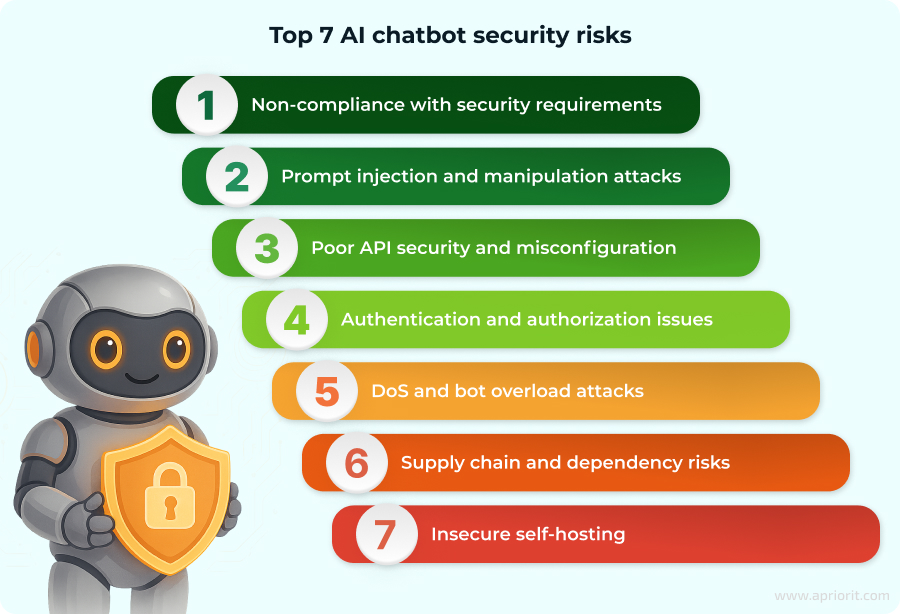

Top 7 critical AI chatbot security risks every business should know

AI chatbots bring efficiency and automation to business operations, but off-the-shelf solutions often come with significant security risks. Here are the most critical security risks that your development team needs to address before implementing a secure AI chatbot into your workflow.

1. Non-compliance with security requirements. AI chatbots handle sensitive user information, including personal data, financial details, and proprietary business insights. Inadequate security measures — such as weak encryption, lack of proper data anonymization, improper access controls, or poor data retention policies — could lead to data exposure.

2. Prompt injection and manipulation attacks. Attackers can manipulate chatbot inputs to bypass security restrictions, extract sensitive information, or alter chatbot behavior. Since many chatbots rely on natural language processing models that lack contextual awareness, they can be easily manipulated through adversarial inputs. For example, carefully crafted prompts can trick AI models into revealing confidential data or executing unauthorized actions.

3. Poor API security and misconfiguration. Many off-the-shelf AI chatbots come with pre-integrated APIs to connect with various external services, such as payment gateways, messaging platforms, and customer support tools. While this built-in connectivity makes deployment easier, it may also introduce additional security risks. Poorly secured or configured third-party APIs can become a direct entry point for attackers.

4. Authentication and authorization issues. Since ready-made chatbot solutions often lack adequate access controls, they are extremely vulnerable to unauthorized modifications. If an attacker gains access to the chatbot’s configuration settings, they can manipulate responses, inject harmful content, or even redirect users to fraudulent sites.

5. DoS and bot overload attacks. Chatbots can become targets for denial-of-service attacks, where malicious actors flood them with excessive requests to degrade performance or cause service outages. Automated bots can continuously query chatbots to consume processing resources, which means downtime or increased operational costs for your business. For cloud-based chatbots, this can lead to significant financial consequences due to unplanned scaling and resource consumption.

6. Supply chain and dependency risks. AI chatbots often depend on third-party models, libraries, and integrations, many of which have their own known and undiscovered vulnerabilities. If a chatbot relies on an outdated or compromised third-party component, attackers can exploit these weaknesses to gain access to the system. Unlike API attacks, which primarily target communication channels, supply chain vulnerabilities compromise the chatbot’s foundational code before it even goes live, as well as its core components and dependencies.

7. Insecure self-hosting. Self-hosting can be a viable option when you have concerns about trusting third-party hosting providers or when you require the highest level of security assurance. However, critical mistakes in a self-hosting configuration can lead to various risks like insider threats, data breaches, downtime, and data loss.

It can be difficult to guarantee that off-the-shelf solutions you integrate into your software have critical security controls to prevent these AI chatbot security risks. However, it’s still possible to develop your own AI chatbot with built-in security, compliance, and access control measures, or to integrate a ready-made chatbot safely. This will reduce risks and give you full control over data protection. Let’s see how your teams can proactively mitigate these threats and build a well-protected AI chatbot.

Read also

How AI Chatbots Can Transform Your ERP System

Looking to make your ERP system smarter? Discover how AI-driven chatbots can automate routine tasks, provide instant access to critical data, and improve workflow efficiency across your organization..

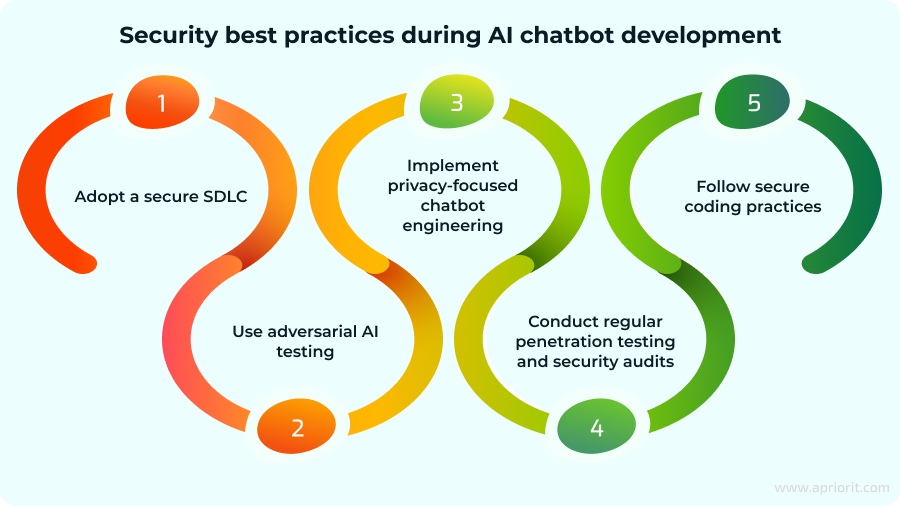

Security best practices during chatbot development

Building a secure smart chatbot requires more than just adding security features. It also demands a privacy-first approach, ethical engineering principles, and efficient development practices. Here are some of the chatbot security best practices that Apriorit developers use to avoid vulnerabilities and ensure compliance of AI chatbots.

Adopt a secure SDLC. Security should be embedded into every phase of chatbot development, from design to deployment. A secure software development lifecycle will help you establish security as a top priority from the outset. This development model can include:

- Threat modeling to identify risks before development begins

- Secure design principles to prevent common AI and software vulnerabilities, like the principle of least privilege

- Security testing integrated into CI/CD pipelines

- Continuous monitoring for real-time threat detection

Use adversarial AI testing. This method can help test the resilience of AI chatbots against more sophisticated attacks. Adversarial AI testing focuses on how an AI chatbot reacts to manipulated inputs and explores potential AI-specific threats. Unlike traditional penetration testing, this method deliberately feeds deceptive, misleading, or harmful data into the chatbot model to assess its robustness.

Implement privacy-focused chatbot engineering. AI chatbots often process sensitive business and customer data in training and after deployment. As we develop privacy-focused chatbots, we also implement:

- Data minimization to collect only the necessary information required for the chatbot’s functionality. Automated data filtering and context-aware data masking can help prevent the storage of personally identifiable information beyond what is strictly necessary.

- End-to-end encryption to protect user interactions and stored data from interception with the help of protocols such as AES-256 for storage and TLS 1.3 for data transmission. Even if encrypted data is intercepted, it remains unreadable to unauthorized entities.

- Anonymization and pseudonymization to reduce the risk of personal data and user identity exposure with the help of irreversible techniques like differential privacy and k-anonymity. Pseudonymization can replace sensitive identifiers with encrypted or tokenized alternatives, minimizing the risk of data breaches.

- Transparent AI models with the help of explainable AI (XAI) techniques to minimize the use of black-box scenarios. These include model interpretability frameworks like LIME and SHAP, which allow for auditing and monitoring of chatbot decisions.

Conduct regular penetration testing and security audits. This proactive approach to security is important for identifying and mitigating vulnerabilities before attackers can exploit them. Since you need to conduct these audits throughout the chatbot’s lifecycle, you can engage third-party security experts who will provide an independent assessment. Security audits help you assess risks related to:

- API security and misconfigurations

- Data leakage and non-compliance risks

- Weak authentication and authorization mechanisms

Red teaming is a continuation of penetration testing, as it involves ethical hacking teams that act as attackers to find weaknesses in a smart chatbot’s design, training data, and operational security. This approach mimics real-world scenarios, such as unauthorized access attempts, data exfiltration techniques, or security bypass methods. Your teams can use both static and dynamic code analysis tools to identify potential vulnerabilities in the codebase.

Follow secure coding practices. These practices are fundamental, as our developers use them for building not only secure chatbots but resilient software in general. You can integrate security into every stage of chatbot development by using well-established methodologies, including those outlined in the NIST Secure Software Development Framework (SSDF) and ISO/IEC 27001.

Here are some of the practices that we constantly use:

- Input validation to block malicious payloads

- Secure API calls with proper authentication and encryption

- Sandbox chatbot environments to limit damage from potential exploits

- Code obfuscation and integrity checks to protect against unauthorized reverse engineering attempts

We also recommend regular updates of AI libraries, frameworks, and dependencies. This helps you mitigate security risks and ensure protection against newly discovered vulnerabilities.

Related project

Building AI Text Processing Modules for a Content Management Platform

Explore the success story of a client who collaborated with us on AI text transformer modules to boost efficiency, ensure scalability, and improve the accuracy of automated text analysis solutions.

How Apriorit can help with AI chatbot security

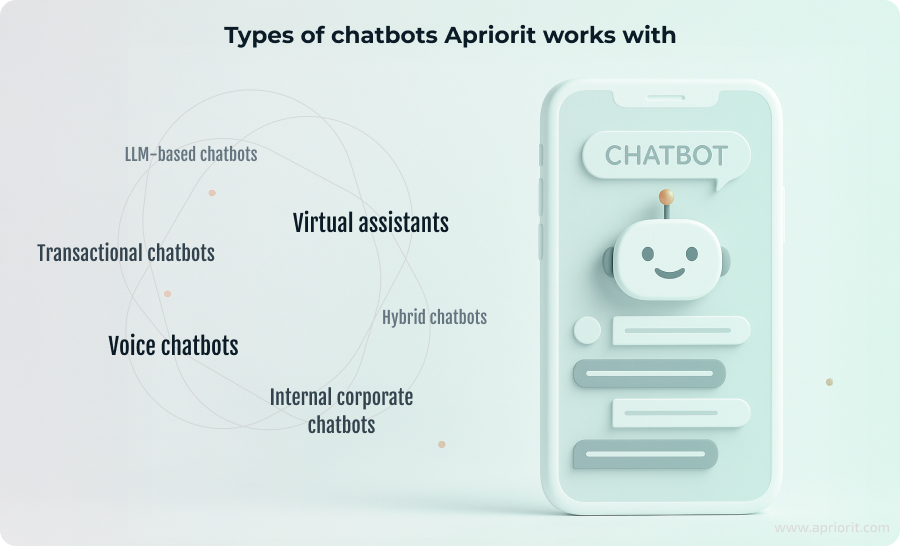

No matter the complexity of your AI chatbot solution, our experts are ready to help you build a secure, reliable, and compliant AI-driven system. Our expertise in AI, chatbot architecture, and cybersecurity allows us to identify and mitigate risks that can potentially arise with your chatbots.

We have extensive experience working with AI-powered solutions and chatbot technologies. Our team can assist you with:

- Developing AI chatbots for industry-specific needs. We helped an eLearning solution provider build an AI-powered language tutor, ensuring secure data processing while delivering personalized learning experiences.

- Improving chatbot efficiency in high-demand environments. Our experts built an AI-powered chatbot for an EV charging network, streamlining customer support while maintaining strong security measures to protect user transactions and system integrity.

- Securing AI chatbot integrations with enterprise systems. We can design and implement a context-aware AI chatbot with advanced data security features, allowing businesses to integrate AI-driven assistants without exposing sensitive information.

- Enhance AI chatbot security against prompt injection attacks. Our experts can implement prompt injection protection for LLM-powered chatbots, guaranteeing secure user interactions and preventing malicious inputs from manipulating AI behavior.

When you partner with Apriorit, you can mitigate cybersecurity risks, protect sensitive business data, and enhance customer trust in AI-driven interactions.

Conclusion

AI chatbots offer lots of value for businesses, as they help improve customer engagement, automate workflows, and streamline operations. At the same time, security remains a critical concern, since vulnerabilities can lead to data breaches, compliance violations, and reputational damage.

Achieving a secure, reliable, and compliant AI chatbot isn’t easy, and it demands specific expertise. Apriorit’s experienced team can help you build a custom chatbot that not only meets your business goals but also keeps your data and systems secure.

Boost efficiency with an AI-powered chatbot!

Let’s work together to develop a chatbot solution that’s both intelligent and resilient against cyber threats.