Key takeaways:

- Fragmented and inconsistent telemetry makes it difficult to correlate data and integrate cybersecurity tools effectively.

- The Open Cybersecurity Schema Framework (OCSF) provides a vendor-agnostic standard for unifying event data across platforms.

- Adopting OCSF improves analytics accuracy, streamlines compliance, and simplifies SIEM, SOAR, and XDR integrations.

- Successful OCSF implementation depends on clear data mapping, modular design, and experienced engineering support.

Today, cybersecurity providers face a growing challenge: data fragmentation.

Whatever product your team is working on — from SIEM and SOAR to endpoint and cloud monitoring platforms — it must operate seamlessly within complex environments that include dozens of other tools and data sources. Yet, these systems often speak different languages when it comes to event data.

The result is fragmented and poorly aligned telemetry that slows down correlation, detection, and integration efforts.

The Open Cybersecurity Schema Framework (OCSF) introduces a way to overcome this fragmentation. OCSF provides a common standard for structuring and exchanging security event data and supports interoperability across products and vendors. Instead of building custom connectors or translation layers, development teams can rely on a shared schema that strengthens the entire security ecosystem.

This article is for cybersecurity product leaders, architects, and data engineers who aim to:

- Standardize telemetry models across platforms to reduce integration complexity

- Unlock more accurate, scalable threat detection and analytics within multi-tool environments

In this article, our experts explain what OCSF is, why the framework matters for cybersecurity vendors, and what practical benefits and challenges it brings. They also show how Apriorit can help you adopt OCSF effectively.

What is the Open Cybersecurity Schema Framework (OCSF)?

In modern cybersecurity ecosystems, every product generates its own event data, often using unique naming conventions, formats, and field structures. This fragmentation makes it difficult for vendors to build integrations and correlate data efficiently across tools. The Open Cybersecurity Schema Framework (OCSF) was created to solve that problem.

OCSF is an open-source, vendor-agnostic data schema designed to bring consistency to how cybersecurity events are represented, stored, and exchanged. It was initially developed by AWS and Splunk and is now supported by a growing number of companies.

At its core, OCSF defines a structured and extensible event taxonomy that can be applied to data coming from any of these sources:

- Networks

- Endpoints

- Identities

- Cloud platforms

Instead of inventing a new format for every integration, vendors can rely on the OCSF format and its shared vocabulary to describe events in a consistent way.

OCSF isn’t the first attempt to standardize cybersecurity data exchange. Frameworks like STIX, OpenDXL, and Elastic Common Schema (ECS) are also designed to improve interoperability. However, OCSF stands out due to its simplicity, extensibility, and neutral governance. It focuses purely on data representation, leaving transport and orchestration to other tools. This, in turn, makes it easier for teams to integrate OCSF into existing products without major redesigns.

Core components of OCSF

Though the framework is still evolving, OCSF’s current architecture revolves around three main components:

- Taxonomy is the hierarchical structure that organizes cybersecurity events into categories and classes.

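- Data types and objects define how individual fields are formatted and related. Data types cover primitive values such as strings, timestamps, and IP addresses, while objects group related attributes into reusable entities such as user, process, and file.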

- Attribute dictionary is a centralized reference that describes all attributes used in the schema, such as their meaning, data type, and expected usage.

Together, these elements create the backbone of OCSF. Vendors can extend the schema to cover proprietary or domain-specific data while maintaining alignment with the shared standard.
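To make the taxonomy concrete, here is a minimal Python sketch of a single process-launch event shaped the OCSF way. It assumes the OCSF 1.x identifiers for the System Activity category (category_uid 1) and the Process Activity class (class_uid 1007, activity_id 1 for Launch), plus the schema rule that type_uid equals class_uid * 100 + activity_id. The product name, vendor name, and schema version in `metadata` are placeholders, and the attribute set is deliberately trimmed rather than complete; verify every identifier against the schema version you target.

```python
from datetime import datetime, timezone

# Illustrative slice of the OCSF 1.x taxonomy:
# category_uid -> name, and class_uid -> (name, category_uid).
CATEGORIES = {1: "System Activity", 3: "Identity & Access Management", 4: "Network Activity"}
CLASSES = {
    1007: ("Process Activity", 1),
    3002: ("Authentication", 3),
    4001: ("Network Activity", 4),
}


def type_uid(class_uid: int, activity_id: int) -> int:
    """OCSF derives the event type from the class and activity: class_uid * 100 + activity_id."""
    return class_uid * 100 + activity_id


def process_launch_event(pid: int, cmd_line: str, user: str) -> dict:
    """Build a trimmed Process Activity event with activity_id 1 (Launch)."""
    class_uid, activity_id = 1007, 1
    _, category_uid = CLASSES[class_uid]
    return {
        "category_uid": category_uid,
        "class_uid": class_uid,
        "activity_id": activity_id,
        "type_uid": type_uid(class_uid, activity_id),
        "severity_id": 1,  # 1 = Informational
        "time": int(datetime.now(timezone.utc).timestamp() * 1000),  # epoch milliseconds
        "metadata": {
            "version": "1.1.0",  # placeholder: the OCSF version you target
            "product": {"name": "ExampleAgent", "vendor_name": "ExampleVendor"},  # placeholders
        },
        "process": {"pid": pid, "cmd_line": cmd_line},  # the launched process
        "actor": {"user": {"name": user}},              # who launched it
    }


event = process_launch_event(4242, "notepad.exe report.txt", "alice")
print(CATEGORIES[event["category_uid"]], CLASSES[event["class_uid"]][0], event["type_uid"])
# prints: System Activity Process Activity 100701
```

Because category, class, and activity are numeric identifiers from a shared dictionary, any consumer can interpret the event without knowing which product produced it.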

OCSF defines how cybersecurity data should be structured, but its real value can be seen when it’s applied in practice. Let’s take a look at why OCSF has become strategically important for building modern data-driven products.

Why OCSF matters for cybersecurity vendors

You should view adopting OCSF as a strategic move if you want to stay relevant in an increasingly connected security ecosystem. OCSF on its own doesn’t replace your core detection logic or unique value proposition. Instead, it defines how your data interacts with the rest of the ecosystem, and that interaction is now critical for product success.

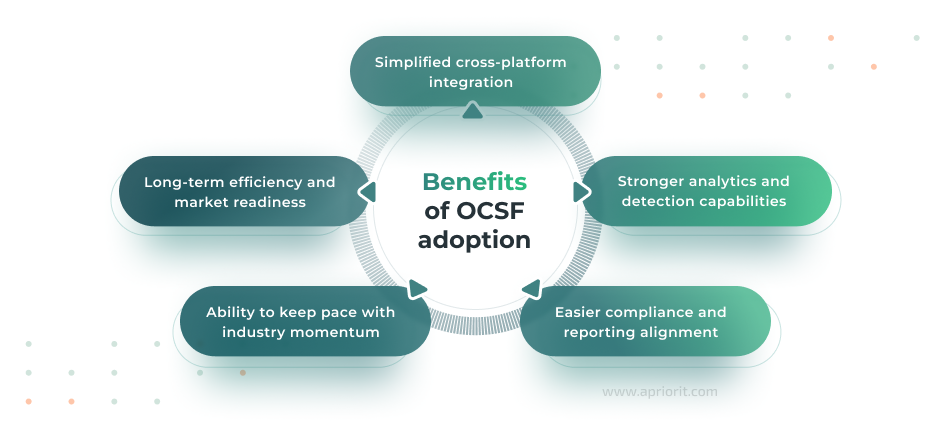

Let’s explore each benefit in detail.

- Simplified cross-platform integration

Every cybersecurity product today must integrate with dozens of others, including SIEM, SOAR, XDR, and analytics tools. Each integration typically requires custom mapping, parsers, and maintenance effort. By aligning your event structures with OCSF, you can significantly simplify integration work and reduce engineering friction when onboarding new partners or enterprise customers.

As a result, you can position your products as OCSF-ready, improve compatibility across multiple platforms, and make it easier for clients to plug your products into existing security stacks.

OCSF also acts as a shared data language that enables smooth interoperability between tools such as endpoint agents, network monitoring services, and threat intelligence platforms without requiring custom-built connectors.

- Stronger analytics and detection capabilities

OCSF helps you unlock more value from your data. Unified event formats make it easier to build and train machine learning models for threat detection, correlate events from different telemetry sources, and share enriched findings between systems. This means less time spent cleaning and transforming data and more time spent improving detection accuracy and response speed.

With normalized telemetry across cloud, endpoint, and network sources, your detection logic gains contextual depth, which can reduce false positives and shorten investigation cycles. For those who build AI- or analytics-driven solutions, adopting OCSF can significantly reduce the complexity of developing cross-domain detection logic.
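As an illustration of cross-source correlation, the sketch below groups already-normalized events into a per-user timeline. It assumes events from an identity provider arrive as OCSF Authentication events (class_uid 3002, user in `user.name`) and events from an endpoint agent as Process Activity events (class_uid 1007, user in `actor.user.name`). These paths are fixed by the schema rather than by each vendor, so one small lookup table serves every source that emits the same classes. The sample events and product names are hypothetical.

```python
from collections import defaultdict

# Where the relevant user lives in each OCSF class (illustrative subset).
USER_PATH = {
    1007: ("actor", "user", "name"),  # Process Activity: user behind the acting process
    3002: ("user", "name"),           # Authentication: user being authenticated
}


def get_path(event: dict, path: tuple):
    """Follow nested keys; return None if any step is missing."""
    cur = event
    for key in path:
        if not isinstance(cur, dict) or key not in cur:
            return None
        cur = cur[key]
    return cur


def group_by_user(events: list) -> dict:
    """Build a time-ordered, per-user timeline across every source that emits a known class."""
    timeline = defaultdict(list)
    for ev in events:
        path = USER_PATH.get(ev.get("class_uid"))
        user = get_path(ev, path) if path else None
        if user is not None:
            timeline[user].append(ev)
    for evs in timeline.values():
        evs.sort(key=lambda e: e["time"])
    return dict(timeline)


events = [
    {"class_uid": 1007, "time": 2000, "actor": {"user": {"name": "alice"}},
     "metadata": {"product": {"name": "ExampleEDR"}}},
    {"class_uid": 3002, "time": 1000, "user": {"name": "alice"},
     "metadata": {"product": {"name": "ExampleIdP"}}},
]
timeline = group_by_user(events)
```

Here `timeline["alice"]` holds the logon from the identity provider followed by the process launch from the endpoint agent, without any vendor-specific parsing.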

- Easier compliance and reporting

Many security products need to map their event data to compliance frameworks like the NIST Cybersecurity Framework (CSF), ISO 27001, or SOC 2. With OCSF, you will benefit from a consistent data model that simplifies mapping between schema fields and compliance reporting templates.

While this doesn’t eliminate compliance work, it provides a stable and well-structured foundation that simplifies auditing, validation, and customer reporting. OCSF also simplifies the creation of long-term security data stores (such as data lakes) by enforcing a consistent schema that makes historical and real-time datasets easier to analyze and validate for compliance.

- Keeping pace with industry momentum

OCSF may not be mandatory just yet, but it’s gaining traction across the industry. Since its launch, OCSF has received strong backing from key industry vendors, including AWS, Splunk, IBM, and Palo Alto Networks. This growing participation suggests that OCSF is quickly becoming a de facto standard for cross-platform interoperability.

For product vendors, this means future integrations and partnerships will increasingly expect support for the OCSF model. By joining this ecosystem early, you can position yourself as a collaborative and forward-looking partner who is capable of integrating seamlessly with the modern cybersecurity stack.

- Long-term efficiency and market readiness

For mature products, migrating to OCSF isn’t about immediate cost reduction but about strategic alignment. OCSF-ready products are likely to have faster sales cycles and fewer integration blockers.

When used as the foundation for security data lakes and enterprise-scale analytics pipelines, OCSF ensures that telemetry grows predictably and stays compatible over time without repeated refactoring.

In other words, OCSF helps your products stay compatible. It also makes them more competitive and more appealing for enterprise clients who operate hybrid security ecosystems. However, gaining all these benefits requires careful planning and technical expertise. In the next section, we explore the most common challenges your team may face during implementation and analyze how they can affect your product’s development.

Key challenges in adopting OCSF

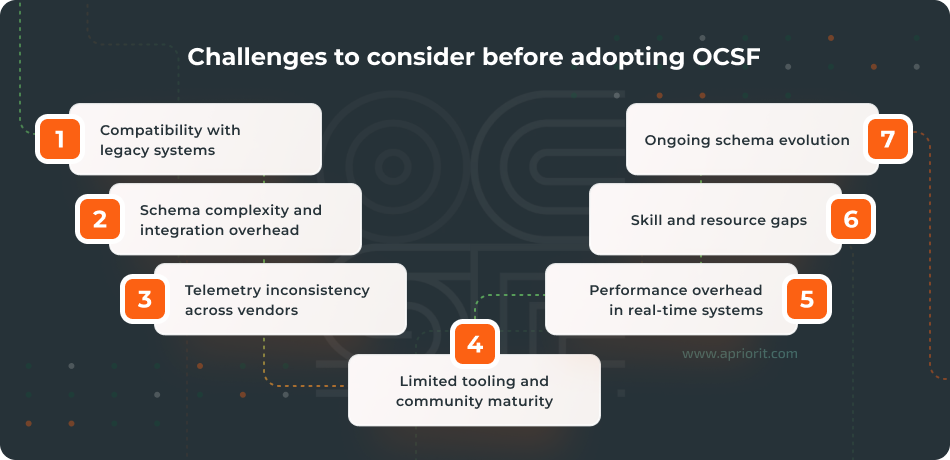

Adoption of OCSF is rarely a plug-and-play process. Implementing OCSF might expose architectural, operational, and organizational challenges that require careful planning to overcome. Let’s take a look at some of the most common issues that we encounter during real-world implementations.

- Compatibility with legacy systems. Many established cybersecurity products rely on proprietary data formats, tightly coupled parsing logic, and legacy ingestion pipelines. Introducing OCSF means rethinking how events are classified and stored, which can disrupt existing workflows or integrations.

For vendors with mature products, a full schema migration might be impractical. This, in turn, leads to the need for hybrid data models or intermediate mapping layers. Without such planning, teams risk creating parallel data flows that increase maintenance effort and fragment analytics logic instead of simplifying it.

- Schema complexity and integration overhead. Although OCSF is designed to simplify event standardization, its hierarchical taxonomy and strict naming conventions can be challenging to integrate into diverse, pre-existing data architectures. Teams must carefully decide how to fit product-specific telemetry into predefined categories without losing critical context or meaning.

In practice, this challenge manifests itself during implementation, when developers discover that mapping real-world product data to OCSF classes involves many subtle choices. Even small misalignments in schema mapping can ripple through detection pipelines, leading to unreliable insights and additional maintenance overhead.
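One of those subtle choices is what to do with source values that have no exact OCSF equivalent. The sketch below maps a hypothetical legacy process record to the Process Activity class. It falls back to activity_id 99 (Other) and keeps the source value in `activity_name`, and it moves fields with no OCSF home into the `unmapped` object instead of dropping them. The legacy field names (`action`, `pid`, `exe`, `tenant`) and the action vocabulary are assumptions for illustration.

```python
# Hypothetical legacy action strings mapped to OCSF Process Activity activity_id values.
ACTION_TO_ACTIVITY = {"spawn": 1, "exit": 2, "open": 3, "inject": 4}  # Launch, Terminate, Open, Inject
OTHER = 99                                 # OCSF "Other" enum value
MAPPED_FIELDS = {"action", "pid", "exe"}   # legacy fields this mapper knows how to place


def map_legacy_process_event(rec: dict) -> dict:
    action = rec.get("action", "")
    activity_id = ACTION_TO_ACTIVITY.get(action, OTHER)
    event = {
        "class_uid": 1007,
        "category_uid": 1,
        "activity_id": activity_id,
        "type_uid": 1007 * 100 + activity_id,
        "process": {"pid": rec.get("pid"), "file": {"path": rec.get("exe")}},
    }
    if activity_id == OTHER:
        # Keep the source value instead of collapsing it into a bare "Other".
        event["activity_name"] = action
    leftovers = {k: v for k, v in rec.items() if k not in MAPPED_FIELDS}
    if leftovers:
        # Context without an OCSF attribute is preserved, not silently dropped.
        event["unmapped"] = leftovers
    return event
```

For example, a record whose `action` is `"suspend"` comes out with activity_id 99, `activity_name` set to `"suspend"`, and any `tenant` field preserved under `unmapped`.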

- Inconsistent telemetry across vendors. Even within the OCSF ecosystem, different vendors may interpret schema fields differently or capture events at varying levels of granularity. For example, one vendor’s process creation event may contain far more context than another’s, even though both conform to the OCSF class definition.

These inconsistencies make cross-vendor analytics and normalization less reliable. They can also limit the effectiveness of unified threat detection models, especially in multi-source SIEM or XDR environments.

- Limited tooling and community maturity. Even though the OCSF initiative is gaining popularity, the ecosystem of supporting tools, documentation, and validation frameworks is still relatively young. Compared to mature standards such as STIX or ECS, OCSF lacks a wide selection of open-source converters, testing utilities, and schema validation pipelines.

This means your teams often have to build internal tooling or rely on minimal documentation, which increases adoption time and the risk of implementation errors.

- Performance overhead in real-time systems. Mapping and validating high-throughput telemetry against OCSF adds computational overhead, especially for real-time analytics engines like XDR, UEBA, or network monitoring solutions.

If the schema validation step isn’t optimized, it can introduce latency in data ingestion and event correlation, impacting detection speed and user experience.
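A common way to keep validation off the critical path is to split it into two parts: a cheap, precomputed presence check on every event, and full schema validation on a sample. The sketch below assumes hypothetical per-class sets of required attributes built once at startup. These are an illustrative subset, not the authoritative lists. It also takes the full validator as a function you supply, for example one backed by your schema tooling.

```python
import random
from typing import Callable

# Required top-level attributes per class, computed once at startup.
# Illustrative subset only: derive the real sets from the schema version you target.
BASE_REQUIRED = frozenset(
    {"activity_id", "category_uid", "class_uid", "metadata", "severity_id", "time", "type_uid"}
)
REQUIRED_BY_CLASS = {
    1007: BASE_REQUIRED | {"actor", "process"},  # Process Activity
    3002: BASE_REQUIRED | {"user"},              # Authentication
}


def fast_check(event: dict) -> bool:
    """Cheap presence check intended to run on every event."""
    required = REQUIRED_BY_CLASS.get(event.get("class_uid"))
    return required is not None and required.issubset(event.keys())


class Ingestor:
    def __init__(self, full_validate: Callable[[dict], bool], sample_rate: float = 0.01,
                 rng: Callable[[], float] = random.random):
        self.full_validate = full_validate  # expensive schema validation you supply
        self.sample_rate = sample_rate      # fraction of accepted events to validate fully
        self.rng = rng
        self.rejected = 0
        self.sampled_failures = 0

    def accept(self, event: dict) -> bool:
        if not fast_check(event):
            self.rejected += 1
            return False
        if self.rng() < self.sample_rate and not self.full_validate(event):
            self.sampled_failures += 1  # report to monitoring; the event still flows downstream
        return True
```

The design trades completeness for latency: malformed events are caught immediately by the presence check, while subtler schema violations surface as a failure rate on the sampled path rather than blocking ingestion.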

- Skill and resource gaps. Since OCSF is still relatively new, engineers with direct experience in its architecture and implementation are rare. Organizations can also underestimate the learning curve, as OCSF requires specialized expertise:

- Understanding event hierarchies

- Defining mapping templates

- Maintaining internal documentation

Without a dedicated internal governance model, teams risk inconsistent schema use across components, which ultimately negates the benefits of standardization.

- Ongoing schema evolution. As it evolves, OCSF introduces new classes, updated attributes, and restructured relationships. On the one hand, this growth ensures that the framework remains relevant. On the other hand, it also means that vendors must continually track and adapt to new versions to stay compatible with partners.

Neglecting this can lead to version drift, where integrations begin to break or produce inconsistent results. Maintaining forward compatibility requires process discipline and often dedicated resources for monitoring schema updates.
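Drift monitoring can start with something as small as comparing the schema version each producer declares in `metadata.version` with the version your consumers were tested against. This sketch assumes versions follow a major.minor.patch pattern with an optional pre-release suffix, and it treats a major-version mismatch as incompatible. The status labels and the producer names are illustrative policy choices, not OCSF rules.

```python
def parse_version(v: str) -> tuple:
    """'1.3.0' -> (1, 3, 0); a pre-release suffix such as '-dev' is ignored."""
    core = v.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def drift_status(declared: str, tested: str) -> str:
    """Compare a producer's metadata.version with the version consumers were tested against."""
    d, t = parse_version(declared), parse_version(tested)
    if d[0] != t[0]:
        return "incompatible"  # different major version
    if d[:2] > t[:2]:
        return "ahead"         # producer emits a newer minor version than consumers know
    if d[:2] < t[:2]:
        return "behind"        # producer lags; newer attributes will be missing
    return "current"


# Hypothetical producers and their declared schema versions.
for producer, version in {"edr": "1.1.0", "idp": "1.3.0", "nta": "1.4.0-dev"}.items():
    print(producer, drift_status(version, tested="1.3.0"))
```

Running such a check on a schedule, and alerting on anything other than `current`, turns version drift from a surprise into a tracked work item.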

Recognizing these challenges early allows your teams to plan realistic roadmaps, set proper expectations, and avoid costly rework later.

How to implement OCSF the right way: Apriorit’s expertise

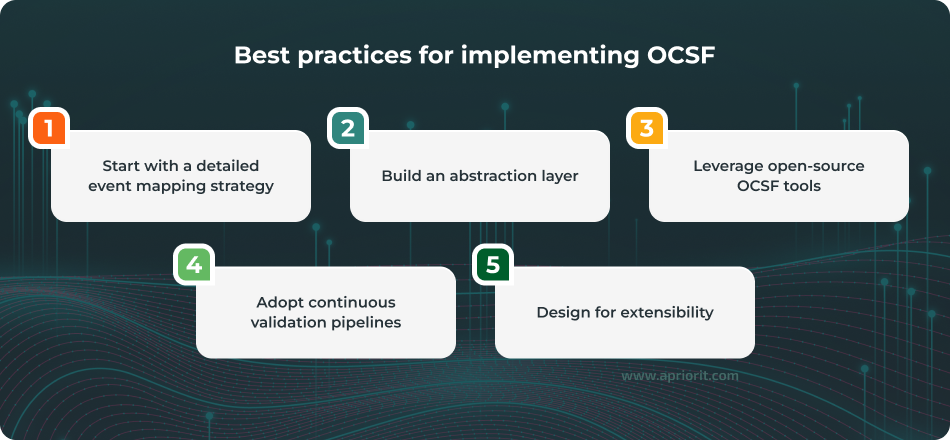

With correct OCSF implementation, you can reduce adoption risks and make sure that data quality is consistent across various components. Below, we give you five actionable insights based on our previous experience implementing OCSF when delivering advanced cybersecurity products:

- Start with a detailed event mapping strategy

Before writing a single line of code, your teams need to analyze how the existing event structure aligns with OCSF categories. To do this, you need to create a mapping document that shows the following:

- Which current event types already match OCSF classes

- Where you have mismatches or missing fields

- Which mappings are most critical for analytics and compliance

Why this matters: This baseline lets you identify gaps early and plan incremental adoption, starting with the most business-critical events. It also prevents loss of context during normalization.
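The mapping document can also live as data next to the code, which makes it easy to sort and report on. Below is a minimal Python sketch with hypothetical internal event names, missing fields, and priorities. The class_uid values 1007 (Process Activity) and 3002 (Authentication) are OCSF 1.x identifiers.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MappingEntry:
    internal_event: str                       # event type name in your own telemetry
    ocsf_class_uid: Optional[int]             # None means no suitable OCSF class was found
    missing_fields: List[str] = field(default_factory=list)  # internal fields with no OCSF home yet
    priority: int = 3                         # 1 = critical for analytics or compliance

    @property
    def status(self) -> str:
        if self.ocsf_class_uid is None:
            return "no match"
        return "partial" if self.missing_fields else "match"


STATUS_ORDER = {"match": 0, "partial": 1, "no match": 2}


def adoption_plan(entries: List[MappingEntry]) -> List[MappingEntry]:
    """Most critical events first; within a priority, ready-to-map events before gaps."""
    return sorted(entries, key=lambda e: (e.priority, STATUS_ORDER[e.status]))


def gap_report(entries: List[MappingEntry]) -> List[str]:
    """List every entry that still needs design work, in document order."""
    return [f"{e.internal_event}: {e.status} {e.missing_fields}" for e in entries if e.status != "match"]


mapping = [
    MappingEntry("usb_insert", None, priority=2),
    MappingEntry("proc_start", 1007, priority=1),
    MappingEntry("user_login", 3002, ["risk_label"], priority=1),
]
for entry in adoption_plan(mapping):
    print(entry.internal_event, entry.status)
```

With this sample, the plan starts with `proc_start` (a full match), continues with `user_login` (a partial match), and leaves `usb_insert` for later because it has no matching class yet.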

- Build an abstraction layer between your data model and OCSF

Instead of hardcoding OCSF schemas directly into your product logic, you might want your team to introduce an intermediate abstraction layer. It will handle the transformation between your internal telemetry and the OCSF schema. Here is what an abstraction layer can bring:

- Simplified updates when the schema evolves

- Partial adoption, where you start with specific event classes and expand later

- More resilience to OCSF version changes

Why this matters: OCSF’s growth brings long-term relevance but also requires vendors to track and adapt to schema changes. A well-designed abstraction layer isolates your core logic from these updates.
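Here is a minimal sketch of such a layer, assuming a hypothetical internal event format with a `type` field. Mappers are registered per internal event type, and unmigrated types return `None` so they can keep flowing in the legacy format. The target schema version is set in one place. The class and method names (`OcsfAdapter`, `register`, `to_ocsf`) are illustrative and not part of any OCSF library.

```python
from typing import Callable, Dict, Optional

Mapper = Callable[[dict], dict]


class OcsfAdapter:
    """Translates internal events to OCSF so core product logic never builds OCSF structures."""

    def __init__(self, schema_version: str):
        self.schema_version = schema_version  # single place to change when the target version moves
        self._mappers: Dict[str, Mapper] = {}

    def register(self, internal_type: str) -> Callable[[Mapper], Mapper]:
        """Decorator that registers a mapper for one internal event type."""
        def decorator(fn: Mapper) -> Mapper:
            self._mappers[internal_type] = fn
            return fn
        return decorator

    def to_ocsf(self, internal_event: dict) -> Optional[dict]:
        mapper = self._mappers.get(internal_event.get("type"))
        if mapper is None:
            return None  # type not migrated yet: keep emitting the legacy format
        ocsf = mapper(internal_event)
        ocsf.setdefault("metadata", {})["version"] = self.schema_version
        return ocsf


adapter = OcsfAdapter(schema_version="1.1.0")  # placeholder version


@adapter.register("login")
def map_login(ev: dict) -> dict:
    # Authentication (class_uid 3002), activity_id 1 = Logon
    return {"class_uid": 3002, "category_uid": 3, "activity_id": 1, "type_uid": 300201,
            "time": ev["ts"], "user": {"name": ev["username"]}}
```

Adopting another event class means registering one more mapper, and a schema upgrade touches only the mappers and the version argument, not the detection or storage code that produces internal events.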

- Leverage open-source OCSF tools

All official OCSF tools are free and open-source, making it easy to:

- Install them locally without waiting for customer-side licensing

- Quickly onboard new developers

- Validate event compliance during development

Why this matters: In our experience, open tooling significantly streamlines collaboration. Developers of different components can easily join schema discussions and reviews, since they only have to understand the OCSF layer and not the entire proprietary framework behind it.

- Adopt continuous validation pipelines

Integrating OCSF validation into your CI/CD process lets you set up automated checks that verify event data follows the expected schema and remains compatible with new releases. These pipelines can also detect version drift (when your implementation lags behind the latest schema) and alert your team before integrations start breaking.

These checks will also verify:

- Schema compliance and field naming

- Consistency of event structures across modules

- Backward compatibility after schema updates

Why this matters: Continuous validation helps you detect mapping errors early and avoid analytics issues or integration regressions after release. It’s a proactive way to maintain forward compatibility as OCSF continues to evolve.
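Checks like these can be expressed as plain functions that a test runner such as pytest calls on sample events. This sketch covers snake_case attribute naming, class-to-category consistency, and the type_uid rule (class_uid * 100 + activity_id). The class table is an illustrative subset, and the sample event is hypothetical. A real pipeline would typically also run full schema validation with the official OCSF tooling.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9_]*$")
# Illustrative subset of OCSF 1.x class_uid -> category_uid.
CLASS_CATEGORY = {1007: 1, 3002: 3, 4001: 4}


def check_event(event: dict) -> list:
    """Return a list of problems; an empty list means the event passed these checks."""
    problems = []

    def walk(obj, path):
        if isinstance(obj, dict):
            for key, value in obj.items():
                full = f"{path}.{key}" if path else key
                if not SNAKE_CASE.match(key):
                    problems.append(f"non-snake_case attribute: {full}")
                if full != "unmapped":  # vendor leftovers keep their original names
                    walk(value, full)
        elif isinstance(obj, list):
            for item in obj:
                walk(item, path)

    walk(event, "")
    cls, act = event.get("class_uid"), event.get("activity_id")
    if cls not in CLASS_CATEGORY:
        problems.append(f"unknown class_uid: {cls}")
    elif event.get("category_uid") != CLASS_CATEGORY[cls]:
        problems.append("category_uid does not match class_uid")
    if isinstance(cls, int) and isinstance(act, int) and event.get("type_uid") != cls * 100 + act:
        problems.append("type_uid is not class_uid * 100 + activity_id")
    return problems


SAMPLE_EVENT = {
    "class_uid": 3002, "category_uid": 3, "activity_id": 1, "type_uid": 300201,
    "user": {"name": "alice"}, "unmapped": {"LegacyField": "kept as-is"},
}


def test_sample_event_conforms():
    assert check_event(SAMPLE_EVENT) == []
```

Keeping a small corpus of recorded sample events per class and running them through such checks on every build catches mapping regressions before release.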

- Design for extensibility

Even if your initial implementation covers only a subset of events, you need to plan your OCSF integration to support future growth.

Why this matters: A flexible design allows your product to evolve with customer requirements and new data types without major refactoring.

Example from Apriorit’s practice: Our team extended an existing OCSF schema with a new device event category, which required handling nested relationships like device → volume → volume event and complex cases like paired connect/disconnect events. Thanks to clear internal documentation, this feature was implemented and reviewed within a few days.
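To illustrate the paired-event case in general terms (this is not the code from that project), here is a sketch that pairs connect and disconnect events per device into sessions. It assumes a hypothetical flat event shape with `device_id`, `action`, and `time` fields. Devices that are still connected stay as open sessions, and disconnects with no matching connect are reported separately.

```python
from typing import Dict, List, Tuple


def pair_sessions(events: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Pair connect/disconnect events per device; return (sessions, orphaned disconnects)."""
    open_connects: Dict[str, dict] = {}
    sessions: List[dict] = []
    orphans: List[dict] = []
    for ev in sorted(events, key=lambda e: e["time"]):
        dev = ev["device_id"]
        if ev["action"] == "connect":
            open_connects[dev] = ev  # a repeated connect replaces the earlier, unclosed one
        elif ev["action"] == "disconnect":
            start = open_connects.pop(dev, None)
            if start is None:
                orphans.append(ev)  # disconnect without a known connect
            else:
                sessions.append({"device_id": dev, "start": start["time"], "end": ev["time"],
                                 "duration": ev["time"] - start["time"]})
    # Devices still connected at the end of the batch become open sessions.
    for dev, start in open_connects.items():
        sessions.append({"device_id": dev, "start": start["time"], "end": None, "duration": None})
    return sessions, orphans
```

Handling orphans and open sessions explicitly matters in streaming pipelines, where a connect and its disconnect can land in different batches.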

These best practices show what it takes to integrate OCSF successfully. Yet, many companies prefer to rely on an experienced technology partner to accelerate this process and avoid costly missteps. Let’s see how Apriorit can support your team at every stage of OCSF implementation.

How Apriorit can help with OCSF adoption

Implementing OCSF requires more than just technical adoption. It demands a deep understanding of telemetry structures, data flow optimization, and cybersecurity context. This expertise can be difficult to develop and maintain in-house, but you can easily find it at Apriorit. We help product vendors overcome major implementation challenges by combining hands-on experience with strategic architecture design.

Our team has delivered projects involving complex data normalization, real-time analytics, and cross-platform integrations — all essential for a successful OCSF rollout. Apriorit experts have:

- Top expertise in cybersecurity product development, from building and integrating SIEM, SOAR, XDR, and UEBA solutions to creating interoperable architectures that align with OCSF principles

- Proficiency in data engineering and schema design to ensure smooth mapping between your proprietary telemetry and OCSF taxonomies without losing valuable context

- A strong background in AI-driven analytics and automation, allowing us to leverage standardized data for smarter detection, response, and predictive insights

- In-depth knowledge of security frameworks like NIST, OWASP, and CIS to make sure your implementation supports both technical efficiency and compliance requirements

- Experience with designing scalable and future-ready data architectures, empowering your teams to integrate OCSF while maintaining flexibility to meet evolving standards

Together, we can make OCSF adoption seamless, efficient, and aligned with your business goals.

Conclusion

As cybersecurity solutions become more interconnected, adopting OCSF is increasingly essential for maintaining interoperability and keeping pace with industry leaders. It enables vendors to unify telemetry, simplify integrations, and deliver more consistent analytics across platforms.

With deep expertise in cybersecurity engineering, data management, and AI-driven analytics, Apriorit can help you adopt OCSF efficiently. We can help you achieve smooth schema migration, optimized data pipelines, and scalable architectures that empower you to build future-ready security solutions.

FAQ

How does OCSF differ from standards like STIX, OpenDXL, and ECS?

OCSF focuses on normalizing event data. STIX is designed for sharing threat intelligence, and OpenDXL for message exchange between security tools. Elastic Common Schema (ECS) is tied to a specific product ecosystem, whereas OCSF is vendor-agnostic and aims to unify telemetry formats across tools and vendors, not just within one platform.

How can legacy systems adopt OCSF gradually?

OCSF adoption doesn’t need to happen all at once. Many vendors start by mapping the most critical event types to OCSF while maintaining existing formats for less-used data. Over time, they can build translation layers or extend internal data models to achieve full compliance without disrupting production workflows.

Is OCSF suitable for ML-based anomaly detection?

Yes. OCSF’s consistent schema simplifies feature extraction and correlation across diverse telemetry sources. When data from multiple tools follows the same structure, machine learning models need less preprocessing and can detect anomalies more accurately.

What tools support OCSF validation or conversion?

The OCSF community provides open-source validation scripts and mapping templates. Also, some vendors are integrating OCSF conversion utilities directly into their SIEM or data pipeline products.

How can cybersecurity vendors maintain compatibility as the schema evolves?

OCSF is versioned, and new releases usually preserve backward compatibility. To stay current, organizations can:

- Track schema releases

- Integrate schema validation into CI/CD pipelines

- Design data pipelines with modular field mappings

Together, these practices make it easier to update your mappings when the specification changes.