Maintaining high database performance is crucial for ensuring the efficiency and scalability of business operations. Writing well-structured SQL code and following database normalization principles are the foundation of quality data management, but these practices don’t always guarantee optimal database performance.

As databases grow in size and complexity, with increasing numbers of tables and relationships and increasing data volumes, even the most carefully normalized data structures can experience performance bottlenecks. In this article, we explore practical strategies to enhance the performance of normalized databases. By understanding potential pitfalls and learning how to navigate them, you can ensure that your database infrastructure not only supports but accelerates the accomplishment of your business goals.

This article will be useful for technical leaders who are responsible for data management in their organizations and are looking for techniques to improve database performance.

Contents:

Pros and cons of database normalization

Normalization is a widely accepted approach to organizing data in a relational database using tables, relationships, and attributes. This process helps database designers to, for example, avoid duplicating records or ensure that a table contains only relevant data.

Database normalization allows you to achieve several goals:

- Efficiently organize data

- Reduce the load on database servers

- Decrease the memory footprint

- Increase the speed of SQL query execution

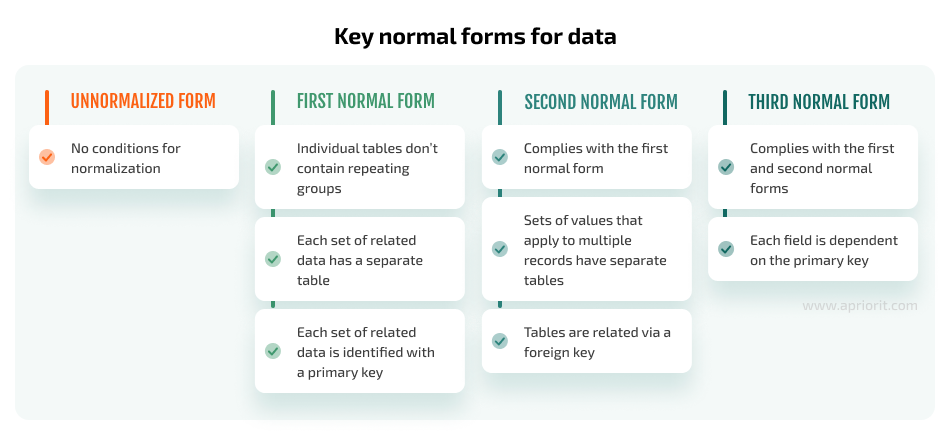

Database designers and project architects enforce normalization by adopting normal forms — rules for organizing data in tables. Designers choose a normalization form for a database based on the project’s requirements. There are many normal forms you can consecutively adopt, but most relational databases don’t go beyond the third normal form. By the time a database reaches this form, it doesn’t contain redundancies, stores data in a simple and decomposed manner, and ensures data atomicity. You can expect smooth and reliable performance from such a database.

A normalized database structure may even be undesirable for a small application and only add complexity. However, projects that deal with high volumes of data can’t operate efficiently without some level of normalization.

While normalization is generally a beneficial practice, there are situations where strict adherence to normalization rules may not be the best choice for a particular project or specific functionality. Here are a few reasons why following database normalization rules might be undesirable or even harmful:

- Performance issues. Sometimes you need to join normalized tables to retrieve distributed records. Excessive joins can impact performance, especially in projects that require many quick Read operations. Additionally, you need complex queries to get distributed data, and it can be challenging to optimize such queries. When data is distributed across multiple databases, the situation becomes even more complicated. Such database systems require a mechanism for maintaining data consistency, which can affect query processing speed — for instance, due to network latency.

- Complicated data modification. In normalized databases, instructions for adding, modifying, or deleting data can be very complex because they require changes across multiple tables. Such instructions not only complicate SQL scripts for changes but also increase the risk of deadlocks, when several queries try to lock on to the same resource and permanently block each other. You’ll need an experienced database specialist to prevent or eliminate deadlocks in a complex application.

A database with reduced performance undermines the efficiency and user experience of the whole application. And an application’s features, interfaces, and design don’t matter if it works slower than its competitors.

Luckily, there are ways to fix performance issues of normalized databases. First, let’s examine how to improve database performance with several optimization practices Apriorit.

Need to improve your data management solutions?

Partner with Apriorit for reliable security and flexibility of your data management.

Improving database performance

When a database underperforms, it’s worth examining the queries that don’t work as expected. Even if developers are sure they wrote well-structured and clear queries, there’s often room for database performance optimization. And well-structured queries aren’t always the best performing.

Analyzing and identifying issues in an SQL query can take a considerable amount of time, from several days to a week. To speed it up, we use certain practices to determine where a problem with a query is most likely to occur. Let’s consider some of them:

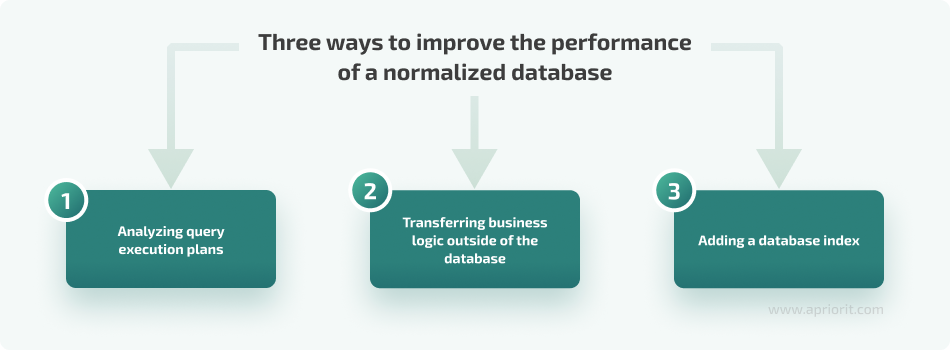

1. Analyzing query execution plans

An SQL server performs a separate operation for each part of an expression with the OR operator and eventually combines the obtained results and outputs them as a single result. When a developer writes a Join expression with OR operators, an SQL server has to perform as many joins as there are OR operators and then combine the results.

Let’s consider an example where two tables are joined and the JOIN instruction includes an OR operator:

SELECT DISTINCT

FirstName, LastName

FROM UserProfile

INNER JOIN EmployeeProfile

ON UserProfile.UserProfileId = EmployeeProfile.UserProfileId

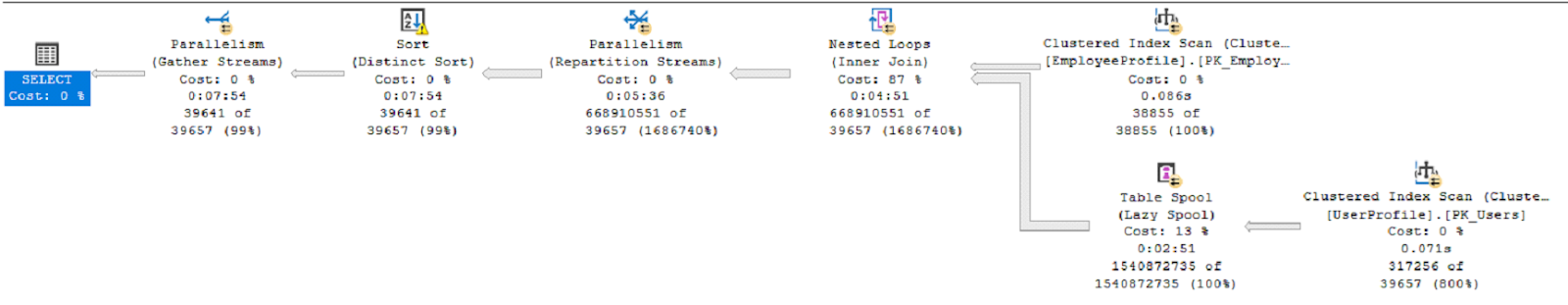

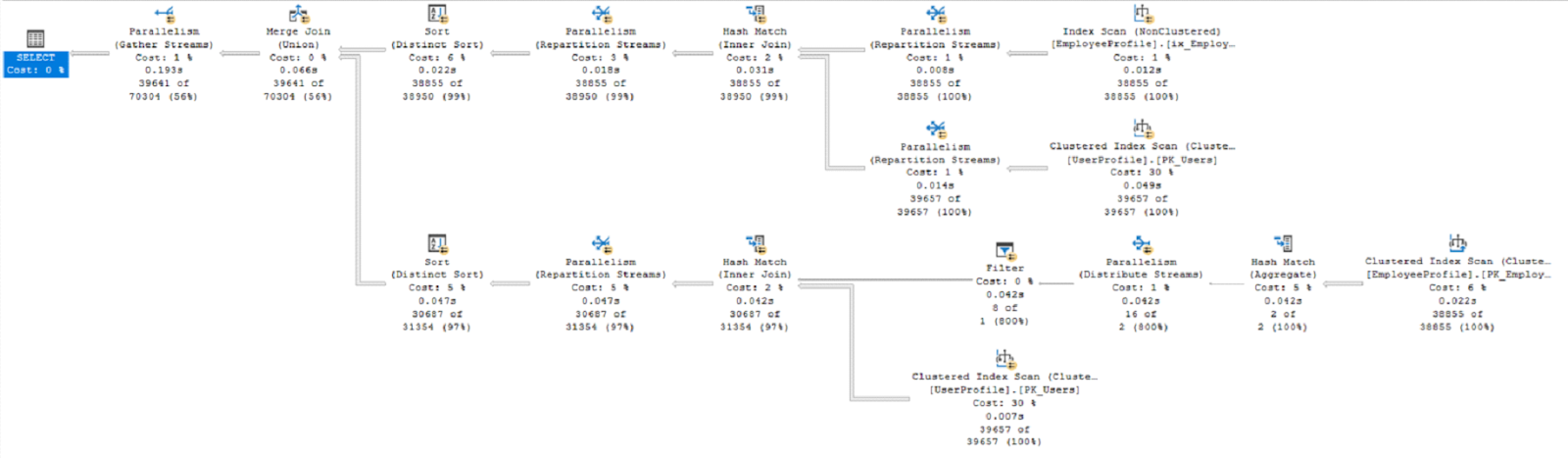

OR UserProfile.MobilePhone = EmployeeProfile.HomePhoneIn this example, it looks like the code simply has to scan the UserProfile (39657 records) and EmployeeProfile (38855 records) tables and return the results. But its execution looks like this:

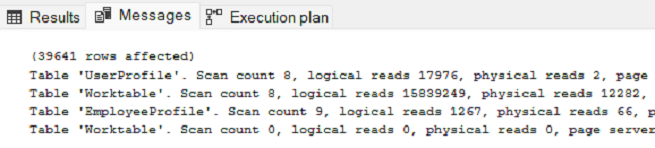

The server finishes two scans but struggles to process the OR conditions between multiple columns. The query takes almost eight minutes to execute.

Let’s see how we can improve database performance to help the SQL server process the query faster. For this example, it will be much more efficient to eliminate the OR operator and split the query into two almost identical queries but with different JOIN conditions. Then, we can add an operator that combines the results of the two queries. As a result, the query code will become less compact and possibly less understandable to a human. However, let’s see what comes out of this:

SELECT

FirstName, LastName

FROM UserProfile

INNER JOIN EmployeeProfile

ON UserProfile.UserProfileId = EmployeeProfile.UserProfileId

UNION

SELECT

FirstName, LastName

FROM UserProfile

INNER JOIN EmployeeProfile

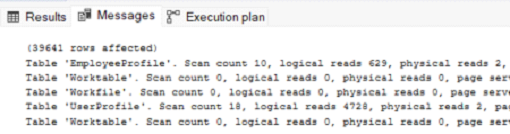

ON UserProfile.MobilePhone = EmployeeProfile.HomePhoneHere’s how the code executes:

This time, the server doesn’t have to read millions of pages from the cache, and it executes the query in less than one second.

Compared to the previous example, the query code is less structured from a developer’s perspective, but the performance is impressive. The SQL server finds this query much easier to execute because:

- Query expressions are straightforward, allowing the server to use indexes more effectively.

- Queries are independent, so the server can execute them in parallel.

OR is not the only operator that, when removed, can simplify the code for the server and achieve better results. Removing double IN or CASE operators can also improve query speed and resource use. However, getting rid of these operators makes a developer’s work harder as the script becomes longer and more difficult to read.

Related project

Supporting and Improving Legacy Data Management Software

Supporting and Improving Legacy Data Management Software

A data management solutions provider recruited us to help them modernize and support their legacy system. With our help, they made this system more reliable, improved user satisfaction, and freed enough resources to develop a new product.

2. Transferring business logic outside of the database

Stored procedures and functions help developers encapsulate business logic that is created at the database level. We can use these procedures and functions for:

- Access control

- Code reusability

- Performance optimization (e.g. pre-compilation and cached execution plans)

However, overreliance on procedures and functions to handle business logic can negatively impact database performance. For example, if one of the application’s functions parses imported data, it shouldn’t operate at the database level. The application should receive the data, process it, and then store it in the database. When exporting data, the stored procedure should only retrieve the data to be exported, while the application should perform all further actions.

Processing data at the application level reduces the load on the database and simplifies the understanding and maintenance of the business logic code. Architecture designers decide which actions to perform at which level depending on the project and available resources, but it’s generally a good practice to remove anything that can be executed at other levels from the database level.

3. Adding a database index

When analyzing a query execution plan, a developer may be tempted to add an index suggested by the execution plan analysis tool, such as SQL Server Management Studio. An index is a structure that helps to quickly look up entries in a database and search for specific data.

Indexes are generally useful tools, but overloading a table with indexes can degrade the performance of write operations (UPDATE, DELETE, INSERT). Interacting with an indexed column requires the SQL server to update the indexes, which takes some time. Additionally, storing indexes requires additional space and increases the size of database backups.

Before creating an index, it’s necessary to ensure that specific columns in the table require an index. Similar indexes might already exist, and modifying an existing index may provide the same performance improvement as creating a new one.

The three practices we described above help to improve database performance without major changes to its architecture or key data management principles. But in some cases they aren’t enough to achieve satisfactory performance and you need more drastic measures like denormalization. Let’s explore what denormalization is and how it can help you achieve high database performance.

Read also

How to Ensure SaaS Data Security with a Curated Database Design

The choice of database structure greatly impacts both the performance and security of a database. Apriorit experts offer tips on data architecture design to find the balance between database properties that fits your business goals.

Denormalizing a database

While database normalization is a proven and widely used design process, denormalization is less common and should be applied with caution.

Denormalization is a database optimization technique used during database design that adds irrelevant data to tables, contradicting normal forms. Although denormalization is the opposite of normalization, it is not a reverse process; rather, it is a technique that can be applied after normalization.

Denormalization of a database or specific tables can help:

- Improve database performance. Denormalization creates summary tables that aggregate data from other tables, significantly speeding up data retrieval for analytics or reporting. A SQL server needs less time to execute a query when it pulls data from a single table rather than multiple tables.

- Simplify data retrieval queries. Queries to a denormalized database contain fewer conditions and often don’t require joining tables. Writing such queries takes less of a developer’s time and reduces the risk of introducing bugs.

However, when used in excess or by an inexperienced database developer, denormalization can result in a variety of negative consequences:

- Increased data redundancy

- Need for more data storage and larger backups

- Risk of data inconsistency

- Slow Write operations

- Complex data updates and maintenance

A more sophisticated approach to data management can retain normalization while also maintaining denormalized data. If data inconsistencies occur, this parallel structure allows for restoring denormalized data from the normalized database. For instance, one database can store data in a denormalized format for reporting and analysis, while another normalized database is used for adding, deleting, or modifying data. Developers can update denormalized data from the second database with a specific job.

Conclusion

Data management experts can try optimizing database performance on two levels: design or utilization. Depending on the specifics of a particular database, you may need to only use one or combine both of these approaches.

Apriorit’s data management specialists have seen many cases where a database needed a nuanced and original approach to data organization and queries. In this article, we examined a couple examples and offered practices that can significantly improve database performance.

Our data management specialists know how to analyze any type of database and can suggest efficient ways to improve its security and productivity. We can also build a new data management solution from scratch that will fit your requirements and keep your database performance at a consistently high level.

Have challenging data management tasks?

Outsource them to Apriorit’s experts and ensure your solution’s performance, security, and productivity.