Key takeaways:

- Open-ended inputs, dynamic context, and dense integrations expand the attack surface for GenAI solutions.

- Meeting requirements of the EU AI Act, the GDPR, ISO/IEC 42001, and NIST AI RMF is essential for delivering a secure and compliant GenAI solution.

- Data poisoning, data exfiltration, prompt injection, and model theft are among the major security risks for GenAI solutions.

- Measures such as the principle of least privilege, input/output sanitization, data minimization, data provenance, and continuous observability can minimize these risks.

Security boundaries that work for traditional machine learning (ML) models aren’t as effective when applied to generative AI (GenAI) models.

Instead of curated datasets and static flows, GenAI systems handle open-ended prompts, pull data from live and often unvetted sources, and interact with external tools and APIs that can change their behavior overnight. This unpredictability has a direct impact on the security of generative AI architectures.

Moreover, failing to handle this unpredictability properly and at the right time can lead to issues ranging from expensive retrofitting to critical compliance blockers.

With this article, we aim to help technical leaders and development teams working on AI-powered projects learn how to make informed choices to build a resilient, compliant, and secure GenAI architecture.

Contents:

- Why GenAI security demands a new perspective

- What is a generative AI architecture?

- Business risks of insecure GenAI systems

- GenAI security risks across the architecture and beyond

- Best practices for building a secure GenAI architecture

- Build a secure and compliant GenAI project with Apriorit experts’ assistance

- Conclusion

Why GenAI security demands a new perspective

GenAI shifts security from protecting a static model to defending a continuously changing environment — and businesses working on GenAI solutions need to adjust their approach accordingly.

With GenAI systems, security risks emerge both before and long after deployment. In fact, Gartner predicts that by 2027, over 40% of AI-related data breaches will stem from the misuse of GenAI.

To adapt to evolving threat conditions, GenAI systems require fluid security controls:

- continuous input validation

- granular model behavior constraints

- ongoing monitoring of how contextual changes affect output safety

- and more

Without these controls, GenAI becomes an attractively easy target for hackers who might leak sensitive data through crafted prompts, generate manipulated or harmful content, or exploit integrations to get even more access within your system.

That’s why we need to treat GenAI security differently from the security of non-generative AI systems. That’s also why frameworks like the EU AI Act introduce stricter documentation requirements and risk management expectations for GenAI systems than for other AI and ML solutions.

Some unique features of GenAI systems can already be seen at the architectural level, so let’s take a look at them.

Want to upgrade your product with GenAI capabilities?

Work with the Apriorit team to design, train, and integrate generative AI solutions that strengthen your operations and open new revenue opportunities.

What is a generative AI architecture?

And how is it different from the architecture of non-generative ML systems?

A GenAI architecture is the foundation of any generative AI system, enabling the AI model to process data, learn from it, and generate outputs. At a high level, GenAI systems include the same architectural layers we see in any machine learning system:

- Infrastructure layer — includes essential hardware and software resources needed to build, train, and deploy a GenAI model

- Data processing layer — where raw data is transformed into a format the AI system can work with

- Model and training layer — where algorithms process data and generate outputs

- APIs and integrations layer — connects GenAI applications and LLMs with backend systems and third-party services

- Application layer — consists of agents and UX components that make the system easy to interact with for end users

So, what makes these systems unique are not the particular GenAI architecture components, but rather the way they operate and connect.

Table 1: Differences between ML and GenAI systems

| Component | Non-generative ML systems | GenAI systems |

|---|---|---|

| Input | Curated | Open-ended prompts |

| Context | Bounded datasets | Retrieval of external data at runtime |

| Output | Deterministic, highly structured outputs | Non-deterministic outputs that require additional validation |

| Integrations | Limited batch pipelines | Extensive use of third-party APIs, plugins, and orchestrators |

| Runtime | Static inference graphs | Dynamic agent flows |

The dynamic, interconnected, and unpredictable nature of GenAI systems significantly expands their attack surface. It also makes legacy AI security methods, such as standard data validation and model isolation, less effective for securing GenAI solutions.

Consequently, failing to determine and apply proper security measures when planning and creating the architecture for your next GenAI project may have both financial and legal implications for your business.

Let’s see what some of the risks and consequences might be.

Read also

Challenges in AI Adoption and Key Strategies for Managing Risks

Address key obstacles in AI implementation with practical guidance that helps you streamline adoption, reduce risks, and make informed technical and strategic decisions.

Business risks of insecure GenAI systems

Without proper safeguards implemented at the architectural level, even minor security gaps in GenAI systems can become severe liabilities, ranging from operational downtime to compliance violations.

Below, we analyze what consequences your business must be prepared to deal with if you plan on working with GenAI:

1. Manipulated model behavior — A compromised GenAI system can be dangerous to its end users if malicious actors manipulate it to generate inaccurate, biased, or misleading outputs. This is especially critical in sectors such as healthcare and finance, where inaccurate model outputs can lead to unreliable recommendations or discriminatory treatment, triggering investigations and damaging a brand’s reputation.

2. Intellectual property (IP) rights and privacy violations — GenAI models with poor data protection and prompt filtering mechanisms can lead to the exposure of proprietary models, confidential datasets, or personally identifiable information. This puts your business at risk of violating laws and regulations such as the GDPR, the EU AI Act, and HIPAA (for healthcare).

3. Data loss, exposure, or exfiltration — GenAI systems are useless without data. If your system relies on insecure API endpoints, outdated logging mechanisms, or unreliable integrations, that data becomes vulnerable. Hackers can manipulate or expose your model’s training data or any other data it has access to, leaving your business with both compliance and operational complications.

4. Operational disruptions — Security gaps in GenAI pipelines, model hosting, or third-party integrations can trigger cascading failures across connected systems. In complex enterprise environments, such failures are hard to localize and can result in prolonged downtime, breaches of service-level agreements, and costly incident recovery efforts.

5. Legal and regulatory non-compliance — When GenAI systems lack governance, clear documentation, and traceability, it may be difficult for your business to demonstrate compliance with relevant laws and regulations. Missing risk assessments, opaque data flows, and poor logging can all be interpreted as inadequate controls during audits.

6. Reputational damage — Regardless of the root cause, any publicized GenAI incident will affect your brand image and may cause mistrust among your clients, partners, and investors. And once stakeholders start questioning your AI governance, rebuilding their confidence may take even longer than handling the initial incident.

7. Financial losses — The financial impact of insecure GenAI is rarely limited to a single fine or incident. Businesses may face regulatory penalties, legal fees, compensation claims, forensic and remediation costs, and increased insurance premiums. Plus, there are indirect losses from operational downtime, halted projects, and lost customers.

Together, these risks show that GenAI security is not just a technical concern but a matter of operational resilience. Yet, to effectively address them, your team will need to determine not only what mitigation tactics to apply but also when and where to apply them.

Related project

A Smart Solar Grid: Using AI for Energy Storage and Trading

See how Apriorit specialists enhanced complex energy infrastructure with AI capabilities. We built tailored AI modules that transformed grid monitoring, helped our client automate critical energy processes, and provided actionable insights on grid operations.

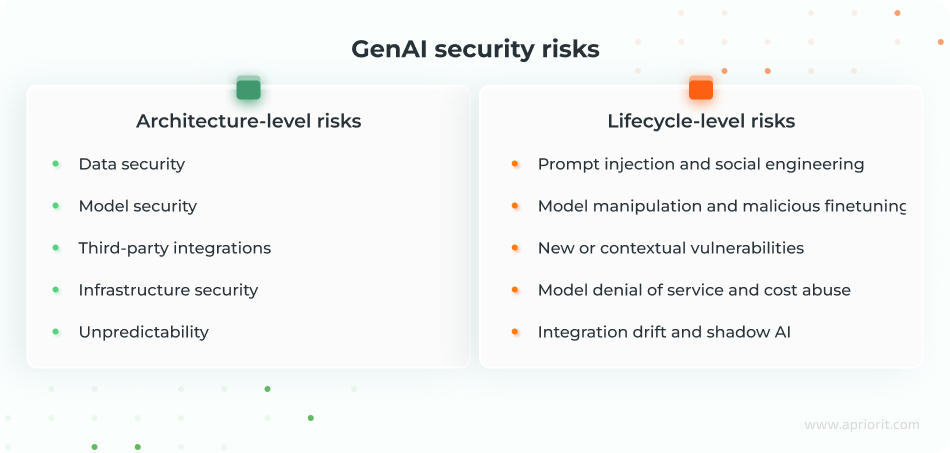

GenAI security risks across the architecture and beyond

There are different approaches for identifying security risks to GenAI systems. OWASP Top 10 for LLM Applications, for example, assesses the most common threats an LLM-based system may encounter.

However, if your goal is to design a secure and resilient architecture, you may want to adjust your team’s focus. Not every GenAI risk can or should be addressed at the architectural level — and your team needs to account for that.

Structural threats require an early response and can become extremely expensive to mitigate after your system architecture is designed and finalized. Other threats are highly dependent on changes in user behavior, data context, and adversarial techniques, so they can only be handled at later stages of the model’s lifecycle.

Let’s analyze these two categories of risks in detail.

Risks to address at the architectural level

These risks stem from how components of generative AI architecture connect, what data they handle, and how the system is exposed to internal and external actors:

Data security — GenAI systems often rely on large and heterogeneous datasets, which may include external content used for pretraining, fine-tuning, or retrieval. If these sources are tainted or unvetted, attackers can inject malicious or biased data that will gradually adjust model behavior. At the same time, training or grounding datasets may contain sensitive records (PII, PHI, trade secrets, etc.) that can later surface in model outputs or logs.

Common threat vectors for GenAI systems include data poisoning, unvetted source data, and training data leakage.

Model security — Attackers may attempt to calculate a GenAI model’s parameters or reconstruct its decision boundary through systematic querying and analysis of input–output pairs. In more advanced scenarios, they can try to infer whether specific records were part of the training set, exposing details about individuals or proprietary datasets.

Typical threat vectors are model theft and model inversion attacks.

Third-party integrations — GenAI systems frequently depend on external APIs, plugins, and SaaS connectors to access data and use tools. Each integration introduces new credentials and traffic patterns.

These risks are often caused by token misuse or insufficient rate limiting. If access tokens are over-privileged, poorly scoped, or reused across multiple services, attackers will only need to compromise one component in order to get access to the whole system. Insufficient rate limiting also opens the door to abuse scenarios such as automated scraping, cost inflation, and resource exhaustion.

Infrastructure security — The environment in which models and supporting services run is another critical risk area. Publicly exposed endpoints, network segments with excessive permissions, weak key management, and insecure use of shared secrets increase the likelihood of unauthorized access. Misconfigured storage, logging, or container orchestration components can further expose data, models, or internal services to actors who should never reach them.

Unpredictability — GenAI systems accept free-form prompts and may chain multiple tools or calls at runtime. As a result, their behavior can be hard to predict. Malicious or simply malformed inputs can lead to irrelevant, confusing, or unsafe outputs, especially when the system is allowed to interact with external content or use connected tools and execute actions on behalf of users.

These are only the major groups of architecture-level risks that your team needs to be aware of. However, not all GenAI risks can be fully designed out at this stage of the model’s lifecycle. Let’s analyze what risks are too dynamic for your team to efficiently mitigate at the architectural level alone.

Read also

Adopting Generative AI in Healthcare: Key Benefits, Use Cases, and Challenges

Discover how generative AI can transform healthcare systems by improving data workflows, supporting clinicians with intelligent insights, and enabling more efficient operational processes across medical organizations.

Risks to address beyond the architectural level

While architectural decisions can reduce your model’s exposure to threats, there are still risks that play out over time and are closely tied to how your system is used and maintained.

These risks include:

Prompt injection and social engineering — Attackers can shape the model’s behavior through crafted instructions (injections) delivered either directly by users or indirectly through retrieved content, such as poisoned web pages or documents.

Social engineering adds a human layer, where users are convinced to input sensitive information or unsafe instructions into the system. Both prompt injection and social engineering attacks exploit the model’s tendency to follow user instructions and the trust users place in AI-assisted workflows, making these workflows highly vulnerable.

Model manipulation and malicious fine-tuning — Over time, small shifts in training data and configurations can gradually change how a model behaves. Malicious or low-quality fine-tuning data can introduce systematic biases, weaken safety constraints, or optimize the model toward an attacker’s goals. Because these changes often appear as a gradual drift rather than a single event, they are difficult to detect by looking only at static architecture or configuration snapshots.

New or contextual vulnerabilities — Hackers constantly experiment with novel ways to bypass safeguards, chain multiple tools or plugins, or exploit previously unknown weaknesses in model APIs and supporting libraries. These vulnerabilities frequently depend on specific prompts, data contexts, or integration setups that you can’t anticipate when designing the initial architecture of your GenAI system.

Model denial of service and cost abuse — GenAI workloads can be resource-intensive, making them attractive targets for denial-of-service scenarios focused on tokens and compute usage. Carefully crafted prompts or automated traffic can drive excessive token use, increase latency, and inflate operating costs. Because these attack vectors are tied to real-time usage and scale, they are difficult to fully anticipate at design time.

Integration drift and shadow AI — As systems evolve, teams may add new connectors, tools, or prompt flows outside of formal review processes. Over time, the as-designed architecture diverges from the as-deployed reality, creating shadow GenAI usage and untracked data flows. This drift can introduce unplanned dependencies, weaken boundaries between environments, and complicate efforts to understand where data goes and how decisions are actually made.

Now that you better understand when to address specific risks, let’s talk more about strategies for mitigating them.

Watch webinar

AI Chatbots Security Frameworks for Business Success

Learn how to enhance your chatbot architecture with proven security practices that reduce risks and support stable integration into your business systems.

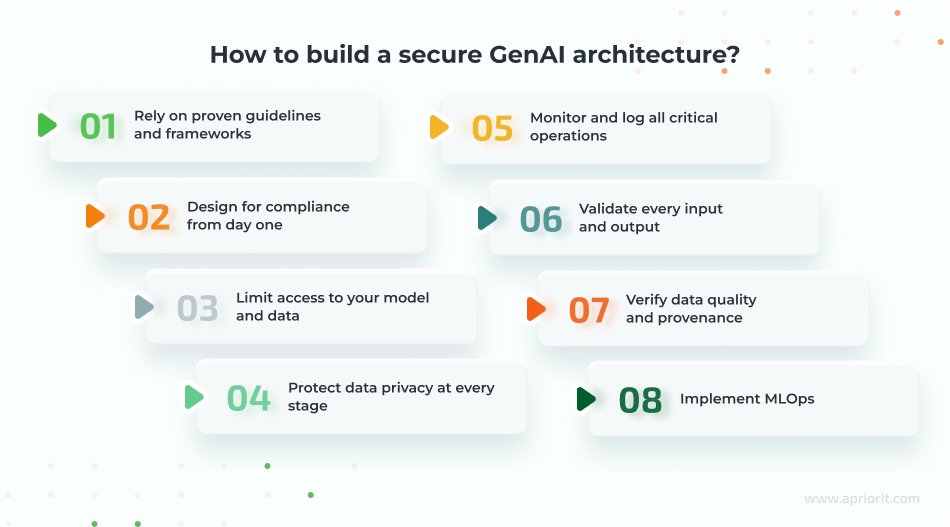

Best practices for building a secure GenAI architecture

Want to know how your team can ensure proper security of your GenAI architecture from day one?

We have eight recommendations:

1. Rely on proven guidelines and frameworks

Established frameworks help teams avoid blind spots and ensure alignment with the expectations of both auditors and enterprise security leaders. As a starting point for designing a secure GenAI system architecture, you can rely on these guidelines and frameworks:

- OWASP Top 10 for LLM Applications

- NIST AI Risk Management Framework

- AI Trust, Risk and Security Management (AI TRiSM) framework by Gartner

Referring to these resources during architecture design and threat modeling will help you clarify decision-making, accelerate design reviews, and ensure consistent terminology across teams and vendors.

2. Design for compliance from day one

GenAI systems operating in regulated industries must demonstrate compliance with rules that govern data protection, model behavior, and documentation quality. Key frameworks you may need to comply with include:

Additionally, your team should account for any industry-specific requirements, such as HIPAA for healthcare or PCI DSS for finance.

It’s important to address relevant compliance requirements when clarifying specifications for your system, selecting vendors, and planning the data architecture. This way, you can prevent costly rework later on and support the audit readiness of your solution.

3. Limit access to your model and data

Your system’s most valuable assets — all data stores, model endpoints, and integration points — require granular security and protection from unauthorized access. To achieve this, you can enforce zero trust, build and implement robust policies for role-based access, and apply advanced authentication and authorization mechanisms.

Implement these controls during environment setup and CI/CD configuration so that new services inherit secure defaults automatically. This approach will help your team reduce risks such as model theft, unauthorized data access, and token or scope misuse through third-party tools.

4. Protect data privacy at every stage

GenAI workloads often process sensitive data like customer records, internal documents, or regulated information. To secure them effectively, your team needs to apply privacy-preserving techniques such as:

- data minimization

- pseudonymization

- anonymization

You can also implement mechanisms for PII/PHI detection and redaction at the ingestion, retrieval, and output layers of your system.

5. Monitor and log all critical operations

Visibility is essential for detecting misuse, investigating incidents, and supporting audits. That’s why your team needs to establish continuous monitoring and logging for all security-relevant events.

In particular, you want to capture security-relevant telemetry such as prompt and response metadata, tool calls, connector scopes, model and version IDs, token spend, safety events, and enforcement results.

Another important security improvement is to watch for anomalies like potential exfiltration patterns, prompt injection signatures, and cost spikes to timely detect and address possible incidents.

6. Validate every input and output

Due to the nature of GenAI systems, both their inputs and outputs must be treated as untrusted by default.

To prevent malicious instructions or unvetted content from flowing into the model, your team can turn to such techniques as:

- structured input validation

- content filtering

- context isolation

It’s also important to validate and sanitize your model’s outputs before they reach downstream systems or end users.

Implementing these controls at the application boundary (where the model interacts with external components) helps to address risks of prompt injection, insecure output handling, and data leakage.

7. Verify data quality and provenance

Unvetted or low-quality data can significantly degrade model behavior or even introduce security vulnerabilities. To prevent that, your team needs to enforce data provenance tracking, source allow-lists, and lineage verification so that your GenAI system only uses trusted data.

By applying relevance scoring and integrity checks to content used for retrieval or fine-tuning during data engineering and retrieval augmented generation (RAG) pipeline design, you can reduce poisoning risks and improve both system safety and reliability.

8. Implement MLOps

To catch and mitigate potential model drift, regressions, or unintended behavior changes in a timely manner, it’s important to establish proper machine learning operations (MLops) workflows. This includes implementing model versioning, rollback workflows, and scheduled evaluations for your GenAI system.

Make reviews for updates to prompts, connectors, and tool integrations a regular practice, and maintain clear documentation for auditability. These processes should be established in pre-production and continued throughout post-launch operations to ensure the system adapts safely as usage grows.

By implementing these practices, your team can enhance the architectural security of your GenAI system and reduce the likelihood of a breach. Depending on the system’s complexity and regulatory scope, you may need expert assistance from external teams experienced in GenAI security engineering and compliance to implement these controls effectively.

Read also

Building a Secure AI Chatbot: Risk Mitigation and Best Practices

Enhance your approach to AI-driven communication using step-by-step recommendations from Apriorit. Follow our advice to reduce security risks, strengthen system stability, and help your chatbot operate responsibly at scale.

Build a secure and compliant GenAI project with Apriorit experts’ assistance

As a full-cycle software development company, Apriorit will provide you with the exact expertise your project needs to tackle high-stakes GenAI tasks. You can turn to us for:

- Strategic AI consulting to assess the need for AI integration and plan it in a secure and cost-efficient manner

- Custom GenAI development to deliver non-trivial generative AI solutions that will differentiate you in the market

- AI and ML development to move beyond the capabilities of off-the-shelf AI solutions and integrate innovative technologies

- Cybersecurity engineering to help your team implement a security-first approach not only for your GenAI system but also for related infrastructure and workflows

- Security audits and penetration testing to emulate GenAI-specific attacks as well as to assess and improve the integrity and resilience of your solution

Working with Apriorit will help you ensure that the GenAI-powered solution you build is well-protected, fully compliant, and competitive.

Conclusion

Securing a GenAI system begins with its architecture. How data is collected and transformed, how models are accessed, and how external services connect all shape the system’s overall cybersecurity posture. Factors such as unbounded user input, reliance on dynamic data sources, and expanding integration layers make GenAI environments inherently more exposed than traditional ML solutions.

One way businesses can address these risks is by making security an integral part of their GenAI solution’s architecture. This is particularly valid for risks stemming from insecure integrations and access misconfigurations.

Other risks, such as model drift and behavior manipulations, require measures that should be applied not only at the architecture design stage but throughout the system’s entire lifecycle.

With Apriorit’s architectural expertise and hands-on experience in secure AI development, we can help your team navigate this complexity. Our dedicated GenAI development team will gladly assist you with designing resilient GenAI systems, validating data and model security, and maintaining integrity as your system grows.

Need expert guidance on GenAI adoption?

Transform your operations with strategic AI consulting! Collaborate with our experts to assess your processes, identify high-impact AI opportunities, evaluate technical feasibility, and build a realistic implementation plan for your next GenAI project.

FAQ

How do the GDPR and the EU AI Act impact GenAI architecture design and audits?

<p>Both frameworks push you toward privacy-by-design and traceability. Architectures must support data minimization, clear data lineage, and control of where and how personal data is used in prompts, training, and logs. </p>

<p>You’ll also need documented risk assessments, model and dataset versioning, and data governance processes to keep your system transparent and easy to audit and assess.</p>

What are best practices for continuous monitoring and incident response in GenAI systems?

<p>Continuously monitor how your GenAI system is used: prompts, responses, tool calls, model versions, and unusual cost or latency spikes.</p>

<p>Additionally, prepare incident response scenarios tailored to GenAI. These should include playbooks for disabling risky capabilities, rolling back models or prompts, and investigating detected incidents.</p>

<p>You may also want to implement anomaly detection within your GenAI system. To do this, you’ll need to define what normal behavior of your system looks like and configure alerts on suspicious behavior such as unexpected data access or strange sequences of tool use.</p>

How can I protect against prompt injection, model leakage, and data poisoning?

<p>You’ll need a combination of design rules, access policies, and ongoing checks applied at different points in the lifecycle of your GenAI system.</p>

<p>For prompt injection, focus on how inputs are accepted and how outputs are allowed to affect other systems. </p>

<p>For model leakage, consider who can access models, embeddings, and logs, and what those components might reveal. </p>

<p>For data poisoning, pay attention to where training and retrieval data comes from and how it changes over time.</p>

Should I build a custom model or use a managed LLM API?

<p>When choosing between custom GenAI models and managed LLM APIs, control and speed become decisive.</p>

<p>Managed LLM APIs are faster to adopt and easier to operate, but they give you less visibility into training data, internal safeguards, and data residency. </p>

<p>Hosting or customizing your own model offers stronger control over privacy, configuration, and compliance, but it demands more infrastructure, expertise, and ongoing maintenance.</p>

<p>You can also consider combining both: managed APIs for low-risk or exploratory use cases, and more controlled deployments for sensitive workloads or regulated environments.</p>

How can I detect model compromise or poisoning?

<p>Look for changes in model behavior and usage patterns. Common symptoms include:</p>

<ul>

<li>Drifts in output quality or safety</li>

<li>Unusual responses to well-known prompts</li>

<li>Unexpected increases in token usage or latency</li>

<li>Shifts in the mix of data sources feeding the model</li>

</ul>

<p>Security signals such as repeated policy violations, strange tool calls, or access from unusual locations are also important indicators.</p>