Whether you’re about to start developing a new IT product or plan to expand an existing solution, keeping up with the latest software development trends is vital. Learning what users expect to see in software can help you provide desirable functionality, ideally before your competitors do. And knowing which technologies are on the rise can help you choose the best tools for making your solutions work even better and releasing them faster.

Since every technology takes at least a couple of years to evolve and show results, many tech trends remain the same for years in a row. In this article, we consider five trends that will influence the way businesses develop software in 2026.

Our choices are based on our own market research, insights from leading global tech conferences and tech community gatherings, and conversations with clients and partners.

This post will be interesting for software project leaders who are looking for ways to enhance and update their solutions but aren’t sure which trends will suit their projects — and when to expect new opportunities.

Contents:

- 1. AI technologies for smart software creation

- 2. Cybersecurity implementation timeline shifts

- 3. The platform engineering approach gains traction

- 4. Go and Rust win the performance race

- 5. Clouds and containers become a must

- A few more trends to keep an eye on

- Stay on top of the latest trends with Apriorit

1. AI technologies to use now (and to expect soon) for smart software creation

AI tools already take care of routine coding tasks, generate tests, and draft project documentation. And the trend for actively using AI in software development will only grow and evolve. Gartner predicts that 90% of enterprise software engineers will use AI code assistants by 2028.

To enhance and automate modern software development processes, software engineers already use:

- Generative AI tools like GitHub Copilot, AlphaCode, OpenAI Codex, GPT-4, and Amazon CodeWhisperer

- Custom-made or third-party LLMs for assisted coding

- External APIs like DALL-E for image generation

- And more

Why is AI so popular in software development?

By saving developers’ time, AI tools allow project leaders to gain business benefits such as a lower cost of software development in the long run, shorter time to market, and better overall user experience.

AI adoption in software development is starting to pay off. According to the McKinsey Global Survey on the state of AI (June–July 2025), many organizations see measurable cost reductions from AI use cases, particularly in software engineering and IT. Here’s how the savings break down, showing the percentage of respondents for business units:

| Cost decrease | Software engineering | IT |

|---|---|---|

| ≥20% | 7% | 8% |

| 11–19% | 14% | 10% |

| ≤10% | 35% | 36% |

Though most companies see modest savings, a growing share are signaling that AI is moving from experimentation to real impact.
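
To see how large the group reporting any savings is, the rows of the table can be summed per business unit. Here is a minimal Python sketch that uses only the percentages from the table above; the variable and function names are illustrative.

```python
# Share of respondents reporting each level of cost decrease,
# copied from the table above (percent of respondents).
cost_decrease = {
    "Software engineering": {">=20%": 7, "11-19%": 14, "<=10%": 35},
    "IT": {">=20%": 8, "11-19%": 10, "<=10%": 36},
}


def share_with_any_decrease(unit: str) -> int:
    """Total percentage of respondents reporting any cost decrease."""
    return sum(cost_decrease[unit].values())


for unit in cost_decrease:
    print(f"{unit}: {share_with_any_decrease(unit)}% report some cost decrease")
```

Read this way, 56% of software engineering respondents and 54% of IT respondents report at least some cost decrease, with the ≤10% band accounting for most of that share.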

One of the reasons why other organizations see no changes (or even increased costs) might be the expense of AI adoption: some tools are pricey, and developers need time to adapt existing solutions to project needs.

Note: It’s crucial to understand that AI works best as an assistant to — not a replacement for — your existing team. AI tools lack real human expertise. They also may provide inaccurate results, show data bias, and even lead to leaks of sensitive data and intellectual property. Consider Gartner’s scenarios for human–AI collaboration at work to effectively organize AI use within your teams.

So, what AI trends can you expect in 2026?

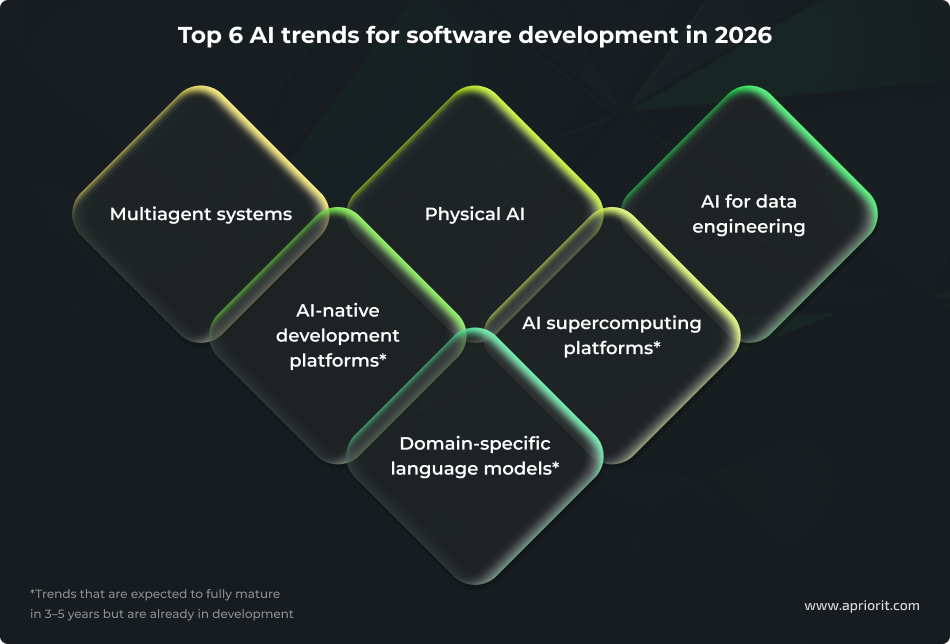

Below, we break down the six most promising AI technologies that we expect to evolve in the near future according to Gartner’s strategic technology trends, our own observations, and requests and feedback from our clients.

1. Multiagent systems (MAS) are collections of specialized AI agents that collaborate to handle complex workflows. Each agent performs a specific task, helping you achieve better efficiency, scalability, and interoperability compared to monolithic AI solutions. To efficiently and safely work with MAS, developers will need to design modular systems and implement observability tools to monitor agent interactions, ensuring reliability and compliance.

2. Physical AI brings intelligence into real-world environments through robots, drones, and smart devices that sense, decide, and act. Developers will need to build software that integrates AI with IoT, robotics, and edge computing. This includes simulation tools, digital twins, and orchestration platforms for fleets of devices to ensure safety and regulatory compliance.

3. AI for data engineering mostly means automating tasks like ETL pipeline creation, schema optimization, and anomaly detection. AI copilots and large language models (LLMs) are becoming foundational layers in data infrastructure, enabling autonomous workflows and proactive monitoring. For software development, this means faster, more reliable data pipelines and real-time analytics. Developers will need to design systems that integrate AI-driven data operations, support synthetic data generation for privacy, and ensure compliance with regulations like the GDPR and the EU AI Act.

Note that the next three trends are expected to fully mature in three to five years but are already in development and worth your attention:

4. AI-native development platforms leverage generative AI to assist with software creation, enabling developers to build applications with minimal manual coding. They will support prompt-based development and orchestration of AI agents, allowing small teams to deliver multiple projects simultaneously. Developers will need to design such platforms and then adopt new practices like prompt engineering, AI governance, and automated code reviews to ensure security and compliance.

5. AI supercomputing platforms will combine high-performance computing, specialized processors, and scalable architectures to train and run massive AI models as well as to handle data-intensive workloads. Software teams will need to use hybrid orchestration, adopt open standards and composable architectures, work with new compute paradigms, and design security and compliance strategies at the system level.

6. Domain-specific language models (DSLMs) are AI models trained on specialized datasets. They offer higher accuracy and compliance than generic LLMs, which is especially important for critical workflows like finance, healthcare, and HR. When developing DSLMs for real-life products, it’s best to target workflows where generic LLMs underperform. For DSLMs, software developers will need to implement robust privacy and quality controls.
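
To make the multiagent idea from item 1 more concrete, here is a minimal Python sketch of specialized agents chained by an orchestrator that records every hand-off for observability. The `Agent` and `Orchestrator` classes are hypothetical names introduced for illustration, and the agents are plain string functions standing in for real model or tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """A specialized agent: a name plus one narrowly scoped task."""
    name: str
    handle: Callable[[str], str]


@dataclass
class Orchestrator:
    """Routes work through agents in order and records every hand-off."""
    agents: list[Agent]
    trace: list[dict] = field(default_factory=list)

    def run(self, task: str) -> str:
        payload = task
        for agent in self.agents:
            result = agent.handle(payload)
            # Observability: keep an audit record of each agent interaction.
            self.trace.append(
                {"agent": agent.name, "input": payload, "output": result}
            )
            payload = result
        return payload


# Stand-in agents: real ones would call models or tools, not string functions.
planner = Agent("planner", lambda t: f"plan({t})")
coder = Agent("coder", lambda t: f"code({t})")
reviewer = Agent("reviewer", lambda t: f"reviewed({t})")

pipeline = Orchestrator([planner, coder, reviewer])
print(pipeline.run("add login form"))  # reviewed(code(plan(add login form)))
for step in pipeline.trace:
    print(step["agent"], "->", step["output"])
```

Keeping each agent behind the same small interface is what makes the system modular: an agent can be replaced or tested separately, and the trace gives reviewers one place to audit what each agent received and produced.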

2. Level up your cybersecurity measures, preferably from the very beginning of the project

Cybersecurity buzzwords like security-first development, secure by design, and secure software development lifecycle (SDLC) keep popping up in trend lists year after year.

But what exactly will change in 2026 with regard to how we treat security measures?

Here are a few reasons for your team to pay particular attention to cybersecurity when developing software in 2026:

- Because of the AI boom, LLMs are now deeply embedded in everything from customer interactions to internal operations. According to the OWASP Top 10 for LLM Applications 2025, developers and security professionals keep discovering new LLM vulnerabilities. Thus, software engineers have to think of ways to counter identified vulnerabilities and strengthen software defenses to mitigate as-yet-undetected threats.

- Attackers are now targeting weaknesses buried deep within supply chains and AI development pipelines. Meanwhile, regulators in the US and Europe are demanding unprecedented levels of transparency from companies selling IT products to governments or regulated industries. These factors make cybersecurity efforts simultaneously complex and confusing.

- Last but not least, Gartner is warning internal auditors to expect a particularly heavy workload in 2026, as the rapid rise of AI is creating acute cybersecurity, data governance, and regulatory compliance issues for organizations.

For software development companies, all these points mean that security and compliance can no longer be treated as afterthoughts: they must be embedded into every stage of the development lifecycle.

Let’s break down the top cybersecurity practices for modern software development in 2026:

- Adopt a secure SDLC. A secure SDLC is a comprehensive approach that embeds security practices at every stage of development, from planning and design to deployment and maintenance. Its primary goal is to identify and mitigate security vulnerabilities early in the development process.

- Shift towards DevSecOps. The DevSecOps approach extends DevOps principles by integrating security into the CI/CD pipeline. It promotes a culture where security is a shared responsibility among development, operations, and security teams. DevSecOps is crucial when you want to implement strong protection mechanisms without slowing down development or delaying releases.

- Implement a zero-trust approach. Regardless of whether a request to access data, devices, or apps originates from inside or outside the organization’s network perimeter, it’s crucial to verify it before allowing it. Core principles of zero trust include explicit verification, least privilege access, micro-segmentation, and continuous monitoring.

- Conduct regular security testing and audits. One of the best ways to improve your software protection is by testing it. Practices like security testing, penetration testing, and security audits can help you identify a solution’s vulnerabilities and fix them before malicious actors get a chance to exploit them.

- Generate SBOMs. A software bill of materials (SBOM) — a detailed inventory of all components, libraries, and dependencies used in your software — is crucial for security. SBOMs provide transparency into the software supply chain, helping your teams quickly identify vulnerabilities, outdated packages, and compromised components. With increasing regulatory requirements and the rise of supply chain attacks, SBOMs are becoming essential for compliance, risk management, and proactive security.

- Deploy sophisticated observability platforms. These platforms rely on OpenTelemetry for vendor-neutral telemetry collection and integrate metrics, logs, traces, profiling data, and LLM monitoring. They provide autonomous monitoring, predictive anomaly detection, and remediation across multi-cloud environments.

- Align with expert frameworks and pledges. Following established frameworks such as the NIST Cybersecurity Framework 2.0, the Open Cybersecurity Schema Framework, and the Secure by Design Pledge ensures consistency, compliance, and resilience. These frameworks offer proven security controls, methods, techniques, and tools. Adhering to them helps organizations meet regulatory requirements, improve incident response, and adopt security-by-default practices to reduce attack surfaces and strengthen trust across the software supply chain.

- Embrace preemptive cybersecurity. AI-driven techniques can anticipate and neutralize attacks before they occur. By 2030, 50% of security software spending is expected to go to preemptive cybersecurity solutions (PCS), and documented vulnerabilities are expected to surpass 1 million annually. Key capabilities of PCS include predictive threat intelligence, automated moving target defense, and advanced cyber deception. Adopting PCS reduces risk exposure and positions organizations for compliance as proactive defense becomes a universal requirement.

- Deploy AI security platforms (AISPs). AISPs consolidate controls to protect both third-party AI services and custom-built applications. They address AI-native risks such as prompt injection, rogue agent actions, and data leakage. Integrating AISPs into development pipelines ensures resilience and compliance with emerging regulations like the EU AI Act.

- Integrate post-quantum cryptography (PQC). To mitigate quantum threats, organizations should adopt quantum-resistant algorithms standardized by NIST, such as ML-KEM (FIPS 203, derived from CRYSTALS-Kyber) and ML-DSA (FIPS 204, derived from CRYSTALS-Dilithium). Early PQC adoption protects long-lived data and ensures compliance with emerging legal and regulatory requirements.
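
The zero-trust principles listed above (explicit verification and least privilege) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the shared secret, the permission table, and the `sign` and `authorize` helpers are hypothetical, and a real system would rely on an identity provider and a policy engine rather than a hard-coded dictionary.

```python
import hashlib
import hmac

# Hypothetical shared secret and permission table, for illustration only.
SECRET_KEY = b"demo-secret-do-not-use"
PERMISSIONS = {
    "alice": {"reports:read"},
    "build-bot": {"artifacts:write"},
}


def sign(identity: str) -> str:
    """Issue a token for an identity (stand-in for a real identity provider)."""
    return hmac.new(SECRET_KEY, identity.encode(), hashlib.sha256).hexdigest()


def authorize(identity: str, token: str, action: str,
              from_internal_network: bool) -> bool:
    """Evaluate every request the same way, wherever it comes from."""
    # from_internal_network is deliberately ignored: being inside the
    # perimeter grants nothing under zero trust.
    # Explicit verification: the token must match, even for internal callers.
    if not hmac.compare_digest(sign(identity), token):
        return False
    # Least privilege: allow only actions explicitly granted to this identity.
    return action in PERMISSIONS.get(identity, set())


token = sign("alice")
print(authorize("alice", token, "reports:read", from_internal_network=False))   # True
print(authorize("alice", token, "artifacts:write", from_internal_network=True))  # False
print(authorize("alice", "forged", "reports:read", from_internal_network=True))  # False
```

Note that an internal caller with a forged token or a missing permission is rejected exactly like an external one, which is the core of the approach.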

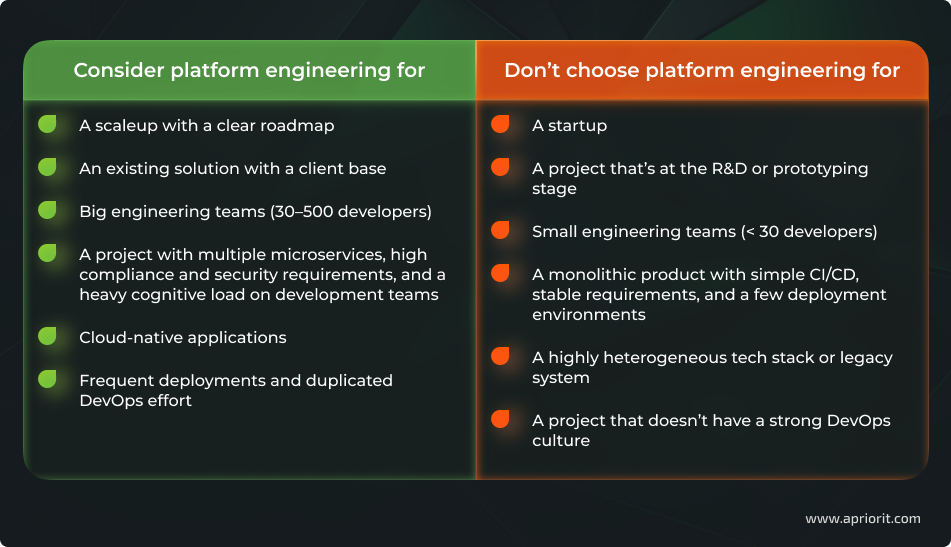

3. Who may benefit from adopting a platform engineering approach (and how)

The idea of platform engineering is to build and maintain internal development platforms that provide:

- Standardized environments

- Services for application deployment and database management

- Automated CI/CD pipelines

- Infrastructure templates (infrastructure-as-code)

- Logging and monitoring tools

- API gateways

- Security policies and secret handling services
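
As a rough illustration of how such a platform standardizes environments while enforcing policy centrally, here is a minimal Python sketch of a template that project teams fill in. Everything in it is an assumption made for the example: the default values, the `OVERRIDABLE` set, and the `render_service_config` helper do not come from any specific platform.

```python
import copy

# Hypothetical organization-wide defaults owned by the platform team.
PLATFORM_DEFAULTS = {
    "replicas": 2,
    "logging": {"format": "json", "level": "info"},
    "security": {"run_as_non_root": True, "secrets_source": "central-secret-store"},
}

# Settings that project teams are allowed to override.
OVERRIDABLE = {"replicas"}


def render_service_config(name: str, team: str, **overrides) -> dict:
    """Build a service config from shared defaults plus permitted overrides."""
    blocked = set(overrides) - OVERRIDABLE
    if blocked:
        raise ValueError(f"platform policy forbids overriding: {sorted(blocked)}")
    config = copy.deepcopy(PLATFORM_DEFAULTS)  # never mutate the shared defaults
    config.update(overrides)
    config["service"] = {"name": name, "owner": team}
    return config


print(render_service_config("billing", "payments", replicas=4))
```

A call such as `render_service_config("billing", "payments", security={})` raises `ValueError`, which shows the trade-off discussed in the pros and cons below: consistency and central security enforcement on one side, and a platform-owned gate for every exception on the other.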

According to Google Cloud, 55% of surveyed global organizations have already adopted platform engineering.

A well-known example of this trend is Spinnaker by Netflix and Google. This open-source continuous delivery platform automates software deployment, helps engineers deploy microservices, and supports multi-cloud strategies.

Is this software development innovation suitable for your project? Let’s break down some pros and cons.

Pros:

- Internal development platforms offer simple interfaces, allowing developers to work faster and more easily without spending time and effort figuring out complex infrastructure.

- Since every team member uses the same deployment patterns, all parts of your software project will be consistent, reducing incompatibility and other efficiency risks.

- Such platforms provide opportunities to enforce security standards centrally, making sure all developers stick to chosen cybersecurity standards.

Cons:

- This approach requires resources for a dedicated team, months of development, ongoing maintenance, and infrastructure costs. For small companies, this becomes a massive investment with unclear ROI.

- There’s a risk of overengineering with unnecessary tools, templates, and too many abstractions.

- Custom development platforms can become a bottleneck for innovation, as every change (such as a new language or new cloud service) must go through the platform team first before the project team can proceed.

4. Consider choosing high-performance languages like Rust and Go

Although popular languages like Python, C/C++, and Java remain in leading positions in the TIOBE Index (as of December 2025), Rust and Go are continually improving their ratings and have made it into the top 20.

At Apriorit, we see rising interest in Rust and Go, which is understandable as both of these languages can be used to develop high-performance software.

Rust

Rust has been voted the most admired language in the latest Stack Overflow survey for a reason. Software engineers often mention that it is a memory-safe language, meaning it reduces memory-related bugs and the security risks they cause.

Meanwhile, the latest State of Rust survey shows that 38% of respondents were using Rust at work for the majority of their coding in December 2024, versus 34% in the previous year. Moreover, 45% of respondents said that their organization was making non-trivial use of Rust.

Rust is praised for its speed, security, and performance, fairly winning its place in the list of software development trends for 2026. Project leaders often choose this language for the following reasons:

- Software written in Rust typically consumes less energy than the same solution written in JavaScript or C#, as Rust compiles to native code and runs without a garbage collector or heavy runtime.

- Rust fits different use cases, from device drivers to cloud infrastructure, as it offers strong safety, predictable performance, and modern tooling.

- Thanks to interoperability features, Rust can be easily added to projects written in other programming languages.

- Due to Rust’s memory safety without a garbage collector, compile-time error detection, and interoperability capabilities, it’s a great choice for IoT projects.

Golang (Go)

According to the 2024 Go Developer Survey, the majority of respondents develop with Go on Linux (61%) and macOS (59%).

Together with Python, Go is desirable for developers working on high-performance systems programming, as highlighted in the 2025 Stack Overflow survey results.

Golang was built for projects related to networking and infrastructure but is now used for a variety of applications, from cloud-based apps and DevOps to AI and robotics. Here are a few of the main reasons you should consider using this language in your projects:

- Golang works well for building scalable microservices, successfully handling limitations inherent to microservice architectures including architectural complexity, network latency, and security vulnerabilities.

- Go is supported by a rich ecosystem of libraries and tools, which makes it relatively easy for your team to enhance your software with additional functionality.

- This language is highly portable, helping developers build cross-platform applications.

- Golang is a popular choice for modern cloud-based development, especially since it offers ease of deployment and convenient APIs and SDKs.

5. Clouds and containers are shifting from being relevant to being necessary

Businesses continue to prioritize cloud adoption as it accelerates development, offers global availability, and reduces operational overhead compared to on-premises systems.

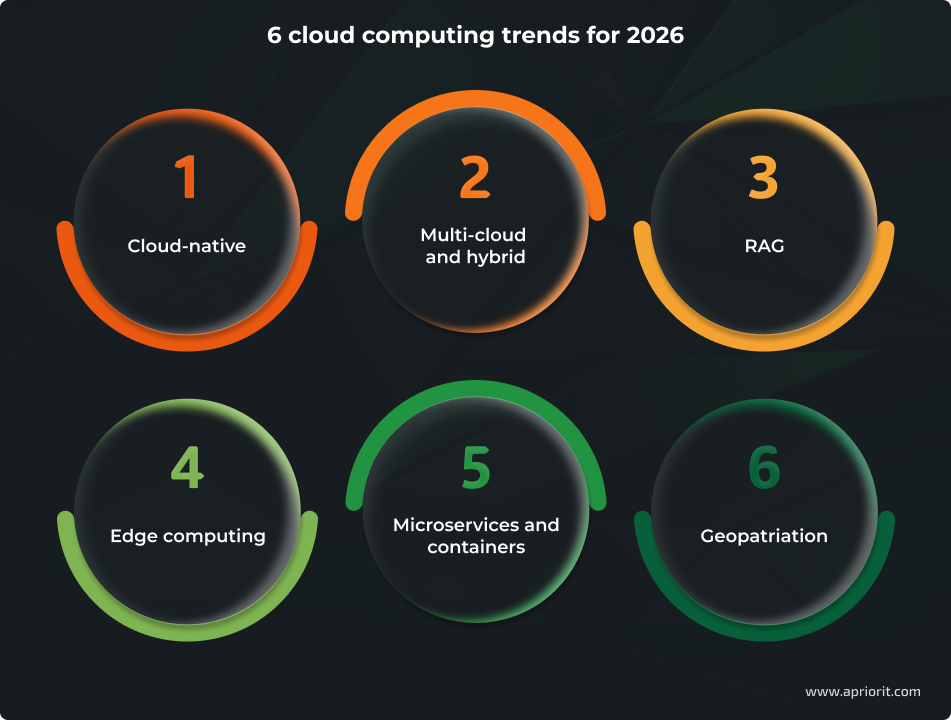

Let’s break down a few cloud development trends for 2026 that are worth your attention:

1. Cloud-native will be the baseline expectation in 2026 and no longer a “modern” approach. Technologies like Kubernetes, microservices, and service meshes are now standard for building scalable and resilient applications. This approach enables faster deployments, better resource utilization, and easier scaling. Software engineers should be ready to focus on horizontal scalability, failure tolerance, and automation from day one.

2. Multi-cloud and hybrid architectures will evolve from strategy to operational reality. Hybrid models balance existing on-premises systems with cloud services for greater flexibility and gradual modernization. Many projects adopt multi-cloud approaches to optimize costs and services across providers. Recent surveys show growing interest in hybrid and multi-cloud deployments:

Hybrid and multi-cloud deployments are continuing to increase in popularity, used by 30% and 23% of developers, respectively.

[…]

77% of backend developers are using at least one technology strongly associated with cloud native approaches.

Source: State of Cloud Native Development Q3 2025 report by SlashData and the CNCF

3. Retrieval-Augmented Generation (RAG) is an AI innovation that depends heavily on the cloud. Implementing RAG at scale requires massive compute power, distributed storage, and low-latency access to enterprise data, and cloud platforms are typically the most practical way to get such capabilities.

RAG is already widely adopted by hyperscalers. Google Cloud, Azure, AWS, and Alibaba Cloud offer RAG services, signaling its importance in enterprise cloud and AI development. Modern cloud applications leverage this approach to improve the user experience, as grounding LLM output in retrieved knowledge helps minimize contradictions and inconsistencies in generated text.

4. Edge computing becomes a practical extension of the cloud, as it helps organizations push processing closer to data sources. This reduces latency and bandwidth costs, which is essential for projects with real-time data processing such as autonomous vehicles, IoT, and healthcare monitoring. Coupled with edge AI, devices equipped with specialized chips can perform inference locally, improving speed and privacy while reducing reliance on centralized cloud resources.

5. Microservices and containers go hand in hand with cloud computing development. According to Docker’s 2025 State of Application Development Report, 92% of IT professionals reported using containers in their workflows, up from 80% in 2024. Software engineers tend to choose microservices and containers for faster CI/CD and better resilience. Microservices allow teams to scale individual components independently, speeding up feature delivery and reducing downtime. And containers ensure consistent environments across development, testing, and production, making multi-cloud and hybrid deployments easier.

6. Geopatriation is the relocation of workloads from global hyperscale clouds to sovereign or local environments. By 2030, 75% of enterprises are expected to have geopatriated workloads. Also, a Gartner survey reveals that 61% of CIOs and IT leaders in Western Europe will increase reliance on local cloud providers due to geopolitics.

For local cloud providers, this could mean a larger workload and more clients. And for developers, this shift introduces both challenges and opportunities. On the upside, geopatriation increases the demand for skills in multi-cloud development. On the downside, applications may need to be re-architected to comply with local data residency laws, security standards, and regulatory frameworks. Also, additional work may be necessary to adapt existing code to new cloud environments, integrate software with region-specific APIs, and ensure interoperability across multiple platforms.
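
To illustrate the RAG approach from item 3 above, here is a toy Python sketch of its two steps: retrieving the most relevant text and adding it to the prompt. It is deliberately simplified and rests on assumptions: the three sample documents are invented, bag-of-words cosine similarity stands in for learned embeddings and a vector store, and the resulting prompt would still have to be sent to an LLM, which is not shown.

```python
import math
import re
from collections import Counter

# Tiny in-memory knowledge base with invented sample texts.
DOCUMENTS = [
    "Refunds are processed within 14 days of the return request.",
    "Premium support is available on weekdays from 9 to 18 CET.",
    "Passwords must be rotated every 90 days.",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words vector (a crude stand-in for learned embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = vectorize(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(query, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Augment the question with retrieved context before calling an LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How long do refunds take?"))
```

At enterprise scale, the in-memory list becomes distributed storage and the similarity search becomes a low-latency index over large volumes of data, which is where the compute and storage demands mentioned above come from.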

A few more trends to keep an eye on

Of course, the trends we’ve analyzed above are just the tip of the iceberg.

Let’s take a look at a few more trends that reflect shifts in cost governance, data strategy, compliance, infrastructure scale, language choice, and modernization practices:

- FinOps is the merging of finance, engineering, and operations to optimize cloud and technology spend. And as cloud and AI budgets surge, FinOps is becoming essential. By 2026, FinOps will be pivotal to delivering financial accountability and efficiency across tech investments.

- Data mesh decentralizes data architecture by making domain teams owners of “data products,” avoiding the bottlenecks of centralized data lakes. Domain-aligned ownership speeds up analytics, enhances data quality, and reduces IT backlogs.

- The IoT ecosystem is rapidly scaling, as the number of connected IoT devices is expected to hit 39 billion by 2030 (growing at a 13.2% CAGR). With rising interest in devices and wearables, it’s no surprise that we expect further demand for skilled IoT developers. There’s a need for specialists who are able to build scalable and secure applications that can manage the influx of data from IoT devices, as well as for engineers who can analyze existing firmware and build custom firmware.

- Java has successfully gone through its renaissance on the JVM. The 2025 Jakarta EE Developer Survey indicates a rapid shift toward newer Java LTS versions. It reports that Java 21 adoption rose to 43%, while Java 17 and Java 11 remain widely used in enterprise environments. This positions Java as a robust, AI-ready, cloud-native enterprise language in 2026.

- Legacy systems can now be modernized with AI. Enterprises are increasingly adapting legacy systems using deep modernization assessments, containerization, microservices, and AI-assisted code refactoring.

- Digital provenance verifies the origin and integrity of software, data, and AI-generated content using tools like SBOMs, attestation databases, and watermarking. Establishing digital provenance is critical because of rising risks from code tampering, abandoned open-source projects, and deepfake-driven disinformation. Regulatory mandates like the EU AI Act also require provenance tracking and watermarking for AI content.
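
The integrity side of digital provenance can be sketched as a digest check against an SBOM-like record. The Python example below is a simplified illustration, not a real SBOM implementation: the component name, the sample content, and the `verify_component` helper are hypothetical, and actual SBOM formats such as SPDX or CycloneDX carry far more metadata than a name and a hash.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical, simplified SBOM: component name -> expected digest.
artifact = b"example library contents"
sbom = {"example-lib-1.0.tar.gz": sha256_hex(artifact)}


def verify_component(name: str, content: bytes, sbom: dict[str, str]) -> bool:
    """Return True only if the component is listed and its digest matches."""
    expected = sbom.get(name)
    return expected is not None and sha256_hex(content) == expected


print(verify_component("example-lib-1.0.tar.gz", artifact, sbom))            # True
print(verify_component("example-lib-1.0.tar.gz", artifact + b"!", sbom))     # False
print(verify_component("unlisted-lib-2.0.tar.gz", artifact, sbom))           # False
```

A tampered file and an unlisted component both fail the check, which is the kind of signal a build pipeline can use to stop before a compromised dependency is shipped.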

Stay on top of the latest trends with Apriorit

A proven way to efficiently implement emerging software development trends in your solution is by outsourcing such tasks to qualified engineers who already have real-life experience with cutting-edge technologies.

At Apriorit, we help tech leaders turn emerging trends into competitive advantages. Our business analysts and technical specialists choose only the most relevant and suitable technologies that can bring actual value.

Here’s how our services can support your 2026 strategy:

- Develop interactive chatbots, text processing modules, and image detection solutions and smoothly integrate them in your products

- Build custom cybersecurity solutions, from MVPs to comprehensive platforms, and provide functionality for efficient vulnerability detection and management

- Develop custom secrets management functionality and enhance the security of complex solutions like MDM software

- Speed up microservices development with code generation

- Assist with preparing software for post-quantum cryptography

- Build efficient and scalable multi-cloud solutions

- Deliver top-notch and secure SaaS products that scale

Revamping your software using these technologies is a great way to make your product more efficient and more attractive to your target audience.

Now is the time to assess your development practices, invest in AI-driven tools, and strengthen your security posture. Apriorit engineers are ready to help with whatever tasks and strategy you choose.

FAQ

What are the most impactful software development trends for 2026?

In 2026, lots of evolving technologies are expected to empower software development processes. We expect the key software development trends to include:

- AI-assisted development

- Advanced cybersecurity practices

- Platform engineering

- Increased adoption of high-performance languages like Rust and Go

- Cloud-native architectures powered with microservices, RAG, and edge computing

Emerging concepts such as FinOps, data mesh, and geopatriation will also shape strategies. All of these trends aim to improve scalability, security, and cost efficiency while enabling faster delivery and compliance with evolving laws and regulations.

How can AI tools improve software development efficiency and reduce costs?

Integrating AI into software development workflows can help your team:

- Generate code efficiently by quickly creating standard functions and snippets

- Automate test creation by suggesting test cases based on code structure

- Verify that test coverage aligns with business needs

- Accelerate debugging by analyzing error patterns, identifying potential causes, and reducing time spent on troubleshooting

- Simplify documentation creation by generating technical docs from comments and signatures

- Speed up cross-language development processes by translating software code across languages

- Improve code quality by reviewing existing code and recommending improvements for performance and maintainability

- Support safe prototyping by synthesizing data for testing without exposing sensitive real-world information

When using relevant AI tools and technologies, engineers gain more time to focus on architectural decisions and non-trivial tasks. This shortens the time to market and lowers development costs. However, AI works best as an assistant, not a replacement. Development teams must address risks such as data leaks, bias, and compliance to maximize ROI.

When should a company adopt platform engineering, and what are the pros and cons?

Platform engineering is one of the top software development trends in 2026. It’s ideal for scale-ups and enterprises with large teams, complex microservices, and strict compliance needs. This approach centralizes infrastructure, CI/CD, and security policies, improving consistency and developer productivity. Pros include faster deployments and reduced cognitive load.

However, startups, R&D projects, and small businesses may find it unnecessary. Skip platform engineering if your infrastructure is relatively simple, your teams don’t deploy often, your project only includes a few services, or you’re still figuring out your product direction. Cons include high upfront investment, ongoing maintenance, and the risk of overengineering.

Why are Rust and Go considered high-performance languages, and which projects will they benefit most?

Rust is ideal for IoT, embedded systems, and cloud infrastructure, as it offers:

- Memory safety

- Predictable performance

- Energy efficiency

- Zero-cost abstractions

- A strong concurrency model

Go excels in building scalable microservices, networking tools, and cloud-native applications thanks to its:

- Simplicity

- Concurrency support

- Fast compilation for rapid development cycles

- Rich standard library

- Built-in garbage collection

Both Rust and Go reduce runtime errors and improve security, making them strong choices for performance-critical and distributed systems.

What cybersecurity practices should you embed in the software development lifecycle in 2026?

Key practices include:

- Secure SDLC principles

- DevSecOps integration

- Zero trust architecture

- SBOM generation

- Regular security audits

Advanced measures like AI-driven threat detection, post-quantum cryptography, and observability platforms are gaining traction. Aligning with frameworks such as the NIST Cybersecurity Framework and the Secure by Design pledge ensures compliance and resilience against evolving threats.

What does geopatriation mean for cloud strategy, and how will it affect multi-cloud deployments?

Geopatriation refers to moving workloads from global hyperscale clouds to local or sovereign environments. By 2030, most enterprises are expected to adopt this approach due to regulatory requirements, geopolitical reasons, and security considerations.

It’s likely that geopatriation will increase demand for multi-cloud skills and compliance expertise, as applications may need to be re-architected to support local data residency laws and interoperability across diverse platforms.